I broke GPT-2: How I proved Semantic Collapse using Geometry (The Ainex Limit)

Source: Dev.to

TL;DR

I forced GPT‑2 to learn from its own output for 20 generations. By Generation 20 the model had lost 66.86 % of its semantic volume (the convex‑hull volume of its output embeddings) and was hallucinating nonsense that, in effect, made crocodiles part of the fundamental laws of physics. Below is the math and code behind the experiment.

The “Mad Cow” Disease of AI

Everyone is talking about the data shortage and the industry’s proposed solution: synthetic data, i.e. training models on data generated by other models. It sounds like a perpetual‑motion machine, but photocopy a photocopy enough times and you don’t get a sharper copy; you get noise. I wanted to locate the exact “breaking point” where an LLM disconnects from reality, a point I call the Ainex Limit.

The Problem with Perplexity

Most researchers use perplexity to gauge model performance, but perplexity only measures how “surprised” a model is by a sequence of tokens, in other words how confident its predictions are. A fluent, confidently wrong statement can still score a low perplexity. I needed a metric that measures meaning, not confidence.
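To make the perplexity point concrete, here is a minimal sketch that computes perplexity from the probabilities a model assigned to each token of a sentence. The probability values are made up for illustration; with a real model you would read them off its softmax output.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood) of the observed tokens.

    `token_probs` holds the probability the model gave to each token that
    actually occurred. Nothing here checks whether the sentence is *true*.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


# Hypothetical per-token probabilities, for illustration only.
confident_nonsense = [0.9, 0.85, 0.95, 0.9]  # fluent but false sentence
hesitant_fact = [0.3, 0.2, 0.4, 0.25]        # true sentence, unusual phrasing

print(f"{perplexity(confident_nonsense):.2f}")  # low: model is confident
print(f"{perplexity(hesitant_fact):.2f}")       # high: model is "surprised"
```

The false sentence wins on perplexity simply because the model was sure of each token. That is the blind spot a meaning‑based metric is meant to cover.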

My Approach: Geometry over Probability

I treated the model’s “brain” as a geometric space.

- Embeddings: Convert every generated text into high‑dimensional vectors.

- PCA Projection: Reduce these vectors to three dimensions for visualisation and measurement.

- Convex Hull Volume: Compute the volume of the convex hull that encloses the projected points.
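The three steps can be sketched with NumPy and SciPy as follows. This is not the repo's code, and it rests on a few assumptions. The embedding model isn't named, so random 768‑dimensional vectors stand in for real embeddings. PCA is done with a plain SVD. The basis is fit once on the human baseline and reused for every generation, so that all hull volumes are measured in the same coordinate system.

```python
import numpy as np
from scipy.spatial import ConvexHull


def fit_pca_basis(reference: np.ndarray, n_components: int = 3):
    """Fit a PCA basis (mean + top principal axes) on reference embeddings."""
    mean = reference.mean(axis=0)
    # Rows of vt are principal directions, sorted by explained variance.
    _, _, vt = np.linalg.svd(reference - mean, full_matrices=False)
    return mean, vt[:n_components]


def project(embeddings: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Project (n_samples, dim) embeddings onto the fitted basis -> (n_samples, 3)."""
    return (embeddings - mean) @ components.T


def hull_volume(points_3d: np.ndarray) -> float:
    """Volume of the convex hull around the points (needs >= 4 non-coplanar points)."""
    return float(ConvexHull(points_3d).volume)


# Stand-in data: 768 is just an illustrative embedding width.
rng = np.random.default_rng(0)
human = rng.normal(size=(500, 768))            # "healthy" spread
collapsed = 0.5 * rng.normal(size=(500, 768))  # a shrunken generation

mean, comps = fit_pca_basis(human)
v_human = hull_volume(project(human, mean, comps))
v_collapsed = hull_volume(project(collapsed, mean, comps))
print(f"volume loss: {100 * (1 - v_collapsed / v_human):.1f} %")
```

Reusing the baseline's basis matters. If PCA were refit on each generation, every volume would live in its own coordinate system, and the numbers would not be directly comparable.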

Hypothesis: A healthy model occupies a large, expansive volume (creativity). A collapsing model shrinks into a dense, repetitive “black hole.”

The Experiment

- Model: GPT‑2 Small (124 M)

- Method: Recursive loop – Train → Generate → Train

- Generations: 20

- Hardware: Single T4 GPU
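The Train → Generate → Train loop can be sketched as below. The post doesn't say whether each generation fine‑tunes the previous checkpoint or restarts from pretrained GPT‑2, or how many samples are generated per round. The loop therefore takes hypothetical `train` and `generate` callables. A toy unigram word model stands in for GPT‑2 so the sketch runs without a GPU.

```python
import random
from collections import Counter
from typing import Callable, List, TypeVar

Model = TypeVar("Model")


def recursive_training(
    model: Model,
    human_corpus: List[str],
    train: Callable[[Model, List[str]], Model],
    generate: Callable[[Model, int], List[str]],
    generations: int,
    samples_per_gen: int,
) -> List[List[str]]:
    """Train -> Generate -> Train; each round trains only on the previous round's output."""
    corpus = human_corpus  # Gen 0 sees human data
    history = []
    for _ in range(generations):
        model = train(model, corpus)               # (re)train on the current corpus
        corpus = generate(model, samples_per_gen)  # synthetic data for the next round
        history.append(corpus)
    return history


# --- Hypothetical toy stand-in for GPT-2: a unigram word-frequency model ---
rng = random.Random(0)


def train_unigram(_model: Counter, corpus: List[str]) -> Counter:
    # The toy "model" is just the word frequencies of its training corpus.
    return Counter(corpus)


def generate_unigram(model: Counter, n: int) -> List[str]:
    # Sample n words in proportion to their learned frequency.
    words, weights = zip(*model.items())
    return rng.choices(words, weights=weights, k=n)


human = [f"w{i}" for i in range(200)] * 2  # 200 distinct "human" words
history = recursive_training(Counter(), human, train_unigram, generate_unigram,
                             generations=20, samples_per_gen=400)
sizes = [len(set(c)) for c in history]  # distinct words per generation
print(sizes)
```

Because the toy generator can only emit words it has already seen, the distinct‑word count can never go up. A rare word that is missed once is gone for good, which is a miniature version of the collapse this experiment measures.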

The loop ran smoothly for the first five generations; after that the metrics began to deteriorate dramatically.

The Results: The “Crocodile” Artifact

By Generation 20 the semantic volume ($V_{\text{hull}}$) had collapsed by 66.86 %. More striking were the hallucinations.

Control prompt: “The fundamental laws of physics dictate that …”

| Generation | Output (excerpt) |
|---|---|
| Gen 0 (human data) | “…electrons are composed of a thin gas.” (on‑topic: physics context intact) |
| Gen 10 | “…iron oxide emails sent before returning home.” (logic breakdown) |
| Gen 20 | “…women aged 15 shields against crocodiles.” (total semantic death) |

The model not only forgot physics; it invented a new reality where crocodiles are part of atomic laws. Because the model was training on its own output, this hallucination became “ground truth” for the next generation.
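For reference, here is how the 66.86 % figure translates. This assumes the loss is measured relative to the baseline hull volume $V_{\text{hull}}^{(0)}$:

$$
\Delta V_{\text{hull}} = \frac{V_{\text{hull}}^{(0)} - V_{\text{hull}}^{(20)}}{V_{\text{hull}}^{(0)}} \times 100\,\% = 66.86\,\%
\quad\Longrightarrow\quad
V_{\text{hull}}^{(20)} \approx 0.3314\,V_{\text{hull}}^{(0)}
$$

$$
\left(0.3314\right)^{1/3} \approx 0.69
$$

Volume scales with the cube of linear size. If the contraction were uniform, this loss would correspond to each of the three principal axes shrinking by roughly 31 %.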

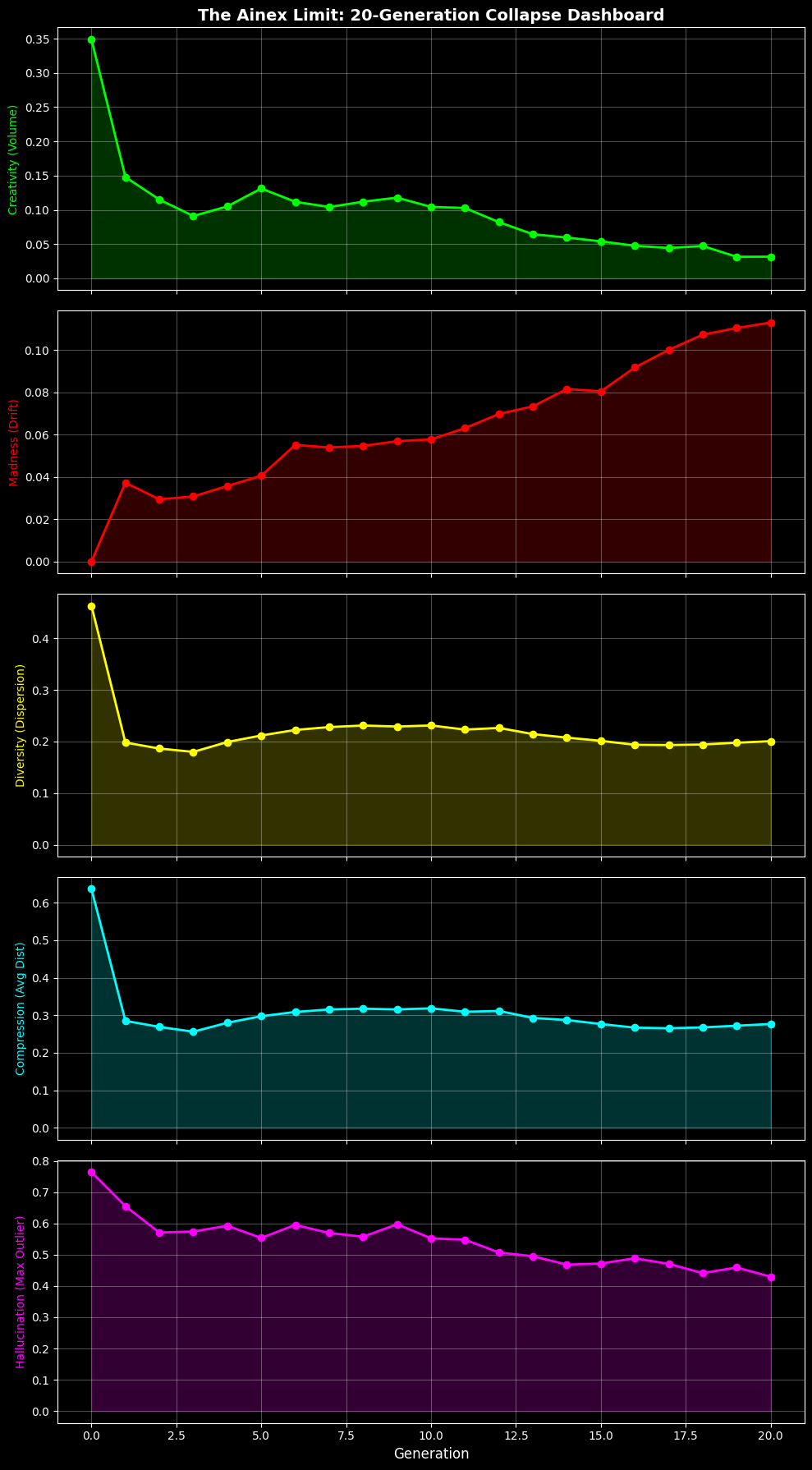

Fig 1: The Ainex Dashboard showing the correlation between volume loss and Euclidean drift.
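The post doesn't define “Euclidean drift.” One plausible reading, assumed here, is the distance between the centroid of a generation's embeddings and the centroid of the human baseline. The volume–drift correlation in the dashboard could then be a Pearson correlation across generations. Both function names are hypothetical.

```python
import numpy as np


def euclidean_drift(baseline: np.ndarray, generation: np.ndarray) -> float:
    """Distance between a generation's embedding centroid and the baseline centroid."""
    return float(np.linalg.norm(generation.mean(axis=0) - baseline.mean(axis=0)))


def drift_volume_correlation(drifts, volume_losses) -> float:
    """Pearson correlation between per-generation drift and volume loss."""
    return float(np.corrcoef(drifts, volume_losses)[0, 1])


base = np.zeros((10, 2))
shifted = base + np.array([3.0, 4.0])  # every point moved by (3, 4)
print(euclidean_drift(base, shifted))  # 5.0
```

A centroid distance only captures where the cloud moved, not how much it shrank. That is why it complements, rather than replaces, the hull volume.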

Visualizing the Fracture

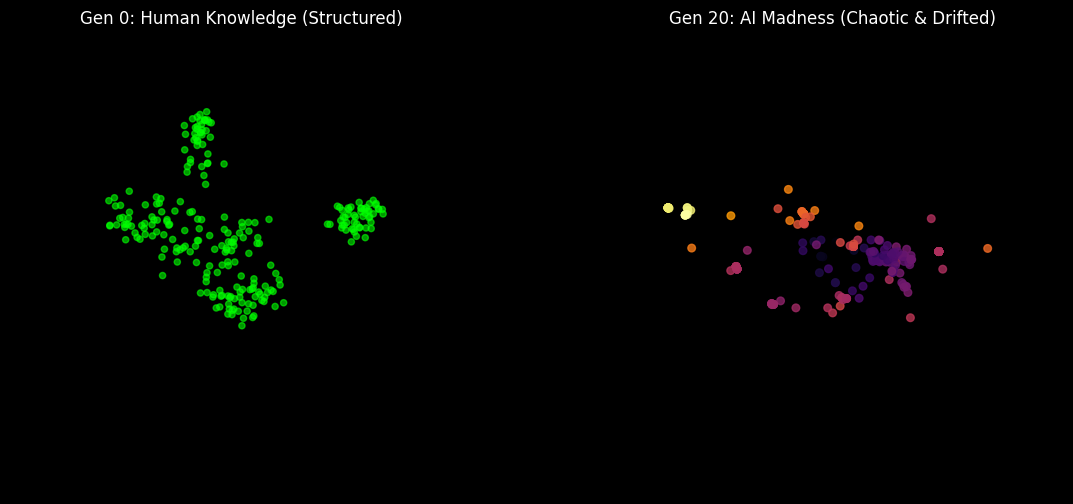

Using 3‑D PCA we can actually see the brain damage. Green points represent the healthy, diverse human baseline; magma‑colored points represent the collapsed AI—a tight, drifting cluster far from reality.

Fig 2: Drift from Human Baseline (green) to AI Madness (magma).
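A plot in the style of Fig 2 can be sketched with matplotlib's 3‑D axes. The sketch assumes the points were already projected onto a shared 3‑D PCA basis. Random synthetic clouds that shrink and shift stand in for real generations, and `plot_drift` is a hypothetical helper.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen; drop this line in a notebook
import matplotlib.pyplot as plt
import numpy as np
from matplotlib.figure import Figure


def plot_drift(baseline_3d: np.ndarray, generations_3d: list) -> Figure:
    """3-D scatter: human baseline in green, generations coloured along 'magma'."""
    fig = plt.figure(figsize=(7, 6))
    ax = fig.add_subplot(projection="3d")
    ax.scatter(*baseline_3d.T, color="green", s=8, label="Human baseline")

    cmap = plt.get_cmap("magma")
    n = len(generations_3d)
    for i, pts in enumerate(generations_3d):
        # Label only the first and last generation to keep the legend short.
        extra = {"label": f"Gen {i + 1}"} if i in (0, n - 1) else {}
        ax.scatter(*pts.T, color=cmap(i / max(n - 1, 1)), s=8, **extra)

    ax.set_xlabel("PC1")
    ax.set_ylabel("PC2")
    ax.set_zlabel("PC3")
    ax.legend()
    return fig


# Synthetic stand-ins: each generation shrinks and drifts a little further.
rng = np.random.default_rng(0)
human_3d = rng.normal(size=(300, 3))
gens_3d = [0.9 ** g * rng.normal(size=(300, 3)) + 0.2 * g for g in range(1, 21)]
fig = plot_drift(human_3d, gens_3d)
# fig.savefig("drift.png")
```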

Conclusion: The Ainex Limit

The experiment demonstrates that naïve synthetic training leads to irreversible model autophagy (self‑eating). Without geometric guardrails—such as the Ainex Metric proposed here—future models risk becoming not just inaccurate but confidently insane.
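The post doesn't specify what a geometric guardrail would look like in practice. One hypothetical form would be to halt synthetic training once the hull volume has lost more than some fraction of its baseline. The 25 % threshold below is an arbitrary placeholder, not a value from the experiment.

```python
def volume_guardrail(baseline_volume: float, current_volume: float,
                     max_loss: float = 0.25) -> bool:
    """Return True if synthetic training should stop.

    `max_loss` is a hypothetical threshold: the fraction of the baseline
    convex-hull volume that may be lost before training halts.
    """
    if baseline_volume <= 0:
        raise ValueError("baseline volume must be positive")
    loss = 1.0 - current_volume / baseline_volume
    return loss > max_loss


# With the Generation 20 ratio reported above (about 0.3314 of baseline):
print(volume_guardrail(1.0, 0.3314))
```

In a loop like the Train → Generate → Train cycle, this check would run after each generation's hull volume is computed.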

The code is open‑source; I invite the community to break it, fix it, or scale it.

Resources

- GitHub Repo:

- DOI: 10.5281/zenodo.18157801

Tags: #machinelearning #python #ai #datascience