How NVIDIA H100 GPUs on CoreWeave’s AI Cloud Platform Delivered a Record-Breaking Graph500 Run

Source: NVIDIA AI Blog

Record‑breaking Graph500 Benchmark

The world’s top‑performing system for graph processing at scale was built on a commercially available cluster.

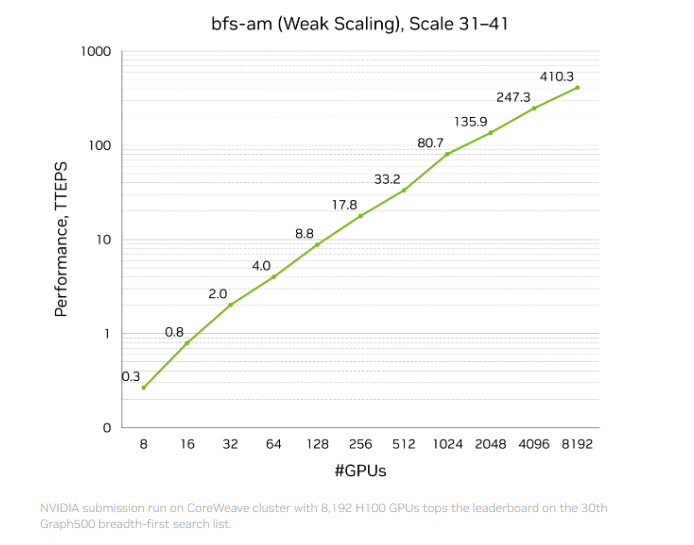

NVIDIA last month announced a record‑breaking benchmark result of 410 trillion traversed edges per second (TEPS), ranking No. 1 on the 31st Graph500 breadth‑first search (BFS) list.

The run was performed on an accelerated computing cluster hosted in a CoreWeave data center in Dallas, using 8,192 NVIDIA H100 GPUs to process a graph with 2.2 trillion vertices and 35 trillion edges. This performance is more than double that of comparable solutions on the list, including those hosted in national labs.

To put this in perspective, if every person on Earth had 150 friends, the resulting social graph would contain about 1.2 trillion edges. The NVIDIA-CoreWeave system can search through every such relationship in roughly three milliseconds.
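The comparison is simple arithmetic. The short sketch below reproduces it. It assumes a world population of about 8 billion, a figure the article does not state, and counts each friendship once per person, as a directed edge.

```python
# Back-of-the-envelope check of the social-graph comparison.
# Assumption: a world population of about 8 billion (not stated in the article).
population = 8e9
friends_per_person = 150
edges = population * friends_per_person  # each friendship counted once per person

teps = 410e12  # 410 trillion traversed edges per second
seconds = edges / teps

print(f"edges: {edges:.2e}")             # edges: 1.20e+12
print(f"time:  {seconds * 1e3:.2f} ms")  # time:  2.93 ms
```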

The breakthrough lies not only in raw speed but also in efficiency. A comparable entry among the top 10 used about 9,000 nodes, whereas the winning run used just over 1,000 nodes, delivering 3× better performance per dollar.
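The node count follows from the GPU total if each node holds eight GPUs, the common HGX H100 configuration. That per-node figure is an assumption here, because the article gives only 8,192 GPUs and "just over 1,000 nodes."

```python
# Rough node-count comparison for the two runs.
# Assumption: 8 H100 GPUs per node; the article states only the GPU total.
gpus = 8192
gpus_per_node = 8
nodes = gpus // gpus_per_node

comparable_nodes = 9000  # "about 9,000 nodes", per the article
ratio = comparable_nodes / nodes

print(nodes)            # 1024
print(f"{ratio:.1f}x")  # 8.8x
```

The node ratio is not the same as the 3× performance-per-dollar figure, which also depends on per-node cost and cannot be derived from the numbers given.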

NVIDIA leveraged its full‑stack compute, networking, and software technologies—including the CUDA platform, Spectrum‑X networking, H100 GPUs, and a new active‑messaging library—to push performance while minimizing hardware footprint. This demonstrates how the NVIDIA computing platform can democratize acceleration of the world’s largest sparse, irregular workloads, as well as dense workloads like AI training.

How Graphs at Scale Work

Graphs are the underlying information structure for modern technology. They capture relationships between pieces of information in massive webs, from social networks to banking apps.

For example, on LinkedIn a user’s profile is a vertex and connections to other users are edges. Some users have a handful of connections while others have tens of thousands, so density varies widely and the graph is sparse and irregular. Unlike the structured, dense data behind image and language models, graphs are unpredictable.
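Large sparse graphs are commonly stored in a compressed sparse row (CSR) layout: one array of neighbor IDs plus an offsets array marking where each vertex's list begins. The toy edge list below is invented for illustration. It shows how a single well-connected vertex produces rows of very different lengths.

```python
# A tiny "social graph" in compressed sparse row (CSR) form.
# The edge list is invented for illustration.
edges = [
    (0, 1), (0, 2), (0, 3), (0, 4), (0, 5),  # vertex 0: a highly connected user
    (1, 2),
    (3, 4),
    (5, 6),
]


def to_csr(num_vertices, edge_list):
    """Build CSR arrays (offsets, neighbors) for an undirected graph."""
    adjacency = [[] for _ in range(num_vertices)]
    for u, v in edge_list:
        adjacency[u].append(v)
        adjacency[v].append(u)  # undirected: store both directions
    offsets = [0]
    neighbors = []
    for nbrs in adjacency:
        neighbors.extend(sorted(nbrs))
        offsets.append(len(neighbors))  # end of this vertex's row
    return offsets, neighbors


offsets, neighbors = to_csr(7, edges)
degrees = [offsets[v + 1] - offsets[v] for v in range(7)]
print(degrees)  # [5, 2, 2, 2, 2, 2, 1]
```

The neighbors of vertex `v` are `neighbors[offsets[v]:offsets[v + 1]]`. Scaled up to users with a handful versus tens of thousands of connections, rows of such different lengths are what make memory access and load balancing irregular.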

The Graph500 BFS benchmark measures a system’s ability to navigate this irregularity at scale. A BFS starts from a root vertex and visits every reachable vertex and edge, level by level; the score, in TEPS, is the number of edges traversed per second. A high TEPS score indicates superior interconnects, memory bandwidth, and software that can exploit the system’s capabilities. In essence, it measures how fast a system can “think” and associate disparate pieces of information.
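Below is a minimal sketch of a level-synchronous BFS over a CSR graph, timed to produce a TEPS figure. The edge-counting rule (each undirected edge in the reached component counted once) is a simplification of the official Graph500 definition. Python timings say nothing about real hardware.

```python
import time


def bfs_teps(offsets, neighbors, root):
    """Level-synchronous BFS over a CSR graph; returns (parents, teps).

    Each level expands the whole current frontier before the next begins,
    the structure commonly used by distributed BFS implementations.
    """
    n = len(offsets) - 1
    parents = [-1] * n
    parents[root] = root
    frontier = [root]
    start = time.perf_counter()
    while frontier:
        next_frontier = []
        for u in frontier:
            for v in neighbors[offsets[u]:offsets[u + 1]]:
                if parents[v] == -1:  # first visit claims the vertex
                    parents[v] = u
                    next_frontier.append(v)
        frontier = next_frontier
    # Guard against a zero timer reading on very small graphs.
    elapsed = max(time.perf_counter() - start, 1e-9)

    # Simplified edge count: every CSR entry of a reached vertex, halved because
    # each undirected edge is stored once in each direction.
    reached = [v for v in range(n) if parents[v] != -1]
    edges = sum(offsets[v + 1] - offsets[v] for v in reached) // 2
    return parents, edges / elapsed


# Path 0-1-2-3 plus an isolated vertex 4, in CSR form.
offsets = [0, 1, 3, 5, 6, 6]
neighbors = [1, 0, 2, 1, 3, 2]
parents, teps = bfs_teps(offsets, neighbors, root=0)
print(parents)  # [0, 0, 1, 2, -1]
```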

Current Techniques for Processing Graphs

GPUs excel at accelerating dense workloads such as AI training, while the largest sparse linear‑algebra and graph workloads have traditionally been handled by CPUs.

At these scales a graph is partitioned across many compute nodes, so CPUs must move data between nodes as they traverse it. As graphs grow to trillions of edges, this constant movement creates bottlenecks and network congestion.
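The sketch below shows why partitioning creates traffic. It assigns vertex v to node v % P, a simple 1D scheme assumed for illustration (production systems partition more carefully). It then measures what fraction of edges connect vertices on different nodes, since each such edge means a network message during traversal.

```python
def remote_edge_fraction(edge_list, num_nodes):
    """Fraction of edges whose endpoints live on different nodes.

    Assumption for illustration: vertex v is stored on node v % num_nodes.
    """

    def owner(v):
        return v % num_nodes

    remote = sum(1 for u, v in edge_list if owner(u) != owner(v))
    return remote / len(edge_list)


edges = [(0, 1), (0, 2), (0, 3), (1, 2), (2, 3), (3, 4), (4, 5), (5, 0)]
for p in (1, 2, 4):
    print(p, remote_edge_fraction(edges, p))
# 1 0.0
# 2 0.875
# 4 1.0
```

If endpoints were assigned to nodes uniformly at random, an edge would stay local with probability 1/P, so roughly 1 - 1/P of all edges would cross node boundaries. Adding nodes makes nearly every edge remote.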

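Developers mitigate this with software techniques like active messages. Instead of moving graph data to the computation, an active message carries a small piece of work to the node that holds the data, which processes it in place. Many such messages can be aggregated into larger transfers, improving network efficiency. However, active messaging was originally designed for CPUs and is limited by CPU throughput and compute capabilities.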
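The active-message idea can be sketched in a few lines of Python. The `Node` class, `send`, `flush`, and the `mark_visited` handler are hypothetical names chosen for illustration. They are not the API of NVIDIA's library, which the article does not describe. Each message names a handler that runs on the receiving node against its own data, and messages bound for the same node are buffered and delivered as one transfer.

```python
from collections import defaultdict


class Node:
    """A simulated compute node that owns a slice of the graph's vertex state."""

    def __init__(self, rank):
        self.rank = rank
        self.visited = set()             # state for the vertices this node owns
        self.outbox = defaultdict(list)  # destination rank -> buffered messages

    def send(self, dest, handler, *args):
        # Buffer rather than transmit immediately, so that many small messages
        # bound for the same node can travel as one aggregated transfer.
        self.outbox[dest].append((handler, args))

    def flush(self, nodes):
        """Deliver all buffered messages; returns the number of transfers used."""
        transfers = 0
        for dest, batch in self.outbox.items():
            transfers += 1  # one (simulated) network transfer per destination
            for handler, args in batch:
                handler(nodes[dest], *args)  # handler runs against the receiver's data
        self.outbox.clear()
        return transfers


def mark_visited(node, vertex):
    """Hypothetical active-message handler, executed on the vertex's owner."""
    node.visited.add(vertex)


nodes = [Node(0), Node(1)]
for v in [1, 3, 5, 7]:  # odd vertices are owned by rank 1 under v % 2
    nodes[0].send(v % 2, mark_visited, v)
transfers = nodes[0].flush(nodes)
print(transfers, sorted(nodes[1].visited))  # 1 [1, 3, 5, 7]
```

Aggregation trades a little latency for far fewer network operations. This matters when each message carries only a vertex ID or two.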

Reengineering Graph Processing for the GPU

To accelerate the BFS run, NVIDIA engineered a full‑stack, GPU‑only solution that reimagines data movement across the network.

- A custom software framework built on InfiniBand GPUDirect Async (IBGDA) and the NVSHMEM parallel‑programming interface enables GPU‑to‑GPU active messages.

- With IBGDA, a GPU can communicate directly with the InfiniBand NIC, allowing hundreds of thousands of GPU threads to send active messages simultaneously—far beyond the few hundred threads possible on a CPU.

- Active messaging now runs entirely on GPUs, bypassing the CPU and fully exploiting the massive parallelism and memory bandwidth of NVIDIA H100 GPUs for sending, moving, and processing messages.
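A breadth-first search can be expressed entirely as active messages between GPUs. The sketch below simulates this in plain Python under stated assumptions. Vertex v is owned by processing element (PE) v % num_pes, each PE expands only the vertices it owns, and an ordinary loop stands in for the many GPU threads that would expand a frontier concurrently. It illustrates the pattern only, not NVIDIA's IBGDA/NVSHMEM implementation.

```python
from collections import defaultdict


def distributed_bfs(adjacency, num_pes, root):
    """Level-synchronous BFS in which simulated PEs exchange active messages.

    Returns (parents, messages_sent). Illustrative assumptions: vertex v is
    owned by PE v % num_pes, and a plain loop stands in for GPU threads.
    """

    def owner(v):
        return v % num_pes

    parents = [{} for _ in range(num_pes)]  # each PE records parents of its own vertices
    frontiers = [[] for _ in range(num_pes)]
    parents[owner(root)][root] = root
    frontiers[owner(root)].append(root)
    messages_sent = 0

    def visit(pe, vertex, parent, next_frontiers):
        # Active-message handler: runs on the owning PE and touches only its state.
        if vertex not in parents[pe]:
            parents[pe][vertex] = parent
            next_frontiers[pe].append(vertex)

    while any(frontiers):
        # Phase 1: each PE expands its local frontier into per-destination buffers.
        outboxes = [defaultdict(list) for _ in range(num_pes)]
        for pe in range(num_pes):
            for u in frontiers[pe]:
                for v in adjacency[u]:
                    outboxes[pe][owner(v)].append((v, u))
                    messages_sent += 1  # includes messages a PE sends to itself
        # Phase 2, after an implicit barrier: deliver buffers and run the handlers.
        next_frontiers = [[] for _ in range(num_pes)]
        for pe in range(num_pes):
            for dest, batch in outboxes[pe].items():
                for vertex, parent in batch:
                    visit(dest, vertex, parent, next_frontiers)
        frontiers = next_frontiers

    merged = {}
    for local in parents:
        merged.update(local)
    return merged, messages_sent


# A 4-cycle 0-1-3-2-0, split across two PEs.
adjacency = [[1, 2], [0, 3], [0, 3], [1, 2]]
parents, messages = distributed_bfs(adjacency, num_pes=2, root=0)
print(sorted(parents.items()), messages)  # [(0, 0), (1, 0), (2, 0), (3, 2)] 8
```

A vertex's parent is set only by the handler on its owning PE. No PE ever writes another PE's state directly; the only shared step is the message exchange between levels.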

Running on the stable, high‑performance infrastructure of NVIDIA partner CoreWeave, this orchestration doubled the performance of comparable runs while using a fraction of the hardware and cost.

Accelerating New Workloads

The breakthrough has massive implications for high‑performance computing (HPC). Fields such as fluid dynamics, weather forecasting, and cybersecurity rely on sparse data structures and communication patterns similar to those in large graphs.
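As an illustration of this shared pattern, the sketch below multiplies a sparse matrix stored in CSR form by a vector. The example matrix is a small 1D finite-difference Laplacian, chosen here as a stand-in for the stencil operators found in simulation codes. The inner loop's indirect reads of `x[columns[k]]` follow the same pattern as walking a vertex's adjacency list.

```python
def csr_spmv(offsets, columns, values, x):
    """Compute y = A @ x for a sparse matrix A stored in CSR form.

    Only the stored nonzeros are touched, and x is read at data-dependent
    positions given by the column indices.
    """
    y = [0.0] * (len(offsets) - 1)
    for row in range(len(offsets) - 1):
        for k in range(offsets[row], offsets[row + 1]):
            y[row] += values[k] * x[columns[k]]
    return y


# 1D finite-difference Laplacian on 4 points: tridiagonal [-1, 2, -1].
offsets = [0, 2, 5, 8, 10]
columns = [0, 1, 0, 1, 2, 1, 2, 3, 2, 3]
values = [2.0, -1.0, -1.0, 2.0, -1.0, -1.0, 2.0, -1.0, -1.0, 2.0]
y = csr_spmv(offsets, columns, values, [1.0, 1.0, 1.0, 1.0])
print(y)  # [1.0, 0.0, 0.0, 1.0]
```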

For decades, these domains have been tethered to CPUs at the largest scales, even as data grew from billions to trillions of edges. NVIDIA’s winning Graph500 result, along with two other top-10 entries, validates a new GPU-centric approach for HPC at scale.

With NVIDIA’s full‑stack orchestration of computing, networking, and software, developers can now use technologies like NVSHMEM and IBGDA to efficiently scale their largest HPC applications, bringing supercomputing performance to commercially available infrastructure.

Stay up to date on the latest Graph500 benchmarks and learn more about NVIDIA networking technologies.