Building an AI Chatbot That Answers Questions Using Private Data (RAG Overview)

Source: Dev.to

Introduction

Most AI chatbots work well—until you ask them something specific.

Large language models don’t have access to your private documents or internal knowledge. When context is missing, they fill in the gaps by guessing, which leads to hallucinations and unreliable answers.

In this post we’ll walk through how to build an AI chatbot that answers questions using private data by applying Retrieval‑Augmented Generation (RAG), and explain why this approach is more reliable than prompt‑only chatbots.

Why Prompt‑Only Chatbots Break Down

Out of the box, LLMs:

- Don’t know your internal or private data

- Can’t access up‑to‑date information

- Generate answers even when they’re uncertain

This becomes a real problem for:

- Internal tools

- Documentation assistants

- Customer support bots

- Knowledge‑based applications

Prompt engineering alone doesn’t fix this, because the model still lacks the necessary context.

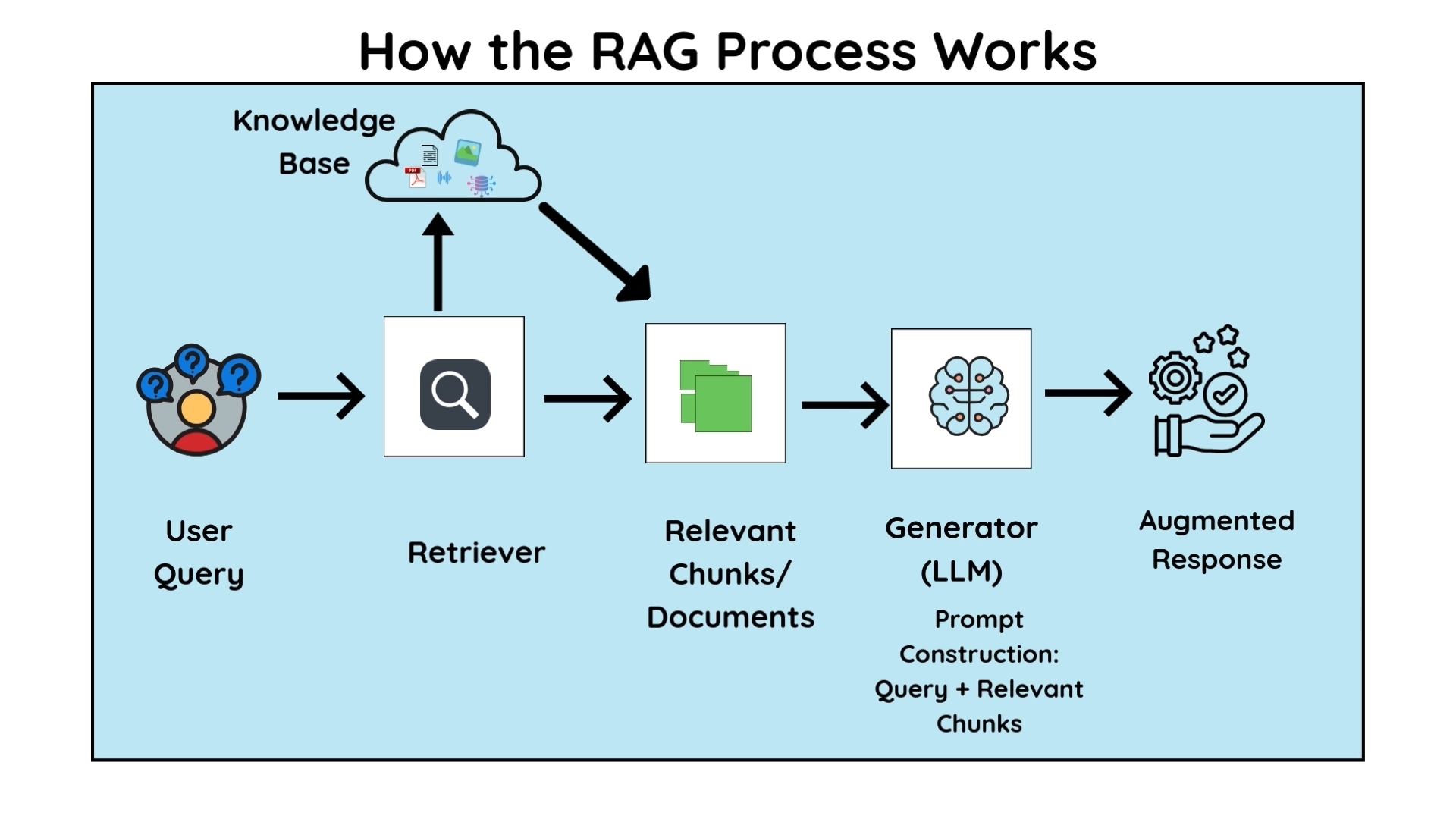

What Retrieval‑Augmented Generation (RAG) Actually Does

Retrieval‑Augmented Generation (RAG) changes how a chatbot answers questions. Instead of relying only on what the model learned during training, a RAG chatbot:

- Retrieves relevant information from your data source

- Passes that information into the prompt

- Generates a response grounded in the retrieved context
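The middle step, passing retrieved information into the prompt, is mostly string assembly. The sketch below is one hypothetical way to do it: the function name `build_grounded_prompt`, the instruction wording and the sample refund text are illustrative assumptions, not a fixed template. Telling the model to admit when the context lacks the answer is a common way to discourage guessing.

```python
from typing import List


def build_grounded_prompt(question: str, chunks: List[str]) -> str:
    """Put retrieved chunks in front of the question (hypothetical template)."""
    # Number each chunk so the answer can be traced back to its source text.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    # Made-up chunks standing in for whatever the retrieval step returned.
    retrieved = [
        "Refunds are processed within 5 business days.",
        "Refund requests must be submitted through the billing portal.",
    ]
    print(build_grounded_prompt("How long do refunds take?", retrieved))
```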

A useful way to think about it:

- Prompt‑only chatbots take a closed‑book exam.

- RAG systems take an open‑book exam.

Because answers are grounded in retrieved documents, they tend to be more accurate and more consistent.

High‑Level Architecture

A typical RAG chatbot includes:

- A user query

- A retrieval layer (search or vector similarity)

- Relevant document chunks

- An LLM that generates the final answer

Why this separation matters

- Retrieval handles accuracy: it decides which facts the model gets to see

- The language model handles natural language generation
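To make the separation concrete, here is a minimal end-to-end sketch, assuming a toy setup. It swaps in bag-of-words cosine similarity for a real embedding model, and `generate` is a placeholder for whatever LLM call you use; all function names and sample documents are hypothetical. The point is the boundary: `retrieve` alone chooses the context, and the LLM only turns that context into an answer.

```python
import math
import re
from collections import Counter
from typing import Callable, List


def vectorize(text: str) -> Counter:
    # Lowercased word counts: a stand-in for a real embedding model (assumption).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Retrieval layer: rank chunks by similarity to the query, keep the top k."""
    q = vectorize(query)
    return sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)[:k]


def answer(query: str, chunks: List[str], generate: Callable[[str], str], k: int = 2) -> str:
    """Pipeline: retrieval picks the context; the LLM only writes the answer."""
    context = retrieve(query, chunks, k)
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}\nAnswer:"
    return generate(prompt)


if __name__ == "__main__":
    docs = [
        "The VPN must be enabled before accessing the staging cluster.",
        "Expense reports are due on the last Friday of each month.",
        "Staging deploys run automatically after merges to main.",
    ]

    def echo_llm(prompt: str) -> str:
        # Placeholder "LLM" that returns its prompt, so the sketch runs offline.
        return prompt

    print(answer("When are expense reports due?", docs, echo_llm, k=1))
```

Because `generate` is just a function argument, you can swap the model without touching retrieval, and improve retrieval without touching the model.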

When RAG Is the Right Approach

RAG is a good fit when:

- The data is private or internal

- Accuracy is more important than creativity

- The knowledge base changes over time
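The last point works because, in a RAG system, changing what the chatbot knows means changing the index, not the model. The sketch below is a hypothetical in-memory `KeywordIndex`, assuming simple keyword-overlap scoring in place of a real vector store. An edited document is re-indexed under the same ID, and a deleted one stops appearing in results.

```python
import re
from collections import Counter
from typing import Dict, List


def _terms(text: str) -> Counter:
    # Lowercased word counts used for keyword-overlap scoring (assumption).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


class KeywordIndex:
    """Tiny in-memory index keyed by document ID (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._terms: Dict[str, Counter] = {}
        self._text: Dict[str, str] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Adding or editing a document overwrites its entry; the LLM is untouched.
        self._text[doc_id] = text
        self._terms[doc_id] = _terms(text)

    def delete(self, doc_id: str) -> None:
        self._text.pop(doc_id, None)
        self._terms.pop(doc_id, None)

    def search(self, query: str, k: int = 1) -> List[str]:
        # Score = number of shared query terms (Counter & keeps minimum counts).
        q = _terms(query)
        scores = {d: sum((q & t).values()) for d, t in self._terms.items()}
        ranked = sorted(scores, key=scores.get, reverse=True)
        return [self._text[d] for d in ranked[:k] if scores[d] > 0]


if __name__ == "__main__":
    index = KeywordIndex()
    index.upsert("pto-policy", "Employees get 20 days of paid time off per year.")
    # The policy changes: re-index the document, no retraining involved.
    index.upsert("pto-policy", "Employees get 25 days of paid time off per year.")
    print(index.search("How many days of paid time off?"))
```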

Common use cases include:

- Internal documentation assistants

- Customer support chatbots

- Knowledge‑base search tools

- Personal document Q&A systems

Common RAG Mistakes

- Poor document chunking

- Weak retrieval configuration

- Passing too much context into the prompt

- Assuming a larger model will fix retrieval problems
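Two of these mistakes, poor chunking and oversized context, can be addressed with a few lines of preprocessing. The sketch below assumes word-based chunks with a small overlap (so a sentence cut at a boundary still appears whole in one chunk) and uses a character budget as a rough proxy for a token limit. The function names and default sizes are illustrative assumptions; tune them for your documents and model.

```python
from typing import List


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into windows of `size` words; neighbours share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def fit_context(ranked_chunks: List[str], max_chars: int = 2000) -> List[str]:
    """Keep the highest-ranked chunks until the character budget would be exceeded."""
    selected, used = [], 0
    for chunk in ranked_chunks:
        if used + len(chunk) > max_chars:
            break
        selected.append(chunk)
        used += len(chunk)
    return selected


if __name__ == "__main__":
    text = " ".join(f"w{i}" for i in range(10))
    print(chunk_words(text, size=4, overlap=1))
    print(fit_context(["aaaa", "bbbb", "cccc"], max_chars=9))
```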

In practice, retrieval quality often matters more than model choice: a model cannot ground its answer in a document that was never retrieved.
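One way to check retrieval before reaching for a bigger model is to measure it directly. The sketch below computes hit rate at k: the fraction of test questions whose known-relevant document appears in the top k results. It assumes you have a small hand-labelled set of (question, relevant document ID) pairs and a `retrieve(question, k)` function returning document IDs; `fixed_retriever` and the sample IDs are hypothetical stand-ins.

```python
from typing import Callable, List, Sequence, Tuple


def hit_rate_at_k(
    eval_set: Sequence[Tuple[str, str]],
    retrieve: Callable[[str, int], List[str]],
    k: int = 3,
) -> float:
    """Fraction of questions whose relevant doc ID is among the top-k retrieved IDs."""
    if not eval_set:
        return 0.0
    hits = sum(1 for question, relevant_id in eval_set if relevant_id in retrieve(question, k))
    return hits / len(eval_set)


if __name__ == "__main__":
    def fixed_retriever(question: str, k: int) -> List[str]:
        # Hypothetical retriever that returns the same ranking for every question.
        return ["billing", "vpn", "onboarding"][:k]

    eval_set = [
        ("How do I get a refund?", "billing"),
        ("How do I connect to staging?", "vpn"),
    ]
    print(hit_rate_at_k(eval_set, fixed_retriever, k=1))  # 0.5
    print(hit_rate_at_k(eval_set, fixed_retriever, k=2))  # 1.0
```

If this number is low, changing chunking or retrieval settings is likely to help more than changing the model.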

Full Walkthrough and Demo

The complete setup (including data retrieval and response generation) is demonstrated in the video below.

If your AI chatbot produces unreliable answers, the issue is usually missing context—not the model itself. Retrieving the right data before generating a response is what makes RAG‑based systems reliable.