What Are Recursive Language Models?

Source: Dev.to

Recursive Language Models (RLMs)

Recursive Language Models (RLMs) are language models that call themselves.

That sounds strange at first—but the idea is simple: instead of answering a question in one go, an RLM breaks the task into smaller parts, then asks itself those sub‑questions. It builds the answer step by step, using structured function calls along the way.

How RLMs differ from standard LLMs

A typical model tries to predict the full response directly from a prompt. If the task has multiple steps, it must manage them all in a single stream of text. This can work for short tasks, but it often falls apart when the model needs to remember intermediate results or reuse the same logic multiple times.

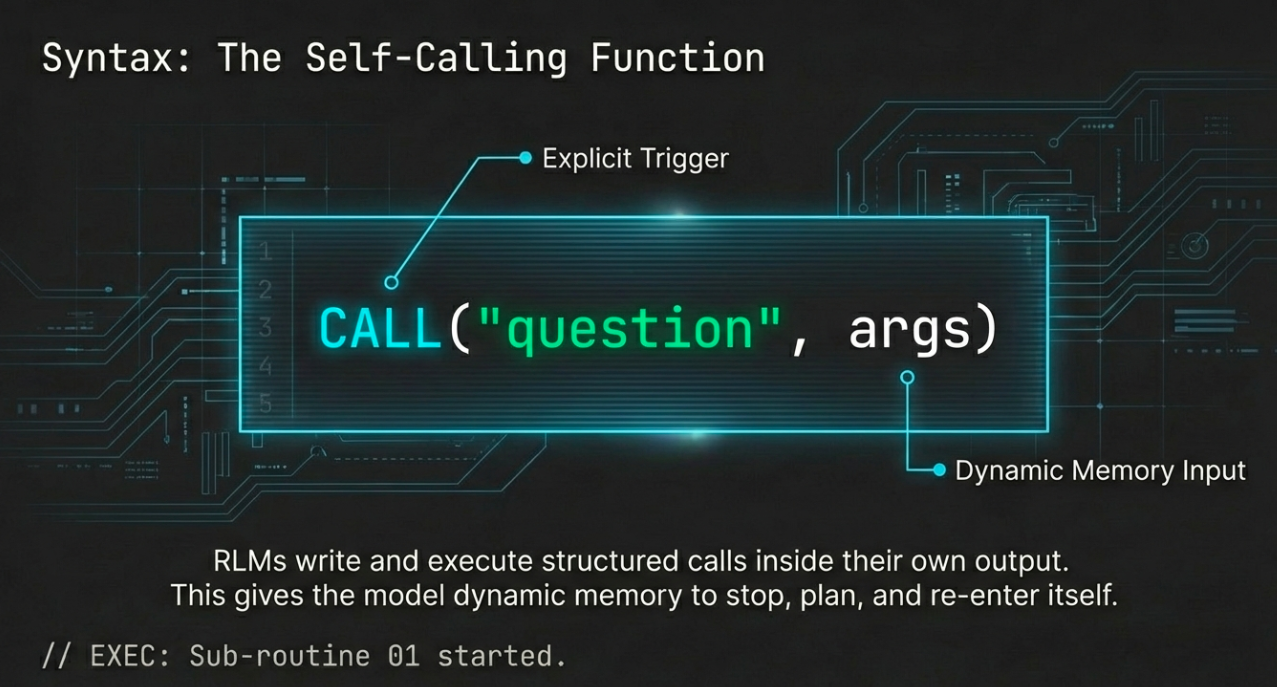

RLMs don’t try to do everything at once. They write and execute structured calls—like CALL("question", args)—inside their own output. The system sees this call, pauses the main response, evaluates the sub‑task, then inserts the result and continues. It’s a recursive loop: the model is both the planner and the executor.

This gives RLMs a kind of dynamic memory and control flow. They can:

- Stop, plan, and re‑enter themselves with new input

- Combine results from multiple sub‑tasks

- Reuse their own logic

That’s what makes them powerful—and fundamentally different from the static prompting methods most models use today.
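As a rough illustration of the "system sees this call" step, the sketch below detects one structured call inside partially generated text. The exact grammar is an assumption: the article only shows the general shape CALL("question", args), so the two-string form, the `CALL_PATTERN` regex, and the `find_call` helper are all hypothetical.

```python
import re
from typing import Optional, Tuple

# Assumed call syntax: CALL("name", "argument") with two quoted strings.
# The article does not define a grammar, so this pattern is illustrative only.
CALL_PATTERN = re.compile(r'CALL\("([^"]*)",\s*"([^"]*)"\)')


def find_call(text: str) -> Optional[Tuple[str, str, int, int]]:
    """Return (name, argument, start, end) for the first call, or None."""
    match = CALL_PATTERN.search(text)
    if match is None:
        return None
    return match.group(1), match.group(2), match.start(), match.end()


partial_output = 'The article says: CALL("Summarize", "section one text") and more.'
found = find_call(partial_output)
print(found)
```

The start and end offsets are what a runtime would need in order to replace the call with its result. A production system would likely use a stricter structured format than a regex over free text.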

Why standard LLMs struggle with multi‑step tasks

Language models are good at sounding smart. But when a task involves multiple steps that depend on each other, standard models often fail because they generate everything in a straight line. Two gaps stand out:

- No built‑in way to pause or modularize the reasoning process

- No explicit structure—just one long stream of text

Prompt engineering (e.g., “think step‑by‑step” or “show your work”) can improve results, but it doesn’t change how the model actually runs. The model still generates everything in one session, with no built‑in mechanism to modularize or reuse logic.

What RLMs bring to the table

Recursive Language Models treat complex tasks as programs. The model doesn’t just answer—it writes code‑like calls to itself. Those calls are evaluated in real time, and their results are folded back into the response.

Benefits

- Reuse their own logic

- Focus on one part of the task at a time

- Scale to deeper or more recursive problems

In other words, RLMs solve the structure problem. They bring composability and control into language generation—two things that most LLMs still lack.

The core RLM loop

Generate → Detect → Call → Repeat

- The model receives a prompt.

- It starts generating a response.

- When it hits a sub‑task, it emits a structured function call, e.g. CALL("Summarize", "text goes here").

- The system pauses, evaluates that call by feeding it back into the same model, and gets a result.

- The result is inserted, and the original response resumes.

This process can happen once—or dozens of times inside a single response.
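The loop above can be sketched in a few lines of Python. This is a minimal sketch under several assumptions, not the mechanism of any particular system: `run_rlm`, `toy_model`, the regex-based call syntax, and the `max_depth` guard are all hypothetical. It also simplifies one thing: the model here finishes its output before calls are resolved, whereas the article describes pausing mid-generation.

```python
import re
from typing import Callable

# Assumed call syntax: CALL("name", "argument"); illustrative only.
CALL_PATTERN = re.compile(r'CALL\("([^"]*)",\s*"([^"]*)"\)')


def run_rlm(model: Callable[[str], str], prompt: str,
            depth: int = 0, max_depth: int = 5) -> str:
    """Generate, detect calls, evaluate each with the same model, splice results in."""
    if depth > max_depth:
        raise RecursionError("maximum recursion depth exceeded")
    output = model(prompt)  # Generate
    while True:
        match = CALL_PATTERN.search(output)  # Detect
        if match is None:
            return output
        name, argument = match.groups()
        # Call: re-enter the same model with the sub-task as a new prompt.
        result = run_rlm(model, f"{name}: {argument}", depth + 1, max_depth)
        # Insert the result where the call was, then repeat.
        output = output[:match.start()] + result + output[match.end():]


def toy_model(prompt: str) -> str:
    """Stand-in for a real model: 'summarizes' by upper-casing the first word."""
    if prompt.startswith("Summarize: "):
        return prompt[len("Summarize: "):].split()[0].upper()
    return 'Summary: CALL("Summarize", "recursion lets models call themselves")'


answer = run_rlm(toy_model, "Explain the article")
print(answer)
```

The depth guard is a design choice rather than something the article specifies. Without it, a model that always emits another call would recurse without end.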

Concrete example

Suppose you ask an RLM to explain a complicated technical article. Instead of trying to summarize the whole thing at once, the model might:

- Break the article into sections.

- Issue recursive calls to summarize each section individually.

- Combine those pieces into a final answer.
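The three steps above might look like the following sketch. The `summarize` argument stands in for the recursive call back into the model. Splitting on blank lines and joining the partial summaries with spaces are assumptions made to keep the example small, and `first_sentence` is a toy summarizer, not a real one.

```python
from typing import Callable, List


def split_sections(article: str) -> List[str]:
    """Break the article into sections (assumed here: separated by blank lines)."""
    return [part.strip() for part in article.split("\n\n") if part.strip()]


def summarize_article(article: str, summarize: Callable[[str], str]) -> str:
    """Summarize each section with its own sub-call, then combine the pieces."""
    partial_summaries = [summarize(section) for section in split_sections(article)]
    return " ".join(partial_summaries)


def first_sentence(text: str) -> str:
    """Toy summarizer standing in for a model call: keep the first sentence."""
    return text.split(".")[0].strip() + "."


article = (
    "Models can call themselves. This is recursion.\n\n"
    "Each call is explicit. Traces are easy to read."
)
final_summary = summarize_article(article, first_sentence)
print(final_summary)
```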

What’s actually new?

- The model isn’t just generating text; it’s controlling execution.

- Each function call is explicit and machine‑readable, not hidden in plain text.

- The model learns when to delegate subtasks to itself, not just what to say.

This design introduces modular reasoning—closer to programming than prompting. It enables RLMs to solve longer, deeper, and more compositional tasks than traditional LLMs.

RLMs vs. Reasoning Models

It’s easy to confuse Recursive Language Models with models designed for reasoning. Both aim to solve harder, multi‑step problems, but they take very different paths.

| Aspect | Reasoning Models | Recursive Language Models |
|---|---|---|
| Goal | “Think better” within a fixed response | Change how the model runs |
| Mechanism | Prompt tricks (“let’s think step‑by‑step”), fine‑tuning, architectural tweaks | Structured function calls, real control flow, recursion |
| Execution | Generates full output in one go (flat, linear) | Can pause, emit a sub‑call, re‑enter, and build results incrementally |
| Transparency | Hard to inspect internal steps | Each recursive call is explicit; you can see the full tree of operations |
| Analogy | Writing a better essay | Writing and running a program |

Reasoning models try to write better essays. RLMs write and run programs.

Because RLMs expose every sub‑call, they are easier to inspect and debug. You can see exactly what the model asked, what it answered, and how it combined the results—a level of transparency that is rare in typical LLM workflows.
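One way to picture that transparency is a small tree that records what was asked and what came back at each call. The `CallNode` structure and `render` helper below are hypothetical names, and the questions and answers are made-up sample data rather than output from a real model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CallNode:
    """One recorded call: the question asked, the answer, and any sub-calls."""
    question: str
    answer: str = ""
    children: List["CallNode"] = field(default_factory=list)


def render(node: CallNode, indent: int = 0) -> List[str]:
    """Flatten the call tree into indented lines, one per call."""
    lines = [f'{"  " * indent}{node.question} -> {node.answer}']
    for child in node.children:
        lines.extend(render(child, indent + 1))
    return lines


root = CallNode("Explain article", "Two-part summary")
root.children.append(CallNode("Summarize section 1", "RLMs call themselves"))
root.children.append(CallNode("Summarize section 2", "Calls are explicit"))
print("\n".join(render(root)))
```

Reading the rendered tree top to bottom shows the question, the answer, and how sub-results nest under the call that requested them.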

The Bigger Picture

- Reasoning models stretch the limits of static prompting.

- Recursive Language Models redefine what a model can do at runtime.

Recursion isn’t just a technical upgrade—it’s a shift in what language models are capable of. With recursion, models don’t have to guess the whole answer in one pass. They can build it piece by piece, reusing their own capabilities as needed. This unlocks new behaviors that standard models struggle with.

Recursive Language Models (RLMs) – Why They Matter

How recursion helps different tasks

- Logic puzzles – Instead of brute‑forcing a full solution, an RLM can write out each rule, evaluate sub‑cases, and combine the results.

- Math word problems – The model breaks a complex problem into steps, solves each one recursively, and verifies intermediate answers.

- Code generation – RLMs can draft a function, then call themselves to write test cases, fix bugs, or generate helper functions.

- Proof generation – For theorem proving, recursion lets the model build a proof tree, checking smaller lemmas along the way.

Experiment results: In the paper’s experiments, RLMs outperformed non‑recursive baselines on multi‑step benchmarks and were also more efficient. Recursive calls reduced total token usage because the model could reuse logic instead of repeating it.

Beyond accuracy – efficiency and composability

- Recursion isn’t just about higher accuracy; it also improves efficiency and composability.

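- Rather than handling a problem in one linear pass, an RLM can split it into smaller pieces and reuse solutions. When each call splits its input into a fixed number of parts, the recursion depth grows only logarithmically with problem size, although the total work across all calls still grows with the input.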

- This makes them a better fit for tasks where reasoning depth grows quickly – exactly the kind of problems LLMs are beginning to face in real‑world applications.
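To make the scaling point concrete, the sketch below counts calls and nesting depth for a divide-in-half recursion. It uses a plain sum as a stand-in task, which is an assumption for illustration; `recursive_sum` and `measure` are hypothetical helpers and nothing here measures a real model.

```python
from typing import Dict, List


def recursive_sum(items: List[int], stats: Dict[str, int], depth: int = 0) -> int:
    """Sum a list by halving it, recording call count and deepest nesting level."""
    stats["calls"] += 1
    stats["max_depth"] = max(stats["max_depth"], depth)
    if len(items) <= 1:
        return sum(items)
    mid = len(items) // 2
    left = recursive_sum(items[:mid], stats, depth + 1)
    right = recursive_sum(items[mid:], stats, depth + 1)
    return left + right


def measure(n: int) -> Dict[str, int]:
    """Run the recursion on n items and return the collected statistics."""
    stats = {"calls": 0, "max_depth": 0}
    recursive_sum(list(range(n)), stats)
    return stats


small = measure(8)
large = measure(1024)
print(small)  # {'calls': 15, 'max_depth': 3}
print(large)  # {'calls': 2047, 'max_depth': 10}
```

Going from 8 to 1024 items raises the depth from 3 to 10 while the number of calls grows roughly in proportion to the input. Under this halving assumption, it is the depth of any single chain of calls that scales logarithmically, not the total work.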

The current LLM landscape

Most language models still follow a simple pattern:

Input → Output

This works for quick answers or lightweight tasks, but it falls short for anything complex.

Developers today build agents, chains, and tool‑using systems on top of LLMs. These wrappers simulate structure, yet they’re often fragile because they rely on:

- Prompt hacking

- Regex parsing

- External orchestration

Why RLMs offer a cleaner path

- Fewer moving parts – No need for external chains or custom routing logic; the model decides when and how to branch.

- Greater transparency – Each recursive call is visible and traceable, allowing step‑by‑step auditing.

- Better generalization – Once trained to use recursion, the model can apply it flexibly across domains (math, code, reasoning, planning, etc.).

Looking ahead

- RLMs are still early, but they hint at a broader shift: treating models not just as generators, but as runtime environments.

- This opens the door to future systems where models can plan, act, and adapt on their own, with clear structure behind every step.

If the last few years were about making LLMs sound smart, the next few might be about making them think with structure.

The core idea

Recursive Language Models aren’t merely a tweak to existing LLMs; they represent a shift in how models operate.

- Instead of treating every task as a one‑shot prediction, RLMs break problems into parts, solve them recursively, and combine the results.

- This gives them something most language models still lack: structure.

Why structure matters

- Makes models more reliable on complex tasks.

- Makes reasoning easier to follow.

- Enables new capabilities—planning, verification, adaptation—without needing complex external systems.

Bottom line

RLMs don’t just produce better answers; they enable better models—fluent, recursive, modular, and built to handle depth.