NVIDIA DGX SuperPOD Sets the Stage for Rubin-Based Systems

Source: NVIDIA AI Blog

Rubin Platform Overview

At CES in Las Vegas, NVIDIA introduced the Rubin platform, a family of six new chips designed to deliver a single, powerful AI supercomputer. The platform is engineered to accelerate:

- Agentic AI

- Mixture‑of‑Experts (MoE) models

- Long‑context reasoning

Core Components

| Component | Description |
|---|---|
| NVIDIA Vera CPU | High‑performance CPU for AI workloads. |
| Rubin GPU | Next‑gen GPU optimized for training and inference. |
| NVLink 6 Switch | High‑bandwidth interconnect for chip‑to‑chip communication. |
| ConnectX‑9 SuperNIC | Advanced networking interface. |
| BlueField‑4 DPU | Data‑processing unit for offloading and security. |
| Spectrum‑6 Ethernet Switch | Scalable Ethernet fabric for data‑center connectivity. |

These six chips are tightly integrated through an advanced codesign approach, which:

- Accelerates model training

- Reduces the cost of inference token generation

DGX SuperPOD

The DGX SuperPOD remains the foundational design for deploying Rubin‑based systems across enterprise and research environments.

NVIDIA DGX Platform

The NVIDIA DGX platform delivers a complete technology stack—compute, networking, and software—as a single, cohesive system. This eliminates the burden of infrastructure integration, letting teams focus on:

- AI innovation

- Business outcomes

“Rubin arrives at exactly the right moment, as AI computing demand for both training and inference is going through the roof.” — Jensen Huang, Founder & CEO, NVIDIA


New Platform for the AI Industrial Revolution

The Rubin platform powers the latest DGX systems and introduces five major technology advancements that deliver a step‑function increase in intelligence and efficiency.

| # | Advancement | Key Specs & Benefits |
|---|---|---|
| 1 | Sixth‑Generation NVIDIA NVLink | • 3.6 TB/s per GPU • 260 TB/s per Vera Rubin NVL72 rack • Enables massive MoE and long‑context workloads |
| 2 | NVIDIA Vera CPU | • 88 custom Olympus cores (Arm v9.2 compatible) • Ultra‑fast NVLink‑C2C connectivity • Provides industry‑leading, efficient AI‑factory compute |
| 3 | NVIDIA Rubin GPU | • 50 PFLOPS of NVFP4 compute for AI inference • Third‑generation Transformer Engine with hardware‑accelerated compression |
| 4 | Third‑Generation NVIDIA Confidential Computing | • First rack‑scale platform delivering end‑to‑end confidentiality across CPU, GPU, and NVLink domains |
| 5 | Second‑Generation RAS Engine | • Real‑time health monitoring, fault tolerance, and proactive maintenance across GPU, CPU, and NVLink • Modular, cable‑free trays enable 3× faster servicing |
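The Rubin GPU row quotes compute in NVFP4, NVIDIA's 4‑bit floating‑point format. As we understand it, NVFP4 stores each value as a 4‑bit E2M1 number and shares one scale factor across each block of 16 values (the block scale is stored in FP8 E4M3, with an additional per‑tensor FP32 scale). The sketch below is a simplified illustration under those assumptions: it keeps the block scale as an ordinary Python float and rounds to the nearest E2M1 magnitude. It is not NVIDIA's implementation.

```python
# Simplified NVFP4-style block quantization (illustrative sketch, not NVIDIA's code).
# Assumptions: E2M1 magnitudes, 16-element blocks, and a per-block scale kept as a
# plain float (real NVFP4 encodes it as FP8 E4M3 plus a per-tensor FP32 scale).

E2M1_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)  # representable magnitudes
BLOCK = 16

def quantize_block(values):
    """Return (scale, codes): each code is a signed E2M1 magnitude."""
    amax = max(abs(v) for v in values)
    # Map the block's largest magnitude onto 6.0, the largest E2M1 value.
    scale = amax / 6.0 if amax > 0 else 1.0
    codes = []
    for v in values:
        # Round to the nearest grid point; ties resolve to the smaller magnitude here.
        mag = min(E2M1_GRID, key=lambda g: abs(abs(v) / scale - g))
        codes.append(mag if v >= 0 else -mag)
    return scale, codes

def dequantize_block(scale, codes):
    """Reconstruct approximate values from a block's scale and codes."""
    return [c * scale for c in codes]

def quantize(tensor):
    """Split a flat list into 16-element blocks and quantize each independently."""
    return [quantize_block(tensor[i:i + BLOCK]) for i in range(0, len(tensor), BLOCK)]
```

For example, a block whose largest magnitude is 12.0 gets a scale of 2.0, so 12.0 is stored as the code 6.0 and 1.0 as the code 0.5. Per‑block scaling lets small and large values in different parts of a tensor keep useful precision despite only 4 bits per element.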

Impact: These innovations together achieve up to a 10× reduction in inference token cost compared with the previous generation—a critical milestone as AI models continue to grow in size, context, and reasoning depth.
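A cost‑per‑token claim is easiest to reason about as a ratio. Under a simple model (our assumption, not NVIDIA's published methodology), serving cost per token is the system's cost per unit time divided by its token throughput, so a 10× reduction can come from higher throughput, a lower hourly cost, or a combination of both. The dollar and throughput figures below are hypothetical.

```python
def cost_per_million_tokens(system_cost_per_hour: float, tokens_per_second: float) -> float:
    """Dollars per one million generated tokens under a flat hourly cost model."""
    tokens_per_hour = tokens_per_second * 3600
    return system_cost_per_hour * 1_000_000 / tokens_per_hour

# Hypothetical figures: identical hourly cost, 10x the token throughput.
previous_gen = cost_per_million_tokens(system_cost_per_hour=360.0, tokens_per_second=100_000)
next_gen = cost_per_million_tokens(system_cost_per_hour=360.0, tokens_per_second=1_000_000)
reduction = previous_gen / next_gen  # 10x cheaper per token in this scenario
```

Holding cost per hour fixed isolates the throughput effect; in practice both terms change between generations, and only their ratio matters for cost per token.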

DGX SuperPOD: The Blueprint for NVIDIA Rubin Scale‑Out

The Rubin‑based DGX SuperPOD combines the latest NVIDIA hardware and software to deliver a unified, rack‑scale AI engine.

Core Components

| Component | Role |
|---|---|
| NVIDIA DGX Vera Rubin NVL72 or DGX Rubin NVL8 systems | Compute nodes (Rubin GPUs, CPUs, memory) |
| NVIDIA BlueField‑4 DPUs | Secure, software‑defined infrastructure |
| NVIDIA Inference Context Memory Storage Platform | Low‑latency inference data store |
| NVIDIA ConnectX‑9 SuperNICs | High‑performance networking |
| NVIDIA Quantum‑X800 InfiniBand & NVIDIA Spectrum‑X Ethernet | Fabric for ultra‑fast inter‑node communication |
| NVIDIA Mission Control | Automated AI‑infrastructure orchestration & operations |

DGX SuperPOD with DGX Vera Rubin NVL72

- Configuration: 8 × DGX Vera Rubin NVL72

- GPU count: 576 Rubin GPUs (72 GPUs per node)

- Performance: 28.8 exaflops FP4

- Memory: 600 TB of fast memory (75 TB per node)

- CPU/DPU mix: 36 Vera CPUs + 18 BlueField‑4 DPUs per node

- Interconnect: 260 TB/s aggregate NVLink throughput → eliminates model partitioning, enabling the rack to act as a single coherent AI engine

Key benefit: Unified memory‑compute space across the entire rack, delivering unprecedented throughput for large‑scale training and inference workloads.
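The headline numbers for this configuration are internally consistent, and the arithmetic can be checked directly from the per‑GPU and per‑node figures quoted in this article. The sketch below assumes the 28.8‑exaflop figure is simply GPU count times the 50 PFLOPS per‑GPU NVFP4 number, and that the 260 TB/s rack figure is 72 GPUs times 3.6 TB/s; the multiplication is ours, not an NVIDIA formula.

```python
# Consistency check of the DGX SuperPOD (DGX Vera Rubin NVL72) figures quoted above.
# Inputs come from this article; the derivation (plain multiplication) is an assumption.

def nvl72_superpod_totals(systems: int = 8) -> dict:
    gpus_per_system = 72
    nvfp4_pflops_per_gpu = 50.0       # Rubin GPU figure from the advancements table
    nvlink_tbps_per_gpu = 3.6         # sixth-generation NVLink, per GPU
    fast_memory_tb_per_system = 75.0

    gpus = systems * gpus_per_system
    return {
        "gpus": gpus,                                                    # 8 x 72 = 576
        "fp4_exaflops": gpus * nvfp4_pflops_per_gpu / 1000,              # 576 x 50 PF = 28.8 EF
        "fast_memory_tb": systems * fast_memory_tb_per_system,           # 8 x 75 TB = 600 TB
        "nvlink_tbps_per_rack": gpus_per_system * nvlink_tbps_per_gpu,  # about 259.2, quoted as 260
    }

totals = nvl72_superpod_totals()
```

The NVLink figure is per rack, not per SuperPOD: NVLink connects the 72 GPUs inside each NVL72 system, while the eight racks communicate over the InfiniBand or Ethernet fabric.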

DGX SuperPOD with DGX Rubin NVL8

- Configuration: 64 × DGX Rubin NVL8

- GPU count: 512 Rubin GPUs (8 GPUs per node)

- Performance per node: 5.5× NVFP4 FLOPS vs. NVIDIA Blackwell systems (thanks to 8 Rubin GPUs + 6th‑gen NVLink)

- Form factor: Liquid‑cooled, x86‑CPU‑based chassis – an efficient on‑ramp for any AI project from development to deployment

Key benefit: Scalable, energy‑efficient entry point to the Rubin era for organizations of all sizes.
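The NVL8 configuration reaches a similar GPU count through many smaller nodes. The article gives no aggregate NVFP4 number for it, so the sketch below computes the GPU count from the quoted figures and leaves the per‑GPU throughput as an input. The 50 PFLOPS value used in the example is borrowed from the Rubin GPU row above and is an assumption about the NVL8 parts, not a published NVL8 specification.

```python
# GPU-count arithmetic for the DGX SuperPOD (DGX Rubin NVL8) configuration.
# ASSUMPTION: the per-GPU NVFP4 figure is supplied by the reader; the article
# does not quote an aggregate NVFP4 number for this configuration.

def superpod_gpus(systems: int, gpus_per_system: int) -> int:
    """Total GPUs in a SuperPOD built from identical systems."""
    return systems * gpus_per_system

def peak_nvfp4_exaflops(gpus: int, pflops_per_gpu: float) -> float:
    """Theoretical peak: GPU count times per-GPU petaflops, converted to exaflops."""
    return gpus * pflops_per_gpu / 1000

nvl8_gpus = superpod_gpus(64, 8)     # 512, as quoted above
nvl72_gpus = superpod_gpus(8, 72)    # 576, for comparison
# Hypothetical: reuse the 50 PFLOPS per-GPU figure from the Rubin GPU table row.
nvl8_peak_if_50pf = peak_nvfp4_exaflops(nvl8_gpus, 50.0)
```

The design trade‑off is granularity: 64 eight‑GPU nodes give finer‑grained scheduling and an x86 host environment, while eight NVL72 racks keep 72 GPUs inside each NVLink domain.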

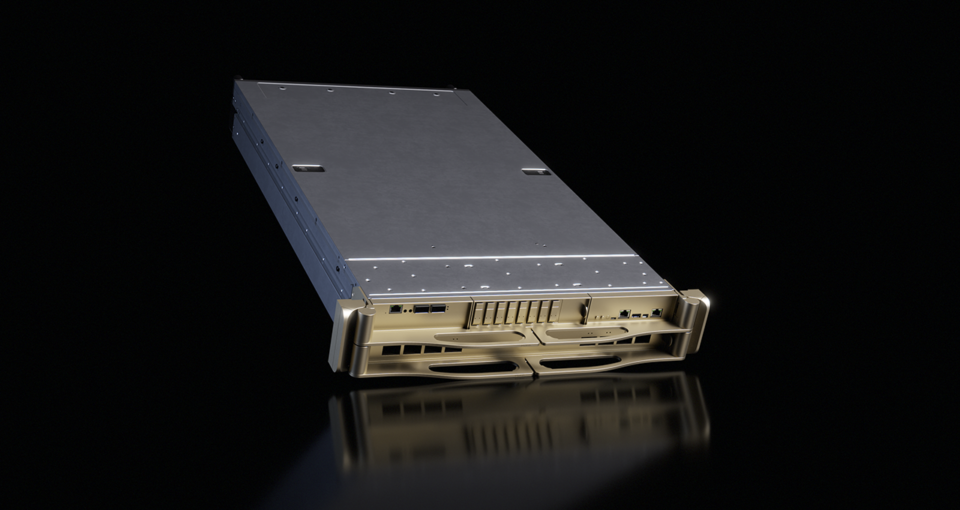

Visual Overview

All specifications are based on NVIDIA’s publicly released data as of January 2026.

Next‑Generation Networking for AI Factories

The Rubin platform redefines the data center as a high‑performance AI factory with revolutionary networking built around:

- NVIDIA Spectrum‑6 Ethernet switches

- NVIDIA Quantum‑X800 InfiniBand switches

- NVIDIA BlueField‑4 DPUs

- NVIDIA ConnectX‑9 SuperNICs

These components are integrated into the NVIDIA DGX SuperPOD to eliminate traditional bottlenecks in scale, congestion, and reliability while sustaining the world’s largest AI workloads.

Optimized Connectivity for Massive‑Scale Clusters

The 800 Gb/s end‑to‑end networking suite offers two purpose‑built paths for AI infrastructure, ensuring peak efficiency whether you use InfiniBand or Ethernet.

| Path | Key Features | Primary Use |
|---|---|---|
| NVIDIA Quantum‑X800 InfiniBand | • Lowest‑latency, highest‑performance networking for AI clusters • Scalable Hierarchical Aggregation and Reduction Protocol (SHARP v4), which offloads collective operations to the fabric • Adaptive routing to spread traffic across available paths | Dedicated AI clusters that demand ultra‑low latency |
| NVIDIA Spectrum‑X Ethernet | • Built on Spectrum‑6 switches and ConnectX‑9 SuperNICs • Predictable, high‑performance scale‑out and scale‑across connectivity • Optimized for “east‑west” traffic patterns typical of AI workloads | AI factories that rely on standard Ethernet protocols |
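Offloading collectives to the fabric matters because allreduce dominates communication in data‑parallel training. The sketch below uses a common simplified bandwidth model (our assumption; it ignores latency, pipelining, protocol overhead, and switch radix, and does not describe SHARP v4 internals): in a ring allreduce each endpoint transmits about 2(N−1)/N times the buffer size, whereas with in‑network aggregation each endpoint sends its buffer once and the switches perform the summation.

```python
def ring_allreduce_bytes_sent(message_bytes: float, ranks: int) -> float:
    """Bytes each rank transmits in a bandwidth-optimal ring allreduce.

    Reduce-scatter and all-gather each move (ranks - 1) / ranks of the buffer.
    """
    if ranks < 2:
        return 0.0
    return 2 * (ranks - 1) / ranks * message_bytes

def in_network_allreduce_bytes_sent(message_bytes: float, ranks: int) -> float:
    """Bytes each rank transmits when switches perform the reduction (SHARP-style model).

    Each rank sends its buffer up the aggregation tree once and receives the
    reduced result; the summing happens in the fabric rather than on endpoints.
    """
    if ranks < 2:
        return 0.0
    return float(message_bytes)

# Traffic ratio for a 576-GPU job: approaches 2x as the rank count grows.
ratio_576 = ring_allreduce_bytes_sent(1.0, 576) / in_network_allreduce_bytes_sent(1.0, 576)
```

Under this model, in‑network reduction roughly halves the bytes each endpoint must inject for large jobs, and it also replaces the ring's 2(N−1) sequential steps with a shallow reduction tree, which is where much of the latency benefit comes from.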

Engineering the Gigawatt AI Factory

These innovations are the result of extreme codesign with the Rubin platform. By mastering congestion control and performance isolation, NVIDIA is paving the way for the next wave of gigawatt AI factories. This holistic approach ensures that as AI models grow in complexity, the networking fabric remains a catalyst for speed rather than a constraint.

NVIDIA Software Advances AI Factory Operations and Deployments

NVIDIA Mission Control

What it is – AI‑driven data‑center operation and orchestration software for NVIDIA Blackwell‑based DGX systems, which will also be available for Rubin‑based DGX systems.

Key capabilities

- Automates deployment configuration and integration with facility systems.

- Manages clusters, workloads, and resource scheduling.

Infrastructure benefits

- Enhanced control of cooling and power events for NVIDIA Rubin.

- Improved resiliency and faster response to incidents (e.g., rapid leak detection).

- Access to NVIDIA’s latest efficiency innovations.

- Autonomous recovery to maximize AI‑factory productivity.
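To make "autonomous recovery" concrete, the sketch below shows one way an operations layer might map facility and hardware health events to ordered remediation steps. Everything in it is hypothetical: the event kinds, the `Action` names, and the `plan_response` policy are illustrative inventions and do not describe NVIDIA Mission Control's actual interfaces or behavior.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List

class Action(Enum):
    # Hypothetical remediation steps; not Mission Control APIs.
    ISOLATE_TRAY = "isolate_tray"
    DRAIN_AND_RESCHEDULE = "drain_and_reschedule"
    CAP_POWER = "cap_power"
    NOTIFY_OPERATOR = "notify_operator"

@dataclass(frozen=True)
class HealthEvent:
    kind: str       # hypothetical categories: "leak", "gpu_fault", "thermal", "power"
    tray: int       # compute tray that reported the event
    critical: bool

def plan_response(event: HealthEvent) -> List[Action]:
    """Map a health event to an ordered list of remediation actions (illustrative policy)."""
    if event.kind == "leak":
        # Liquid-cooling leak: take the tray out of service first, then move its work.
        return [Action.ISOLATE_TRAY, Action.DRAIN_AND_RESCHEDULE, Action.NOTIFY_OPERATOR]
    if event.kind == "gpu_fault":
        if event.critical:
            # Move jobs off the tray before isolating it, so work can resume elsewhere.
            return [Action.DRAIN_AND_RESCHEDULE, Action.ISOLATE_TRAY, Action.NOTIFY_OPERATOR]
        return [Action.NOTIFY_OPERATOR]
    if event.kind in ("thermal", "power"):
        actions = [Action.CAP_POWER]
        if event.critical:
            actions.append(Action.DRAIN_AND_RESCHEDULE)
        return actions
    # Unknown events are escalated to a human rather than ignored.
    return [Action.NOTIFY_OPERATOR]
```

Keeping the policy as a pure function from event to actions makes it easy to test and audit separately from the code that actually executes the actions.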


NVIDIA AI Enterprise Platform

Supported on – All NVIDIA DGX systems.

Includes

- NVIDIA NIM – inference microservices that help developers deploy generative‑AI models.

- Pre‑trained open models such as the NVIDIA Nemotron‑3 family.

- Associated data, libraries, and tools for rapid AI development.
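NIM microservices for large language models expose an OpenAI‑compatible HTTP API, so a client can talk to one with plain HTTP. The sketch below builds, but does not send, a chat‑completions request using only the Python standard library. The base URL, port 8000, and model name are placeholders (assumptions); check the NIM documentation for the endpoint and model identifier of the microservice you deploy.

```python
import json
import urllib.request

def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible /v1/chat/completions endpoint."""
    payload = {
        "model": model,  # placeholder: use the model name your NIM instance serves
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

# Hypothetical local endpoint and model name.
req = build_chat_request("http://localhost:8000/", "example-model", "Summarize NVLink in one sentence.")
# Sending it requires a running endpoint:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Because the interface follows the OpenAI convention, existing client code can usually be pointed at a NIM endpoint by changing only the base URL and model name.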



By integrating intelligent, end‑to‑end software with its DGX hardware, NVIDIA enables enterprises to run AI factories that are more efficient, resilient, and easier to manage.

DGX SuperPOD: The Road Ahead for Industrial AI

DGX SuperPOD has long served as the blueprint for large‑scale AI infrastructure. With the arrival of the Rubin platform, it becomes the launchpad for a new generation of AI factories: systems designed to reason across thousands of steps and deliver intelligence at dramatically lower cost, helping organizations build the next wave of frontier models, multimodal systems, and agentic AI applications.

Availability

NVIDIA DGX SuperPOD with DGX Vera Rubin NVL72 or DGX Rubin NVL8 systems will be available in the second half of 2026.
