LLM Year in Review

Source: Hacker News

19 Dec, 2025

1. Reinforcement Learning from Verifiable Rewards (RLVR)

At the start of 2025, the LLM production stack in all labs looked something like this:

- Pretraining (GPT‑2/3 of ~2020)

- Supervised Finetuning (InstructGPT ~2022)

- Reinforcement Learning from Human Feedback (RLHF ~2022)

This was the stable and proven recipe for training a production‑grade LLM for a while. In 2025, Reinforcement Learning from Verifiable Rewards (RLVR) emerged as the de‑facto new major stage to add to this mix. By training LLMs against automatically verifiable rewards across a number of environments (e.g., math/code puzzles), the models spontaneously develop strategies that look like “reasoning” to humans—they learn to break down problem solving into intermediate calculations and to iterate back‑and‑forth to figure things out (see the DeepSeek R1 paper for examples).

These strategies would have been very difficult to achieve in the previous paradigms because it wasn’t clear what the optimal reasoning traces and recoveries would look like for the LLM; the model has to discover what works for it via optimization against rewards.

Unlike the SFT and RLHF stages, which are relatively thin/short (minor finetunes computationally), RLVR involves training against objective (non‑gameable) reward functions, allowing for much longer optimization. Running RLVR turned out to offer high capability per dollar, so it gobbled up compute originally intended for pretraining. Consequently, most of the capability progress of 2025 was defined by labs chewing through the overhang of this new stage; we saw similarly sized LLMs but with far longer RL runs.
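To make “automatically verifiable reward” concrete, here is a minimal Python sketch, not any lab’s actual pipeline. It assumes completions put their final answer inside a `\boxed{...}` span (a common math-benchmark convention, used here as an assumption) and scores them with a plain exact-match check. The group-relative advantage at the end is a simplified version of the normalization used by GRPO, the RL algorithm described in the DeepSeek R1 paper; all helper names are hypothetical.

```python
import re
import statistics
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} span (assumed answer format)."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    return matches[-1].strip() if matches else None


def math_reward(completion: str, reference: str) -> float:
    """Binary verifiable reward: 1.0 only if the extracted answer matches exactly."""
    answer = extract_final_answer(completion)
    return 1.0 if answer is not None and answer == reference.strip() else 0.0


def group_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: normalize rewards within a group sampled for one prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Several sampled completions for the same prompt whose reference answer is "42".
samples = [
    "2 + 40 = 42, so the answer is \\boxed{42}",
    "I think it is \\boxed{41}",
    "No idea.",
    "\\boxed{42}",
]
rewards = [math_reward(s, "42") for s in samples]  # [1.0, 0.0, 0.0, 1.0]
advantages = group_advantages(rewards)  # correct samples get positive advantage
```

Exact string matching is deliberately crude here; real verifiers typically normalize answers (or, for code, run unit tests), but the key property is the same: the reward is computed by a program, not by a human rater.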

A unique knob (and associated scaling law) also appeared: capability as a function of test‑time compute can be controlled by generating longer reasoning traces and increasing “thinking time.” OpenAI o1 (late 2024) was the first demonstration of an RLVR model, but the o3 release (early 2025) was the obvious inflection point where the difference became intuitively palpable.

2. Ghosts vs. Animals / Jagged Intelligence

2025 is where I (and, I think, the rest of the industry) first started to internalize the “shape” of LLM intelligence in a more intuitive sense. We’re not “evolving/growing animals”; we are “summoning ghosts.” Everything about the LLM stack is different (neural architecture, training data, training algorithms, and especially optimization pressure), so it should be no surprise that we are getting very different entities in the intelligence space—entities that are inappropriate to think about through an animal lens.

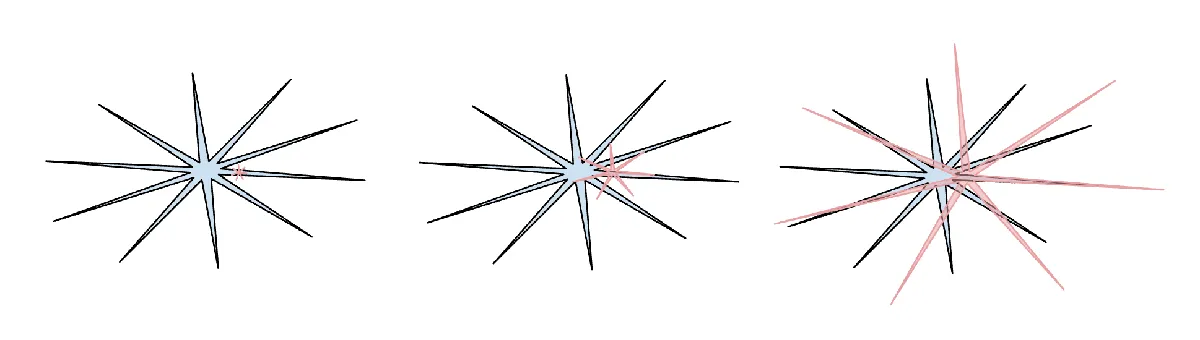

Supervision‑wise, human neural nets are optimized for survival of a tribe in the jungle, but LLM neural nets are optimized for imitating humanity’s text, collecting rewards in math puzzles, and getting that up‑vote from a human on the LM Arena. As verifiable domains allow for RLVR, LLMs “spike” in capability in the vicinity of these domains and overall display amusingly jagged performance characteristics—they are at the same time a genius polymath and a confused, cognitively challenged grade‑schooler, seconds away from being tricked by a jailbreak to exfiltrate your data.

Related to all this is my general apathy and loss of trust in benchmarks in 2025. The core issue is that benchmarks are, by construction, verifiable environments and are therefore immediately susceptible to RLVR—and weaker forms of it—via synthetic data generation. In the typical bench‑maxxing process, teams in LLM labs inevitably construct environments adjacent to little pockets of the embedding space occupied by benchmarks and grow “jaggies” to cover them. Training on the test set has become a new art form.
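As a rough illustration of what “adjacent to little pockets of the embedding space occupied by benchmarks” could mean operationally, the sketch below flags training items whose embedding lies within a cosine-similarity threshold of any benchmark item. The vectors are toy stand-ins for the output of some embedding model, and the 0.95 threshold is an arbitrary assumption; this is a hypothetical audit, not a description of how any lab works.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def near_benchmark(train_vecs, bench_vecs, threshold: float = 0.95) -> list[int]:
    """Indices of training items whose closest benchmark item is at least `threshold` similar."""
    return [
        i
        for i, t in enumerate(train_vecs)
        if max(cosine(t, b) for b in bench_vecs) >= threshold
    ]


# Toy 3-d "embeddings" (hypothetical data).
bench = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
train = [[0.99, 0.1, 0.0], [0.0, 0.0, 1.0], [0.0, 0.98, 0.05]]
flagged = near_benchmark(train, bench)  # [0, 2]: items 0 and 2 hug a benchmark item
```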

What does it look like to crush all the benchmarks but still not get AGI?

I have written a lot more on the topic of this section here:

3. Cursor / New Layer of LLM Apps

What I find most notable about Cursor (other than its meteoric rise this year) is that it convincingly revealed a new layer of an “LLM app”—people started to talk about “Cursor for X.” As I highlighted in my Y Combinator talk this year (transcript and video), LLM apps like Cursor bundle and orchestrate LLM calls for specific verticals:

- They handle the context engineering.

- They orchestrate multiple LLM calls under the hood, strung into increasingly complex DAGs, carefully balancing performance and cost trade‑offs.

- They provide an application‑specific GUI for the human in the loop.

- They offer an “autonomy slider.”
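A minimal sketch of the orchestration idea, assuming a hypothetical `fake_llm` stub in place of a real API and made-up per-call prices for a “small” and a “large” model tier. The calls form a DAG executed in dependency order (via Python’s standard `graphlib`), each prompt is assembled from its upstream outputs (a crude stand-in for context engineering), and the running cost shows where the performance/cost trade-off bites.

```python
from graphlib import TopologicalSorter

# Hypothetical per-call prices for two model tiers (illustrative, not real pricing).
PRICE_PER_CALL = {"small": 1, "large": 10}


def fake_llm(model: str, prompt: str) -> str:
    """Stand-in for a real LLM API call: just tags the prompt with the model tier."""
    return f"[{model}] {prompt}"


def run_dag(nodes, deps):
    """nodes: name -> (model tier, prompt template with optional {context});
    deps: name -> set of upstream node names. Returns (outputs, total cost)."""
    outputs, cost = {}, 0
    for name in TopologicalSorter(deps).static_order():
        model, template = nodes[name]
        # Feed upstream outputs into this call's prompt.
        context = " | ".join(outputs[d] for d in sorted(deps.get(name, ())))
        outputs[name] = fake_llm(model, template.format(context=context))
        cost += PRICE_PER_CALL[model]
    return outputs, cost


# Cheap model for retrieval/summarization, expensive model for planning/editing.
nodes = {
    "retrieve": ("small", "Pick relevant files for: fix the failing test"),
    "summarize": ("small", "Summarize: {context}"),
    "plan": ("large", "Plan an edit given: {context}"),
    "edit": ("large", "Write the diff for: {context}"),
}
deps = {
    "retrieve": set(),
    "summarize": {"retrieve"},
    "plan": {"summarize"},
    "edit": {"plan", "summarize"},
}
outputs, cost = run_dag(nodes, deps)  # cost == 22
```

Routing the cheap steps to the small tier is the kind of trade-off an app makes invisibly on the user’s behalf.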

Much of the 2025 chatter has been about how “thick” this new app layer is. Will the LLM labs capture all applications, or are there green pastures for LLM apps? Personally I suspect that LLM labs will tend to graduate the generally capable “college student,” while LLM apps will organize, finetune, and actually animate teams of them into deployed professionals in specific verticals.

4. Claude Code / AI That Lives on Your Computer

Claude Code (CC) was the first convincing demonstration of an LLM Agent—a system that, in a loopy way, strings together tool use and reasoning for extended problem solving.
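The “loopy” structure can be sketched in a few lines: at each step the model either requests a tool call or returns a final answer, and tool results are appended to the history until it stops or runs out of steps. Everything here is hypothetical: `scripted_model` stands in for a real LLM, `list_files` is a toy tool that touches no filesystem, and this is not how Claude Code is actually implemented.

```python
import json
from typing import Callable


def list_files(path: str) -> str:
    """Toy tool: returns a fixed, pretend directory listing (ignores `path`)."""
    return json.dumps(["main.py", "test_main.py"])


TOOLS: dict[str, Callable[..., str]] = {"list_files": list_files}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for an LLM: requests one tool call, then answers from its result."""
    if not any(m["role"] == "tool" for m in history):
        return {"tool": "list_files", "args": {"path": "."}}
    files = json.loads(history[-1]["content"])
    return {"answer": f"Found {len(files)} files; the tests live in {files[-1]}."}


def agent_loop(task: str, model, tools, max_steps: int = 10):
    """Alternate model decisions and tool executions until the model answers."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = model(history)
        if "answer" in action:  # the model decided it is done
            return action["answer"], history
        result = tools[action["tool"]](**action["args"])  # run the requested tool
        history.append({"role": "assistant", "content": json.dumps(action)})
        history.append({"role": "tool", "content": result})
    raise RuntimeError("step budget exhausted")


answer, history = agent_loop("Where are the tests?", scripted_model, TOOLS)
```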

Why CC Matters

- Runs locally. It operates on your computer, using your private environment, data, and context.

- Local‑first design. Unlike OpenAI’s cloud‑centric Codex/agent approach (which orchestrates containers from ChatGPT), CC embraces a “run‑on‑your‑machine” model.

- Intermediate, slow‑takeoff world. In a landscape of jagged capabilities, it often makes more sense to run agents hand‑in‑hand with developers and their specific setups rather than relying on large, cloud‑based swarms that feel like the “AGI end‑game.”

What Sets CC Apart

CC got this order of precedence right (your local machine first, cloud swarms later) and packaged it into a beautiful, minimal, and compelling CLI form factor. This shifted the perception of AI from “a website you visit like Google” to a little spirit/ghost that lives on your computer, a distinct new paradigm of interaction.

5. Vibe Coding

2025 is the year AI crossed the capability threshold needed to build all kinds of impressive programs simply via English, with the programmer free to forget that the code even exists. Amusingly, I coined the term “vibe coding” in this shower‑of‑thoughts tweet, totally oblivious to how far it would go.

With vibe coding, programming is not strictly reserved for highly trained professionals; it is something anyone can do. In this capacity, it is yet another example of what I wrote about in Power to the people: How LLMs flip the script on technology diffusion, namely that, in sharp contrast to all other technology so far, regular people benefit far more from LLMs than professionals, corporations, and governments.

But vibe coding does more than empower regular people to approach programming – it also enables trained professionals to write a lot more (vibe‑coded) software that would otherwise never be written.

- In nanochat, I vibe‑coded my own custom, highly efficient BPE tokenizer in Rust instead of adopting existing libraries or learning Rust at that level.

- I vibe‑coded many projects this year as quick‑app demos of things I wanted to exist, e.g.:

- I even vibe‑coded entire ephemeral apps just to find a single bug – why not? Code is suddenly free, ephemeral, malleable, and discardable after a single use.
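For readers unfamiliar with BPE, the core training loop fits in a short Python sketch: start from raw UTF‑8 bytes, repeatedly find the most frequent adjacent pair of token ids, and replace it with a new id. This is an illustrative simplification, not the Rust tokenizer from nanochat; GPT-style tokenizers typically also pre-split text with a regex and handle special tokens, which this sketch omits.

```python
from collections import Counter


def get_pair_counts(ids: list[int]) -> Counter:
    """Count occurrences of each adjacent pair of token ids."""
    return Counter(zip(ids, ids[1:]))


def merge(ids: list[int], pair: tuple[int, int], new_id: int) -> list[int]:
    """Replace every non-overlapping occurrence of `pair` (left to right) with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text: str, num_merges: int):
    """Learn up to `num_merges` merges; new ids start at 256, after the raw bytes."""
    ids = list(text.encode("utf-8"))
    merges = {}
    for k in range(num_merges):
        counts = get_pair_counts(ids)
        if not counts:
            break
        pair = max(counts, key=counts.get)  # most frequent pair (first seen wins ties)
        new_id = 256 + k
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return ids, merges


ids, merges = train_bpe("aaabdaaabac", num_merges=2)
# merges == {(97, 97): 256, (256, 97): 257}, i.e. "aa" first, then "aa"+"a"
```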

Vibe coding will terraform software and alter job descriptions.

6. Nano Banana / LLM GUI

Google Gemini Nano Banana is one of the most incredible, paradigm‑shifting models of 2025. In my worldview, LLMs are the next major computing paradigm, similar to the personal computers of the 1970s‑80s. Consequently, we will see analogous innovations for fundamentally similar reasons: personal computing, micro‑controllers (cognitive cores), the “internet” of agents, etc.

UI/UX Perspective

- “Chatting” with LLMs today is a bit like issuing commands to a 1980s computer console.

- Text is the raw/favored data representation for computers (and LLMs), but it is not the favored format for people, especially for input.

- People dislike reading large blocks of text – it is slow and effortful. Instead, they love to consume information visually and spatially, which is why GUIs were invented in traditional computing.

In the same way, LLMs should speak to us in our favored formats – images, infographics, slides, whiteboards, animations/videos, web apps, etc. Early and present versions of this are things like emoji and Markdown, which “dress up” text with titles, bold, italics, lists, tables, and so on.

Who will build the LLM GUI? In this worldview, Nano Banana is an early hint of what that might look like. Importantly, it’s not just about image generation; it’s about the joint capability that comes from text generation, image generation, and world knowledge, all tangled up in the model weights.

TL;DR

2025 was an exciting and mildly surprising year for LLMs. They are emerging as a new kind of intelligence, simultaneously a lot smarter and a lot dumber than I expected. In any case, they are extremely useful, and I don’t think the industry has realized even 10% of their potential at present capability.

There are so many ideas to try, and conceptually the field feels wide open. As I mentioned on my Dwarkesh pod earlier this year, I simultaneously (and paradoxically) believe we will see rapid, continued progress and that there is still a lot of work to be done.

Strap in.