JuiceFS+MinIO: Ariste AI Achieved 3x Faster I/O and Cut Storage Costs by 40%+

Source: Dev.to

Storage challenges in quantitative investment: balancing scale, speed, and collaboration

The quantitative investment process runs through four layers in sequence: the data layer, the factor and signal layer, the strategy and position layer, and the execution and trading layer. Together they form a closed loop from data acquisition to trade execution.

Key challenges

- Data scale and growth rate – Historical market data, news data, and self‑calculated factor data total close to 500 TB, with hundreds of gigabytes added daily. Conventional local‑disk storage cannot keep pace with this volume.

- High‑frequency access and low‑latency requirements – Read latency directly affects research efficiency; slow reads stall research iterations.

- Multi‑team parallelism and data management – Each team needs independent, secure isolation so that concurrent experiments neither mix up nor leak data.

Desired capabilities

- High performance – Single‑node read/write bandwidth > 500 MB/s; access latency low enough that users perceive no difference from local disk.

- Easy scalability – On‑demand horizontal scaling of storage and compute without application changes.

- Management capability – Fine‑grained permission control, operation auditing, and data‑lifecycle policies in a single‑pane view.
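A rough way to check a mount point against the 500 MB/s bandwidth target is to time a sequential read. The sketch below is a minimal illustration, not the benchmark used in the article: the function names, the 4 MiB block size, and the temporary sample file are all assumptions, and MB here means 10^6 bytes. OS page caching can inflate the result on repeated runs, so treat the number as an upper bound.

```python
import os
import tempfile
import time

TARGET_MB_PER_S = 500  # single-node bandwidth target from the list above


def read_throughput_mb_s(path: str, block_size: int = 4 * 1024 * 1024) -> float:
    """Read a file sequentially and return the observed throughput in MB/s."""
    total = 0
    start = time.perf_counter()
    # buffering=0 avoids Python-level buffering; the OS page cache still applies.
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(block_size)
            if not chunk:
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    return (total / 1e6) / max(elapsed, 1e-9)


def meets_target(mb_per_s: float, target: float = TARGET_MB_PER_S) -> bool:
    """Return True when the measured rate reaches the target."""
    return mb_per_s >= target


if __name__ == "__main__":
    # Hypothetical sample: in practice, point this at a large file on the
    # storage mount under test instead of a temporary local file.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(os.urandom(64 * 1024 * 1024))
        sample = tmp.name
    try:
        rate = read_throughput_mb_s(sample)
        print(f"{rate:.0f} MB/s, meets target: {meets_target(rate)}")
    finally:
        os.remove(sample)
```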

Evolution of the storage architecture

Stage 1: Local disk

- Used the QuantraByte research framework with a built‑in ETF module storing data directly on local disks.

- Pros: Fast read speeds, quick iteration.

- Cons:

  - Redundant downloads across researchers.

  - Limited capacity (~15 TB) insufficient for growth.

  - Collaboration difficulties when reusing others’ results.
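To make the capacity limit concrete, the arithmetic can be sketched as follows. The 200 GB/day growth rate is an assumed stand-in for "hundreds of gigabytes added daily" (the article gives no exact figure and does not say what the rate was during this stage), and the helper name is hypothetical.

```python
def days_until_full(capacity_tb: float, used_tb: float, daily_growth_gb: float) -> float:
    """Days before a volume fills up at a constant daily growth rate."""
    if daily_growth_gb <= 0:
        raise ValueError("daily_growth_gb must be positive")
    free_gb = max(capacity_tb - used_tb, 0.0) * 1000  # decimal TB -> GB
    return free_gb / daily_growth_gb


# An empty 15 TB disk at an assumed 200 GB/day of new data:
print(days_until_full(capacity_tb=15, used_tb=0, daily_growth_gb=200))  # 75.0
```

Under that assumption, even an empty 15 TB volume would fill in about two and a half months.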

Stage 2: MinIO centralized management

- Centralized all data on MinIO; a separate module handled data ingestion.

- Benefits: Unified public‑data downloads, permission isolation for multi‑team sharing, better storage utilization.

- New bottlenecks:

  - High latency for high‑frequency random reads.

  - No caching in MinIO Community Edition, leading to slow reads/writes of high‑frequency data.
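The article does not say how permission isolation was configured. One common approach on S3‑compatible stores such as MinIO is a per‑team policy scoped to a key prefix. The sketch below only builds the policy document; the bucket name, team name, and function name are hypothetical, and the prefix‑per‑team layout is an assumption.

```python
import json


def team_prefix_policy(bucket: str, team: str) -> dict:
    """Build an S3-style policy limiting a team to its own key prefix."""
    prefix = f"{team}/"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Allow listing only keys under the team's prefix.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
            {
                # Allow object reads and writes only under the team's prefix.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    print(json.dumps(team_prefix_policy("research-data", "team-alpha"), indent=2))
```

The resulting JSON would then be attached to the team's user or group through the store's own policy tooling.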

Stage 3: Introducing JuiceFS for cache acceleration

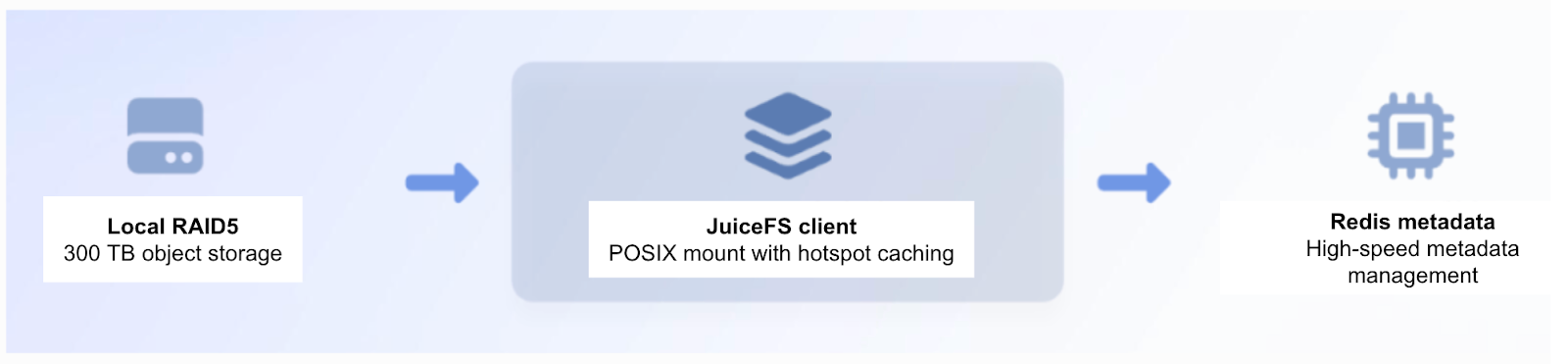

- Deployed JuiceFS cache acceleration with client‑side local RAID5 storage.

- Result: Read/write performance improved by ~3×, greatly enhancing high‑frequency shared‑data access.

- As data volume surpassed 300 TB, scaling the local RAID5 storage became slow and risky, and required manual reconfiguration.
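The idea behind a client‑side cache in front of object storage can be illustrated with a small read‑through LRU cache: repeated reads of the same key are served locally and only misses reach the backend. This is a conceptual sketch, not JuiceFS's implementation; the class name, the `fetch` callback, and the keys are hypothetical.

```python
from collections import OrderedDict
from typing import Callable


class ReadThroughCache:
    """Size-bounded LRU cache that fetches from a backend on a miss."""

    def __init__(self, fetch: Callable[[str], bytes], capacity_bytes: int) -> None:
        self._fetch = fetch
        self._capacity = capacity_bytes
        self._used = 0
        self._blocks: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, key: str) -> bytes:
        if key in self._blocks:
            self._blocks.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._blocks[key]
        self.misses += 1
        data = self._fetch(key)  # slow path: go to the object store
        self._store(key, data)
        return data

    def _store(self, key: str, data: bytes) -> None:
        if len(data) > self._capacity:
            return  # larger than the whole cache, so serve it uncached
        while self._used + len(data) > self._capacity:
            _, evicted = self._blocks.popitem(last=False)  # drop least recently used
            self._used -= len(evicted)
        self._blocks[key] = data
        self._used += len(data)


if __name__ == "__main__":
    backend_calls = []

    def slow_fetch(key: str) -> bytes:
        # Stand-in for an object-store GET.
        backend_calls.append(key)
        return key.encode() * 4

    cache = ReadThroughCache(slow_fetch, capacity_bytes=1024)
    for key in ["tick/a", "tick/b", "tick/a", "tick/a"]:
        cache.read(key)
    print(cache.hits, cache.misses, len(backend_calls))  # 2 2 2
```

With a hot working set that fits in the cache, most reads never touch the object store, which is the effect the ~3× improvement above relies on.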

Stage 4: JuiceFS + MinIO cluster

- Adopted a clustered architecture combining JuiceFS and MinIO.

- Advantages:

  - Sustained high performance: JuiceFS cache meets high‑frequency access demands.

  - Easy cluster scaling: Add identical disks to increase capacity flexibly, enabling rapid horizontal scaling.

Through this four‑stage evolution, we validated the feasibility of an integrated solution that combines cache acceleration, elastic object storage, and POSIX compatibility for quantitative workloads. The approach offers a replicable template that balances performance, cost, and manageability.

Performance and cost benefits

- The JuiceFS + MinIO architecture improved system bandwidth and resource utilization enough to meet the performance requirements of research applications.

- After adding the JuiceFS cache layer, backtesting became much faster: a backtest over 100 million tick‑data entries dropped from hours to tens of minutes.

- Overall storage costs were reduced by ≈ 40 %, while I/O throughput increased by ≈ 3×.