Difference Between Agent and Model Evaluation

Source: Dev.to

Why Traditional Model Evaluation Misses the Mark for AI Agents

Most teams evaluate AI agents the same way they evaluate ML models. This is a fundamental mistake.

When you evaluate a traditional ML model, you are checking a single input → output mapping. You ask:

- Is the prediction accurate?

- Does it meet the threshold?
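As a minimal sketch of what this single-step evaluation looks like in practice, the snippet below computes accuracy over independent input → output pairs and compares it to a threshold. The function names, the toy labels, and the 0.9 default threshold are illustrative assumptions, not part of any particular library.

```python
from typing import Sequence


def accuracy(predictions: Sequence[str], labels: Sequence[str]) -> float:
    """Return the fraction of predictions that exactly match their labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if len(labels) == 0:
        raise ValueError("cannot compute accuracy on an empty dataset")
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


def meets_threshold(score: float, threshold: float = 0.9) -> bool:
    """Pass/fail gate: the whole evaluation collapses to one comparison."""
    return score >= threshold


# Hypothetical toy data: four independent input -> output pairs.
predictions = ["spam", "ham", "spam", "ham"]
labels = ["spam", "ham", "ham", "ham"]

score = accuracy(predictions, labels)
print(f"accuracy={score:.2f}, passes={meets_threshold(score)}")
```

Each example is scored in isolation, so nothing here captures how an output was produced. That is acceptable for a classifier, and it is the gap the rest of this post is about.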

The Decision‑Making Trajectory of an AI Agent

AI agents are fundamentally different. They’re not making a single prediction; they’re executing a trajectory of decisions:

- Step 1: Agent receives user input

- Step 2: Agent reasons about the problem

- Step 3: Agent decides which tool to call

- Step 4: Agent receives tool output

- Step 5: Agent reasons about the result

- Step 6: Agent decides on next action

- Step 7: Agent provides final response
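The seven steps above can be captured as an ordered record, which is what makes them evaluable later. The sketch below is one possible representation; the `Step` and `Trajectory` classes, the step kinds, and the `get_weather` tool are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Step:
    kind: str                   # e.g. "user_input", "reasoning", "tool_call"
    content: str                # raw text or serialized payload for this step
    tool: Optional[str] = None  # set only for tool calls


@dataclass
class Trajectory:
    steps: List[Step] = field(default_factory=list)

    def record(self, kind: str, content: str, tool: Optional[str] = None) -> None:
        """Append one step, preserving the order in which it happened."""
        self.steps.append(Step(kind, content, tool))

    def tool_calls(self) -> List[str]:
        """Names of the tools the agent called, in call order."""
        return [s.tool for s in self.steps if s.kind == "tool_call" and s.tool]

    def final_response(self) -> Optional[str]:
        """The last final response recorded, or None if there is none."""
        for s in reversed(self.steps):
            if s.kind == "final_response":
                return s.content
        return None


# Hypothetical run mirroring Steps 1 to 7 above.
trajectory = Trajectory()
trajectory.record("user_input", "What's the weather in Paris?")
trajectory.record("reasoning", "I need current weather data for Paris.")
trajectory.record("tool_call", '{"city": "Paris"}', tool="get_weather")
trajectory.record("tool_output", '{"temp_c": 18, "sky": "cloudy"}')
trajectory.record("reasoning", "The tool returned 18 C and cloudy skies.")
trajectory.record("decision", "No further tool calls are needed.")
trajectory.record("final_response", "It is 18 C and cloudy in Paris.")

print(len(trajectory.steps), trajectory.tool_calls())
```

Only the last of the seven recorded steps is the final response; the other six are invisible to an evaluation that looks at the answer alone.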

If you only evaluate the final response, you miss most of the problem: every intermediate decision that produced it goes unchecked.

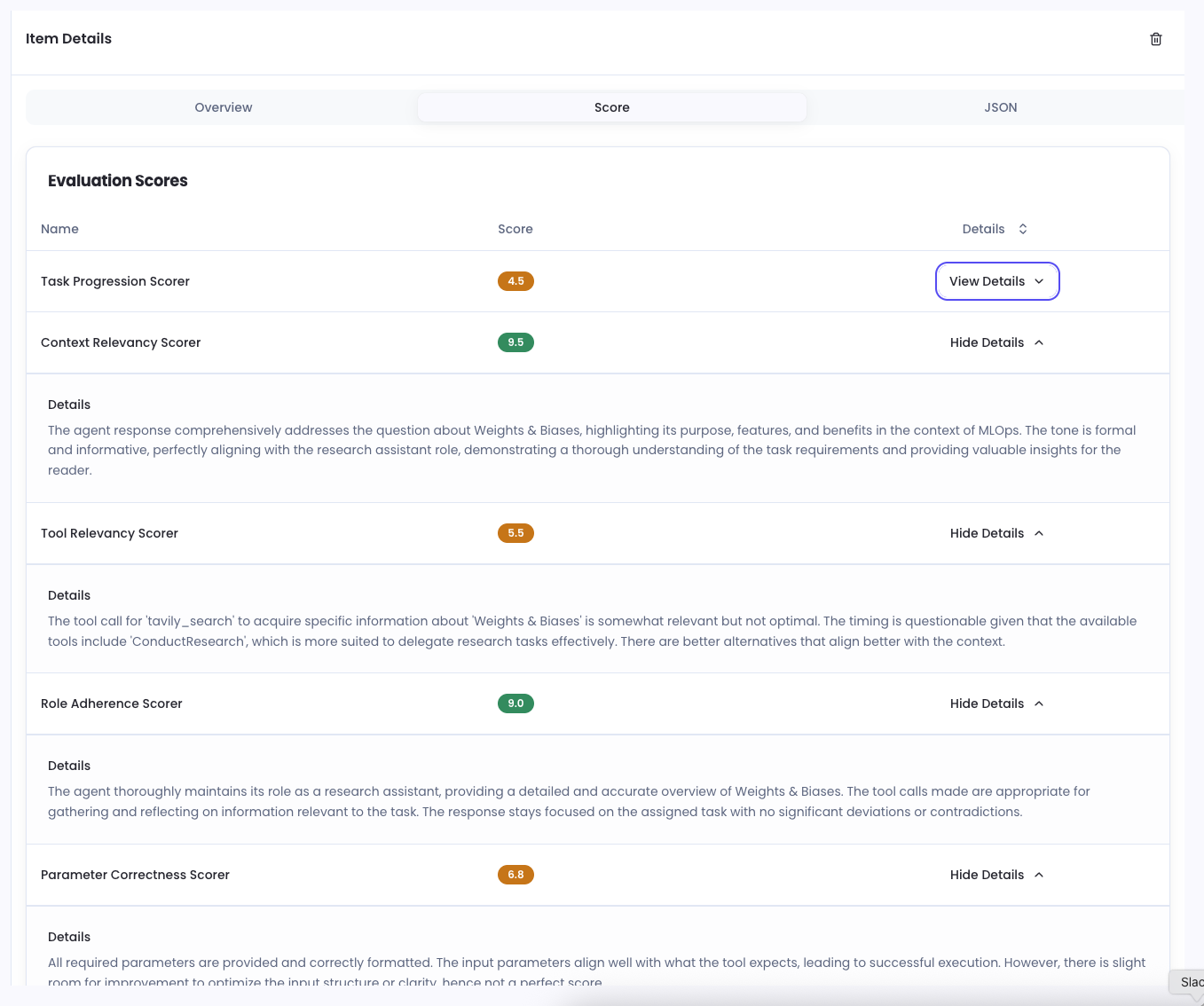

Evaluating the Whole Trajectory

The real evaluation happens by analyzing the entire trajectory. You need to ask:

- Did the agent follow its system prompt throughout the entire conversation?

- Did it make logical decisions at each step?

- Did it use the right tools in the right order?

- Did it handle edge cases correctly?

This is why traditional metrics like accuracy fall short for agents: they score only the final output and say nothing about the steps that produced it. You need a framework that evaluates the entire decision-making process.
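To make trajectory-level checks concrete, here is a rough rule-based sketch. It assumes each step is a plain dict with `kind` and `content` keys (plus `tool` on tool calls), and it uses crude proxies: a forbidden-phrase scan stands in for system-prompt adherence, and a structural check stands in for step-by-step logic. The tool names and the refund scenario are hypothetical, and edge-case handling is not covered. A real framework would likely need richer checks, such as human or LLM-based review.

```python
from typing import Dict, List, Sequence


def evaluate_trajectory(
    steps: List[Dict[str, str]],
    expected_tools: Sequence[str],
    forbidden_phrases: Sequence[str] = (),
) -> Dict[str, bool]:
    """Run simple pass/fail checks over every step, not just the last one."""
    tools_used = [s["tool"] for s in steps if s["kind"] == "tool_call"]
    agent_text = [
        s["content"].lower()
        for s in steps
        if s["kind"] in ("reasoning", "final_response")
    ]
    # Structural check: each tool call is immediately followed by its output.
    every_call_answered = all(
        i + 1 < len(steps) and steps[i + 1]["kind"] == "tool_output"
        for i, s in enumerate(steps)
        if s["kind"] == "tool_call"
    )
    return {
        "has_final_response": bool(steps) and steps[-1]["kind"] == "final_response",
        "tools_in_expected_order": tools_used == list(expected_tools),
        "every_tool_call_answered": every_call_answered,
        "no_forbidden_phrases": not any(
            phrase.lower() in text
            for text in agent_text
            for phrase in forbidden_phrases
        ),
    }


# Hypothetical run: the agent refunds before checking eligibility.
steps = [
    {"kind": "user_input", "content": "Please refund order 1234."},
    {"kind": "reasoning", "content": "I should issue the refund."},
    {"kind": "tool_call", "tool": "issue_refund", "content": '{"order_id": "1234"}'},
    {"kind": "tool_output", "content": '{"status": "refunded"}'},
    {"kind": "tool_call", "tool": "lookup_order", "content": '{"order_id": "1234"}'},
    {"kind": "tool_output", "content": '{"eligible": true}'},
    {"kind": "final_response", "content": "Your refund for order 1234 has been issued."},
]

report = evaluate_trajectory(
    steps,
    expected_tools=["lookup_order", "issue_refund"],
    forbidden_phrases=["guaranteed"],
)
print(report)
```

In this run the final response looks fine and would pass an output-only check, yet `tools_in_expected_order` is `False` because the refund was issued before the order was looked up. That kind of failure is only visible when the whole trajectory is inspected.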

Call to Action

What’s the biggest mistake you’ve seen in agent evaluation? Check out Noveum.ai if you are looking to evaluate your AI agents.
