Building an 'Unstoppable' Serverless Payment System on AWS (Circuit Breaker Pattern)

Source: Dev.to

What happens when your payment gateway goes down? In a traditional app, the user sees a spinner, then a “500 Server Error,” and you lose the sale.

I wanted to build a system that refuses to crash. Even if the backend database is on fire, the user’s order should be accepted, queued, and processed automatically when the system heals.

Tech Stack

- Frontend: Python (Streamlit) – Store & Admin Dashboard.

- Orchestration: AWS Step Functions – The “Brain” handling the logic.

- Compute: AWS Lambda (Java 11) – The “Worker” handling business logic.

- State Store: Amazon DynamoDB – Stores circuit status (Open/Closed) and order history.

- Resiliency: Amazon SQS – The “Parking Lot” for failed orders.

- Observability: Grafana Cloud (Loki) – Log aggregation.

- Infrastructure: Terraform – Complete IaC.

Note: All resources are managed with Terraform. As a best practice, keep resources in separate files so that creating, deleting, or updating any one of them stays manageable.

Problem: Cascading Failures

In microservices, if Service A calls Service B and Service B hangs, Service A eventually hangs too. A surge of “Pay” clicks can hammer the database with retries, effectively DDoS‑ing yourself.

Solution: A Circuit Breaker – similar to a household breaker that trips during a surge to protect the system.
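To make the pattern concrete, here is a minimal in-memory sketch of a circuit breaker. It is not the project's implementation, which keeps the circuit status in DynamoDB and drives it from Step Functions. The class name, the `failure_threshold` default, and the manual `reset()` are assumptions made for illustration.

```python
class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is OPEN."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3):
        # failure_threshold is an illustrative value, not taken from the project.
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0
        self.state = "CLOSED"

    def call(self, func, *args, **kwargs):
        # While OPEN, reject immediately so the backend is not touched at all.
        if self.state == "OPEN":
            raise CircuitOpenError("circuit is OPEN; backend call skipped")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.consecutive_failures += 1
            # Trip the breaker once failures reach the threshold.
            if self.consecutive_failures >= self.failure_threshold:
                self.state = "OPEN"
            raise
        # Any success clears the failure streak.
        self.consecutive_failures = 0
        return result

    def reset(self):
        # Close the circuit again, e.g. after the backend has been repaired.
        self.state = "CLOSED"
        self.consecutive_failures = 0
```

Once the breaker is open, callers fail fast with `CircuitOpenError` instead of piling more retries onto a struggling backend, which is the self-inflicted overload described above.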

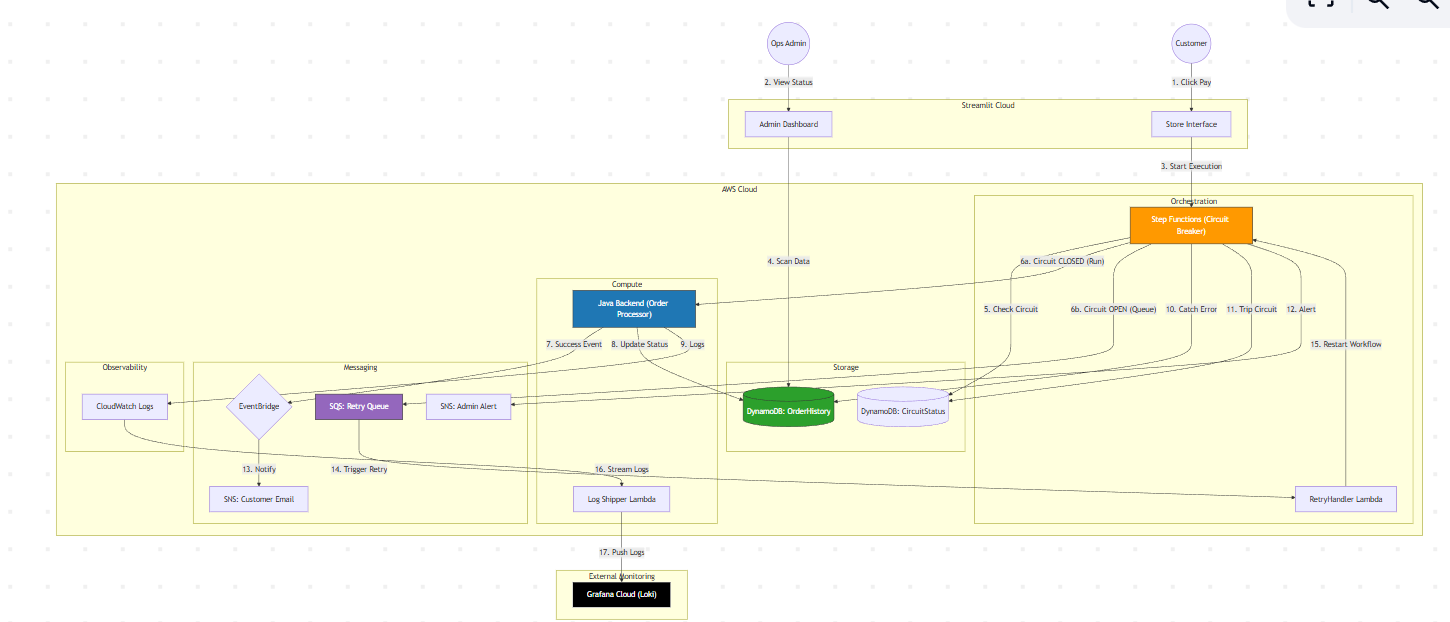

High‑Level Architecture

The system handles three distinct states:

| Path | Description |
|---|---|
| Green (Closed) | Backend is healthy; orders process immediately. |
| Red (Open) | Backend is crashing; the circuit trips and traffic stops reaching the backend. |
| Yellow (Recovery) | Orders are routed to an SQS queue to be retried later automatically. |
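One way the routing between these paths might be expressed is an Amazon States Language fragment, written here as a Python dict so it can be inspected and serialized with `json.dumps`. The table name `CircuitState`, the key `payment-gateway`, the attribute name `status`, and all state names are hypothetical. The two `Pass` states stand in for the real SQS and Lambda steps, and the sketch assumes the circuit item always exists in the table.

```python
import json

# Hypothetical names throughout: table, key, attribute, and state names
# are placeholders, not taken from the project.
CHECK_AND_ROUTE = {
    "StartAt": "CheckCircuit",
    "States": {
        "CheckCircuit": {
            "Type": "Task",
            # Step Functions' direct DynamoDB GetItem integration.
            "Resource": "arn:aws:states:::dynamodb:getItem",
            "Parameters": {
                "TableName": "CircuitState",
                "Key": {"service": {"S": "payment-gateway"}},
            },
            # Store the lookup next to the order instead of replacing it.
            "ResultPath": "$.circuit",
            "Next": "IsCircuitOpen",
        },
        "IsCircuitOpen": {
            "Type": "Choice",
            "Choices": [
                {
                    "Variable": "$.circuit.Item.status.S",
                    "StringEquals": "OPEN",
                    "Next": "QueueOrder",
                }
            ],
            "Default": "ProcessPayment",
        },
        # Placeholders for the SQS send step and the payment Lambda.
        "QueueOrder": {"Type": "Pass", "End": True},
        "ProcessPayment": {"Type": "Pass", "End": True},
    },
}


def route(circuit_status):
    """Evaluate the Choice state locally for a given circuit status."""
    choice = CHECK_AND_ROUTE["States"]["IsCircuitOpen"]
    for rule in choice["Choices"]:
        if circuit_status == rule["StringEquals"]:
            return rule["Next"]
    return choice["Default"]


definition_json = json.dumps(CHECK_AND_ROUTE, indent=2)
```

The `route` helper only mimics the single `StringEquals` rule so the branching can be checked locally; it is not a general ASL interpreter.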

Logic Flow

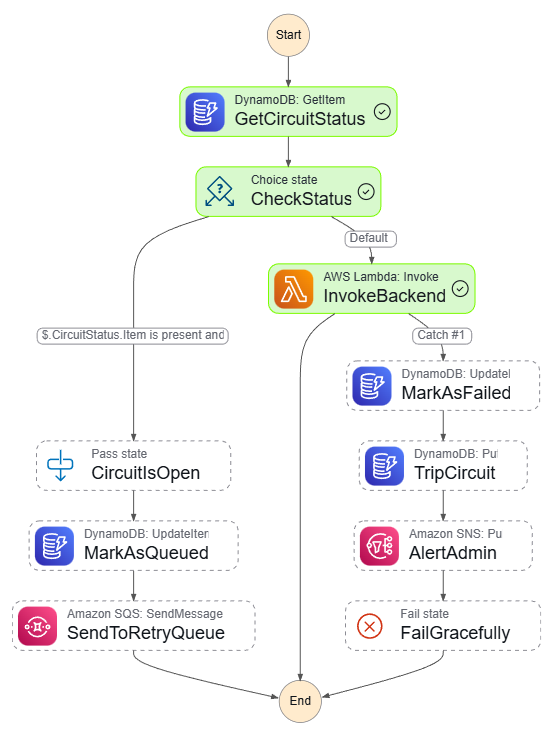

The core is an AWS Step Functions state machine acting as a traffic controller.

-

The Check – On each “Pay” click, the workflow checks DynamoDB for the circuit status.

- If OPEN, it skips the backend.

- If CLOSED, it proceeds to the Java Lambda.

-

The Execution – The Lambda processes the payment.

- Success: Updates order history to COMPLETED and emits an EventBridge event (triggers a customer email via SNS).

- Failure: Catches the error and retries with exponential back‑off (wait 1 s, then 2 s).

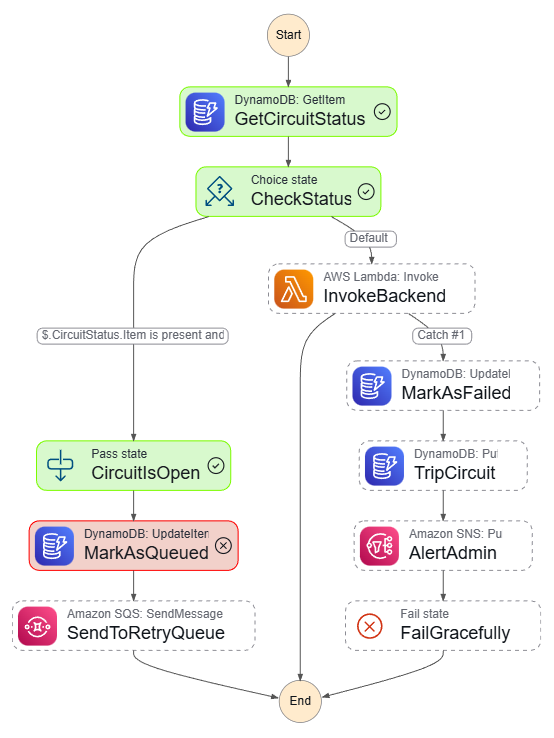

-

The “Trip” – If the backend fails repeatedly, the state machine:

- Writes status OPEN to DynamoDB.

- Alerts the sysadmin via SNS (“Critical: Circuit Tripped”).

- Marks the order as FAILED in the dashboard.

-

Self‑Healing (Auto‑Retry) – When the circuit is OPEN, new orders are marked QUEUED and sent to Amazon SQS.

- A “Retry Handler” Lambda listens to the queue, waits (e.g., 30 s), then re‑submits the order to the state machine.

- If the backend is fixed, the order processes; otherwise it returns to the queue.
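The self-healing step could look roughly like the following Lambda handler. This is a sketch under several assumptions: the article does not say which language the Retry Handler is written in, the environment variable `STATE_MACHINE_ARN` is a hypothetical name, and the wait is assumed to come from an SQS delivery delay rather than from sleeping inside the function. The optional `sfn_client` parameter exists only so the handler can be exercised without AWS credentials.

```python
import json
import os


def handler(event, context, sfn_client=None):
    """Re-submit each queued order to the Step Functions state machine."""
    if sfn_client is None:
        import boto3  # bundled with the AWS Lambda Python runtime

        sfn_client = boto3.client("stepfunctions")

    # STATE_MACHINE_ARN is a hypothetical environment variable name.
    state_machine_arn = os.environ["STATE_MACHINE_ARN"]

    resubmitted = 0
    # An SQS-triggered Lambda receives a batch of messages under "Records".
    for record in event["Records"]:
        order = json.loads(record["body"])
        sfn_client.start_execution(
            stateMachineArn=state_machine_arn,
            input=json.dumps(order),
        )
        resubmitted += 1
    return {"resubmitted": resubmitted}
```

Because each re-submitted order goes through the circuit check again, an order that arrives while the circuit is still OPEN simply lands back in the queue, which matches the loop described in the last bullet.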

Low‑Level Diagram

Tested Data Scenarios

Success

Chaos Mode

Observability & Monitoring

- Integrated Grafana Cloud (Loki) to ingest logs from CloudWatch.

- Streamlit Dashboard shows live order status (PENDING → COMPLETED or FAILED).

- Grafana Explore enables deep log searches, e.g., `{service="order-processor"}`, to locate specific stack traces.
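The same kind of search can be run outside the Grafana UI through Loki's HTTP API. The sketch below only builds the request URL for the `query_range` endpoint. The host is a placeholder, the `|= "Exception"` line filter is an assumed way to narrow the stream to stack traces, and authentication (which Grafana Cloud requires) is left out.

```python
from urllib.parse import urlencode


def loki_query_url(base_url, logql, limit=100):
    """Build a URL for Loki's /loki/api/v1/query_range endpoint."""
    params = urlencode({"query": logql, "limit": limit})
    return f"{base_url}/loki/api/v1/query_range?{params}"


# Placeholder host; the label selector is the one shown above, with an
# assumed line filter that keeps only lines containing "Exception".
url = loki_query_url(
    "https://logs.example.invalid",
    '{service="order-processor"} |= "Exception"',
)
```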

Key Learnings & Trade‑offs

| Aspect | Insight |
|---|---|
| Complexity vs. Reliability | More moving parts (queues, state machines) increase complexity but deliver high availability; the frontend never sees a crash. |
| “Ghost” Data | By default, a Catch block replaces the state's original input with the error output. Setting ResultPath keeps the original input, including the order ID, so the database can still be updated after a failure. |
| Cost Optimization | Standard Step Functions workflows can be expensive at scale. Switching to Express Workflows and using ARM64 (Graviton) Lambdas can reduce costs by ~40%. |
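The “Ghost” Data point is easier to see with a small example. Below is a hypothetical Task state, written as a Python dict, with a retrier that matches the 1 s and 2 s back-off described earlier and a catcher that uses `ResultPath`. The function name `order-processor`, the state names, and the `$.error` field are assumptions, and `apply_catch` is a simplified local stand-in for how Step Functions builds a catcher's output.

```python
import copy

# Hypothetical state: function and state names are placeholders.
PROCESS_PAYMENT_STATE = {
    "Type": "Task",
    "Resource": "arn:aws:states:::lambda:invoke",
    "Parameters": {"FunctionName": "order-processor", "Payload.$": "$"},
    "Retry": [
        {
            "ErrorEquals": ["States.ALL"],
            "IntervalSeconds": 1,
            "BackoffRate": 2.0,
            "MaxAttempts": 2,
        }
    ],
    "Catch": [
        {
            "ErrorEquals": ["States.ALL"],
            # Attach the error under $.error instead of replacing the input.
            "ResultPath": "$.error",
            "Next": "TripCircuit",
        }
    ],
    "Next": "MarkCompleted",
}


def retry_delays(retrier):
    """Seconds waited before each retry attempt."""
    return [
        retrier["IntervalSeconds"] * retrier["BackoffRate"] ** attempt
        for attempt in range(retrier["MaxAttempts"])
    ]


def apply_catch(state_input, error_output, result_path="$"):
    """Mimic a catcher's output for "$" or a top-level "$.field" path."""
    if result_path == "$":
        # Default behaviour: the error output replaces the whole input.
        return error_output
    field = result_path[2:]  # strip the leading "$."
    merged = copy.deepcopy(state_input)
    merged[field] = error_output
    return merged


order = {"orderId": "o-42"}
error = {"Error": "PaymentFailed", "Cause": "gateway timeout"}
lost = apply_catch(order, error)             # order ID is gone
kept = apply_catch(order, error, "$.error")  # order ID survives
```

With the default path, the next state receives only the error fields, so it has no order ID to mark as FAILED. With `$.error`, the order and the error travel together.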

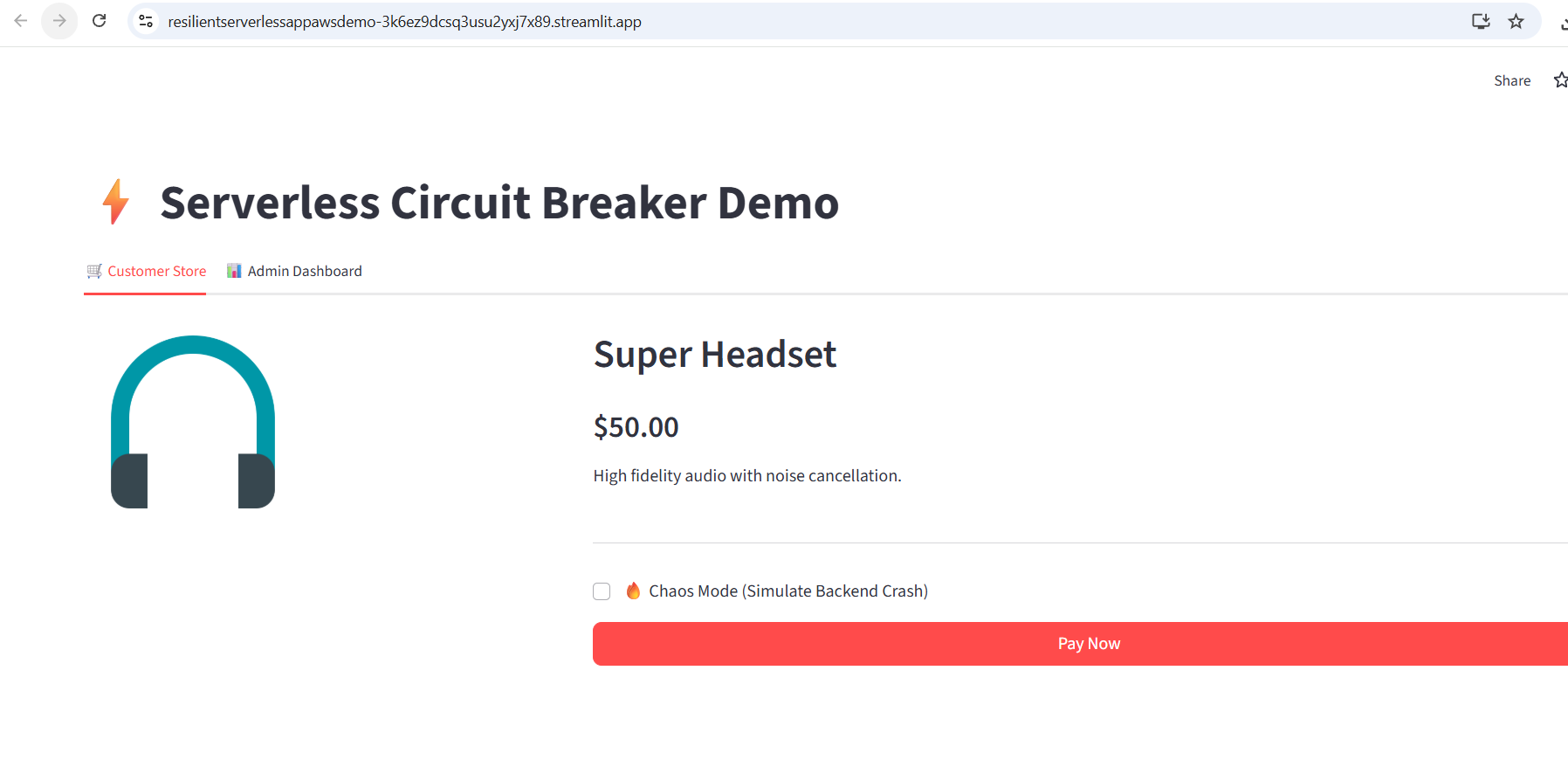

Application Screenshots

Order‑placing UI

Admin UI

Conclusion

This project demonstrates how an event‑driven architecture combined with the Circuit Breaker pattern can build systems that degrade gracefully. Instead of losing revenue during a crash, traffic is simply “paused” and processed once the storm passes.

Technologies used: AWS, Java, Python, Terraform, Grafana.