Beyond the Hype: Understanding How AI Agents Actually Work (And Why They Mirror How You Function)

Source: Dev.to

If you’ve been trying to make sense of the AI landscape lately, you’ve probably encountered a bewildering alphabet soup: LLMs, RAG, AI Agents, MCP. These terms get thrown around as if everyone should just know what they mean, but most explanations make things more complicated than they need to be.

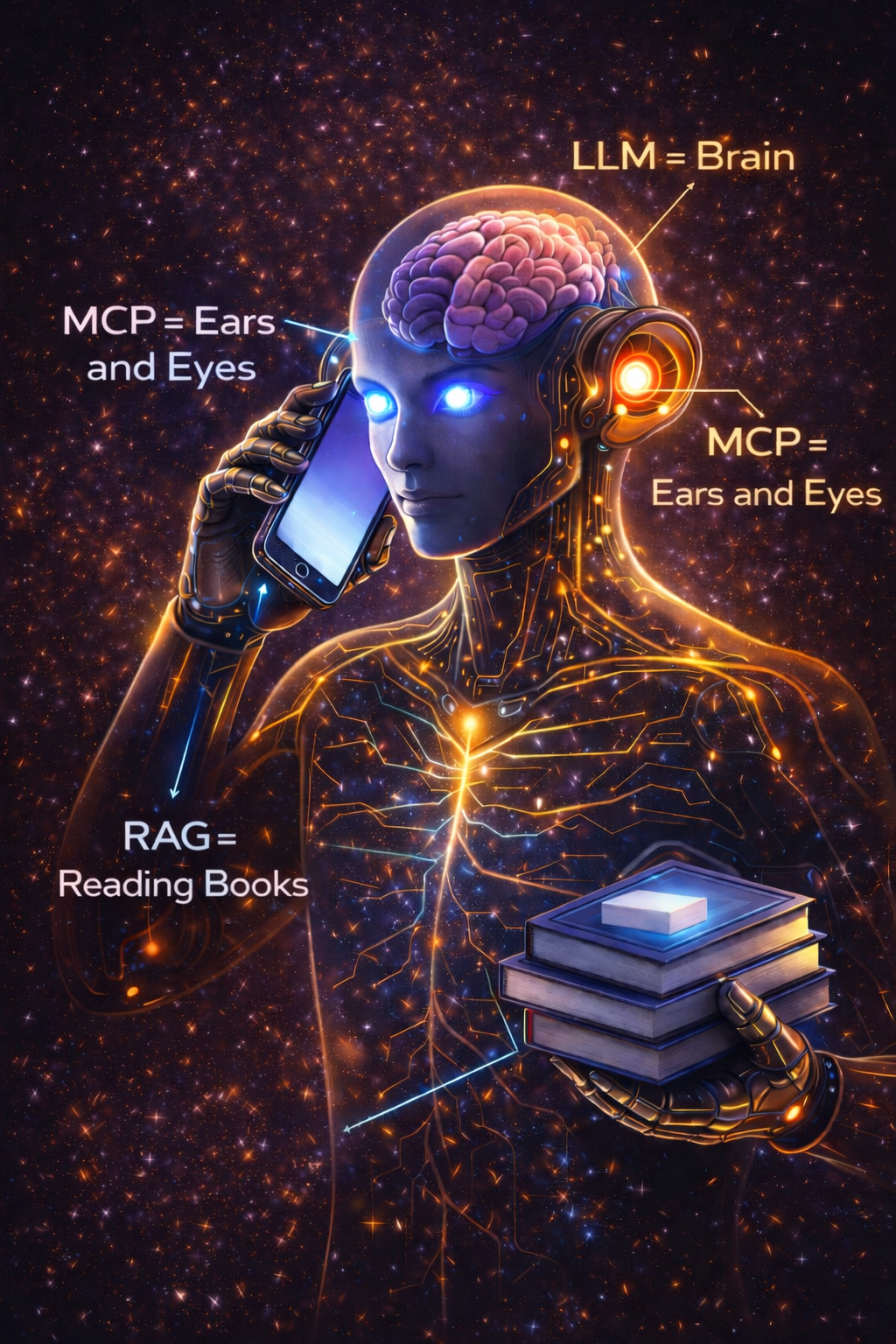

Below is a clean, human‑body analogy that ties everything together.

The Brain: Where It All Begins (LLMs)

Think of a Large Language Model (LLM) as the brain.

- It does the thinking, reasoning, and creative work.

- Like your brain, it only knows what it has absorbed during training.

Strength: Powerful reasoning, analysis, generation.

Limitation: Fixed knowledge base – it can’t know anything that happened after its training cut‑off.

Ask it about something that happened last week and it’s clueless, just as you’d be about a conversation you missed.

Accessing and Adding Knowledge: RAG Gives the Brain Eyes to Read

Now imagine giving that brain access to a massive library. That’s what Retrieval‑Augmented Generation (RAG) does.

- RAG searches external documents, databases, or knowledge bases for relevant information.

- The retrieved content is fed to the LLM, which then incorporates it into its response.

Result: The brain can read while it thinks. It still uses its own knowledge, but it can also reference up‑to‑date material (company docs, news articles, technical manuals, etc.).
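To make the retrieve-then-generate flow concrete, here is a minimal sketch in Python. It is only an illustration: the `DOCUMENTS` list, the word-overlap scoring, and the function names are all assumptions made for this example, and no real LLM is called. Production RAG systems typically use embeddings and a vector index instead of word overlap.

```python
import re

# Hypothetical in-memory "library"; a real system would use a document store or vector index.
DOCUMENTS = [
    "The Q3 expense policy caps hotel stays at 200 dollars per night.",
    "Support tickets must receive a first response within four business hours.",
    "The office is closed on the last Friday of December.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, documents):
    """Place the retrieved passages in front of the question for the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

prompt = build_prompt("What is the hotel cap in the expense policy?", DOCUMENTS)
print(prompt)
```

The resulting `prompt` string is what would be sent to the LLM, so the model answers from the retrieved passage rather than from its training data alone.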

Taking Action: AI Agents Use Tools (Hands)

A brain that can read is great, but what if it could also do things?

AI Agents are the brain equipped with tools:

| Capability | Example |
|---|---|
| Planning & Decision‑making | Choose the next step in a workflow |
| Tool Use | Access calendars, run code, send emails |
| Task Execution | Schedule meetings, generate reports, trigger APIs |

Agents move beyond answering questions; they complete tasks, make decisions, and interact with other systems.
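The plan, act, observe cycle behind an agent can be sketched in a few lines of Python. Everything here is hypothetical: the two tools return canned strings, and `decide_next_step` is a hard-coded stand-in for the LLM that would normally choose the next tool call.

```python
# Hypothetical tools; real agents would call calendar, email, or code-execution APIs.
def check_calendar(day):
    return f"{day}: free at 10:00"

def schedule_meeting(day, time):
    return f"Meeting booked for {day} at {time}"

TOOLS = {"check_calendar": check_calendar, "schedule_meeting": schedule_meeting}

def decide_next_step(goal, history):
    """Stand-in for the LLM: pick the next tool call, or None when done."""
    if not history:
        return ("check_calendar", {"day": goal["day"]})
    if len(history) == 1:
        return ("schedule_meeting", {"day": goal["day"], "time": "10:00"})
    return None

def run_agent(goal, max_steps=5):
    """Loop: decide, act with a tool, record the observation, repeat."""
    history = []
    for _ in range(max_steps):
        step = decide_next_step(goal, history)
        if step is None:
            break
        name, args = step
        observation = TOOLS[name](**args)
        history.append((name, observation))
    return history

result = run_agent({"task": "book a sync", "day": "Tuesday"})
for name, observation in result:
    print(name, "->", observation)
```

The `max_steps` cap is a simple safeguard so that a confused planner cannot loop forever; in a real agent the planner is the model itself, fed the goal and the history of observations.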

The Senses: MCP Connects You to the External World

A brain with tools still needs a way to sense and reach the world in real time. Enter MCP, the Model Context Protocol: an open standard for connecting AI applications to external tools and data sources.

- Acts like the senses: hearing, seeing, and communicating with external systems.

- Carries live context into the model through a common interface: Slack messages, calendar events, real‑time stock prices, weather updates, database changes, etc.

With MCP: The AI has real‑time awareness beyond its static training data.

Without MCP: The AI is like a person locked in a quiet room—smart, but cut off from what’s happening now.
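Under the hood, MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. The sketch below builds such a request by hand and answers it with a toy in-process handler. It does not use the official MCP SDK or any real transport, and the `get_weather` tool, its canned reply, and the helper names are assumptions made purely for illustration.

```python
import json

def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request in the shape MCP uses for tools/call."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }

def toy_server(request):
    """Hypothetical in-process server exposing one made-up tool, get_weather."""
    reply = {"jsonrpc": "2.0", "id": request["id"]}
    if request.get("method") != "tools/call":
        reply["error"] = {"code": -32601, "message": "Method not found"}
        return reply
    params = request.get("params", {})
    if params.get("name") != "get_weather":
        reply["error"] = {"code": -32602, "message": "Unknown tool"}
        return reply
    city = params["arguments"]["city"]
    text = f"Weather in {city}: sunny (canned demo value)"
    reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    return reply

request = make_tool_call(1, "get_weather", {"city": "Boston"})
response = toy_server(request)
print(json.dumps(request, indent=2))
print(response["result"]["content"][0]["text"])
```

Because every server speaks the same message shapes, an AI application can talk to a Slack server, a database server, or a weather server without custom glue code for each one.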

Why This Matters to You

| Component | What You Gain |
|---|---|
| LLM | Powerful reasoning, but limited to training data |
| RAG | Ability to pull in your own knowledge without retraining |
| AI Agents | Automation of whole workflows, not just Q&A |
| MCP | Real‑time connection to the tools and data you use daily |

The human‑body analogy isn’t perfect, but it captures the essential point: modern AI systems work best when all the pieces work together.

- A brain without tools can only think.

- Tools without a brain can’t accomplish anything meaningful.

- And all of it is useless if you can’t hear, see, or communicate with the world around you.

When you combine them, you get an AI that can actually help you do meaningful work.

The Bottom Line

We’re no longer building smarter chatbots; we’re building coherent AI systems that mirror how humans think, read, act, and sense the world.

That mirrors how we actually function: thinking, learning, acting, and connecting it all together.

The next time someone throws around terms like “RAG pipeline” or “agentic workflows,” you’ll know exactly what they’re talking about. More importantly, you’ll understand how to actually use these technologies to solve real problems.

Because at the end of the day, the best technology isn’t the most complex—it’s the kind that makes sense, the kind that works the way we do.

And that’s exactly what we’re building.

Thanks,

Sreeni Ramadorai