Auto-grading decade-old Hacker News discussions with hindsight

Source: Hacker News

Overview

Yesterday I stumbled on the HN thread “Show HN: Gemini Pro 3 hallucinates the HN front page 10 years from now”, in which Gemini 3 invents a front page from a decade in the future. One comment linked to the real HN front page from exactly 10 years ago. While reading those old discussions, I realized an LLM could grade them for prescience far more efficiently than I could by hand. Using the newly released Opus 4.5, I built a pipeline that fetches every HN front-page article from December 2015, runs a hindsight analysis of each one with ChatGPT 5.1 Thinking, and renders the results as static HTML.

The repository is on GitHub: .

Why this exercise is interesting

Training forward‑future predictors

Predicting the future can be treated as a serious, trainable skill. With enough historical data and feedback loops, we can improve our forecasting abilities—something “superforecasters” already advocate.

Future LLMs are watching

Everything we do today may be scrutinized in detail by future, cheap‑to‑run intelligence. Implicit “security by obscurity” assumptions become fragile when perfect reconstruction and synthesis become possible. Better to act responsibly now.

Implementation

Data collection

- Given a date, download the front page (≈30 articles).

- For each article, fetch the article content and the full comment thread via the Algolia API.

- Package everything into a markdown prompt for analysis.
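The data-collection steps above can be sketched in Python roughly as follows. This is not the repository's code. The front-page URL (`https://news.ycombinator.com/front?day=YYYY-MM-DD`), the row markup the regex matches, and every helper name are assumptions. The thread endpoint `https://hn.algolia.com/api/v1/items/<id>` is Algolia's public HN API, which returns a story with its comments nested under `children`. Fetching the linked article's own content is left out.

```python
"""Sketch of the data-collection step (hypothetical helper names).

Assumptions: the day's front page is scraped from
https://news.ycombinator.com/front?day=YYYY-MM-DD, and each thread comes
from the Algolia HN items endpoint https://hn.algolia.com/api/v1/items/<id>.
"""
import json
import re
import urllib.request
from typing import List

FRONT_URL = "https://news.ycombinator.com/front?day={day}"
ITEM_URL = "https://hn.algolia.com/api/v1/items/{item_id}"

# Assumed markup: each story row looks like <tr class="athing ..." id="12345">,
# with either single or double quotes around attribute values.
ATHING_RE = re.compile(r"""<tr class=['"]athing[^'"]*['"] id=['"](\d+)['"]""")


def fetch_text(url: str) -> str:
    """Download a URL and decode the body as UTF-8."""
    req = urllib.request.Request(url, headers={"User-Agent": "hn-hindsight-sketch"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.read().decode("utf-8")


def front_page_ids(html: str, limit: int = 30) -> List[int]:
    """Extract story ids, in page order, from a front-page HTML dump."""
    return [int(m) for m in ATHING_RE.findall(html)][:limit]


def fetch_thread(item_id: int) -> dict:
    """Fetch a story together with its nested comment tree from Algolia."""
    return json.loads(fetch_text(ITEM_URL.format(item_id=item_id)))


def thread_to_markdown(item: dict) -> str:
    """Flatten an Algolia item tree into indented markdown bullets."""
    lines = [f"# {item.get('title') or '(untitled)'}", f"URL: {item.get('url') or 'n/a'}", ""]

    def walk(node: dict, depth: int) -> None:
        for child in node.get("children", []):
            # Deleted comments come back with author/text set to null; skip them
            # but still descend into any replies they have.
            if child.get("author") and child.get("text"):
                lines.append(f"{'  ' * depth}- {child['author']}: {child['text']}")
            walk(child, depth + 1)

    walk(item, 0)
    return "\n".join(lines)
```

For one day, the pieces would combine as `ids = front_page_ids(fetch_text(FRONT_URL.format(day="2015-12-01")))`, followed by `thread_to_markdown(fetch_thread(i))` for each id. Algolia returns comment text as HTML, which this sketch passes through unchanged.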

Prompt used for ChatGPT 5.1 Thinking

The following is an article that appeared on Hacker News 10 years ago, and the discussion thread.

Let's use our benefit of hindsight now in 6 sections:

1. Give a brief summary of the article and the discussion thread.

2. What ended up happening to this topic? (research the topic briefly and write a summary)

3. Give out awards for "Most prescient" and "Most wrong" comments, considering what happened.

4. Mention any other fun or notable aspects of the article or discussion.

5. Give out grades to specific people for their comments, considering what happened.

6. At the end, give a final score (from 0-10) for how interesting this article and its retrospect analysis was.

As for the format of Section 5, use the header "Final grades" and follow it with an unordered list in the format:

- name: grade (optional comment)

Example:

Final grades

- speckx: A+ (excellent predictions on …)

- tosh: A (correctly predicted this or that …)

- keepamovin: A

- bgwalter: D

- fsflover: F (completely wrong on …)

For Section 6, use the prefix:

Article hindsight analysis interestingness score:

Processing

- Submit the prompt to GPT 5.1 Thinking via the OpenAI API.

- Parse the returned markdown.

- Render the results into static HTML pages for easy browsing.
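The submit-and-parse steps might look roughly like this. It is a sketch, not the pipeline's actual code. It assumes the official `openai` Python SDK's Responses API and a model id of `gpt-5.1`. The regular expressions assume the model follows the “Final grades” and score formats requested in the prompt, while tolerating bolded names, which models often add.

```python
"""Sketch of the processing step: submit one prompt, then parse the reply.

Assumptions: the official `openai` Python SDK (Responses API), the model id
"gpt-5.1", and a reply that follows the formats requested in the prompt.
"""
import re
from typing import Dict, Optional

# One "- name: grade (optional comment)" bullet; tolerates **bold** names.
GRADE_RE = re.compile(r"^\s*[-*]\s+(?P<name>[^:]+?):\**\s*(?P<grade>[A-F][+-]?)(?=\s|\(|$)")
# "Article hindsight analysis interestingness score: 8" (optionally bolded or "/10").
SCORE_RE = re.compile(r"interestingness score:\s*\**\s*(\d+(?:\.\d+)?)", re.IGNORECASE)


def analyze(prompt: str, model: str = "gpt-5.1") -> str:
    """Send one prompt to the OpenAI API and return the reply text."""
    from openai import OpenAI  # lazy import: the parsers below work without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.responses.create(model=model, input=prompt)
    return response.output_text


def parse_grades(reply: str) -> Dict[str, str]:
    """Map commenter name -> letter grade from the list under "Final grades"."""
    grades: Dict[str, str] = {}
    in_section = False
    for line in reply.splitlines():
        if not in_section:
            in_section = "final grades" in line.lower()
            continue
        if not line.strip():
            continue  # blank lines between bullets are allowed
        match = GRADE_RE.match(line)
        if not match:
            break  # the first non-bullet line ends the section
        grades[match["name"].strip("* ")] = match["grade"]
    return grades


def parse_score(reply: str) -> Optional[float]:
    """Return the 0-10 interestingness score, or None if it is missing."""
    match = SCORE_RE.search(reply)
    return float(match.group(1)) if match else None
```

Keeping the parsers independent of the API call means saved replies can be re-parsed offline without spending another query.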

Rendering and hosting

The generated pages are hosted at .

All intermediate data files are available as data.zip under the same URL prefix (intentionally avoiding a direct link).
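Rendering can be as simple as filling a template. The sketch below uses a hypothetical layout, not the one on the hosted pages. It escapes every value with `html.escape`, shows the parsed grades as a table, and places the raw markdown analysis in a `<pre>` block. The function names and the `site` output directory are assumptions.

```python
"""Sketch of rendering one analysis as a static HTML page (hypothetical layout)."""
import html
from pathlib import Path
from typing import Dict, List

PAGE = """<!DOCTYPE html>
<html lang="en">
<head><meta charset="utf-8"><title>{title}</title></head>
<body>
<h1><a href="{url}">{title}</a></h1>
<table>
<tr><th>Commenter</th><th>Grade</th></tr>
{rows}
</table>
<pre>{analysis}</pre>
</body>
</html>
"""


def render_page(title: str, url: str, grades: Dict[str, str], analysis_md: str) -> str:
    """Return a self-contained HTML page; every inserted value is escaped."""
    rows = "\n".join(
        f"<tr><td>{html.escape(name)}</td><td>{html.escape(grade)}</td></tr>"
        for name, grade in grades.items()
    )
    return PAGE.format(
        title=html.escape(title),
        url=html.escape(url, quote=True),
        rows=rows,
        analysis=html.escape(analysis_md),
    )


def write_site(pages: Dict[str, str], out_dir: str = "site") -> List[Path]:
    """Write {filename: html} pairs under out_dir and return the paths written."""
    root = Path(out_dir)
    root.mkdir(parents=True, exist_ok=True)
    written = []
    for name, page in pages.items():
        path = root / name
        path.write_text(page, encoding="utf-8")
        written.append(path)
    return written
```

A fuller version would convert the markdown analysis to HTML with a converter such as the third-party `markdown` package instead of escaping it into `<pre>`.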

Examples

A few highlight pages from December 2015:

- December 3 2015 – Swift went open source

- December 6 2015 – Launch of Figma

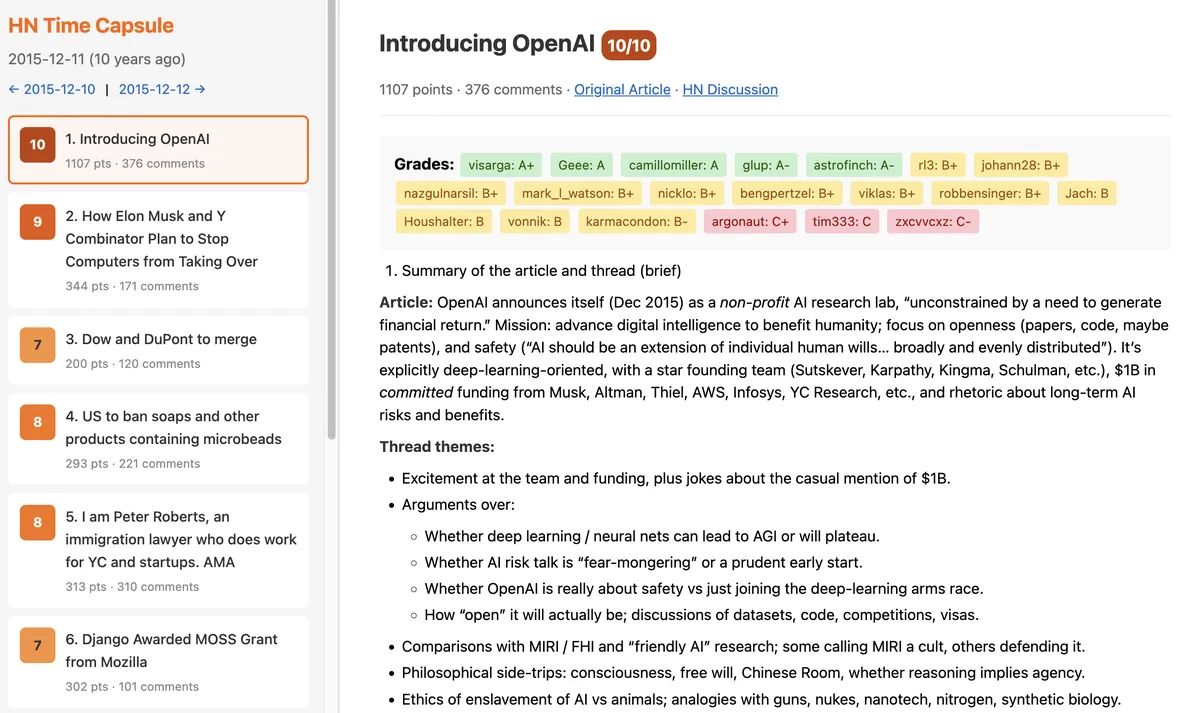

- December 11 2015 – Original announcement of OpenAI

- December 16 2015 – geohot is building Comma

- December 22 2015 – SpaceX launch webcast: Orbcomm‑2 Mission

- December 28 2015 – Theranos struggles

Hall of Fame

The Hall of Fame page () ranks the top commenters of December 2015 by an IMDb‑style grade‑point average. Notable names include:

- pcwalton

- tptacek

- paulmd

- cstross

- greglindahl

- moxie

- hannob

- 0xcde4c3db

- Manishearth

- johncolanduoni

GPT 5.1 Thinking flagged many of their comments as especially insightful and prescient.
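An “IMDb-style” average usually means a Bayesian weighted rating. A commenter's mean grade R over v graded comments is blended with the global mean C using a prior weight m, so a single lucky A+ cannot outrank a steady record of A's. The sketch below assumes that formula. The letter-to-point table and m = 3 are illustrative guesses rather than the values behind the actual Hall of Fame.

```python
"""Sketch of an IMDb-style (Bayesian) weighted grade-point average.

Assumptions: the letter-to-point mapping and the prior weight m are
illustrative choices, not the values used for the actual Hall of Fame.
"""
from collections import defaultdict
from typing import Dict, List, Tuple

POINTS = {"A+": 4.3, "A": 4.0, "A-": 3.7, "B+": 3.3, "B": 3.0, "B-": 2.7,
          "C+": 2.3, "C": 2.0, "C-": 1.7, "D+": 1.3, "D": 1.0, "D-": 0.7, "F": 0.0}


def weighted_gpas(grades: List[Tuple[str, str]], m: float = 3.0) -> Dict[str, float]:
    """grades: (username, letter) pairs pooled across all articles.

    Each user's GPA R over v graded comments is shrunk toward the global
    mean C:  WR = v/(v+m) * R + m/(v+m) * C.
    """
    per_user = defaultdict(list)
    for user, letter in grades:
        per_user[user].append(POINTS[letter])
    all_points = [p for pts in per_user.values() for p in pts]
    if not all_points:
        return {}
    c = sum(all_points) / len(all_points)  # global mean grade C
    result = {}
    for user, pts in per_user.items():
        v, r = len(pts), sum(pts) / len(pts)
        result[user] = v / (v + m) * r + m / (v + m) * c
    return result


def hall_of_fame(grades: List[Tuple[str, str]], top: int = 10, m: float = 3.0) -> List[str]:
    """Usernames sorted by weighted GPA, highest first."""
    scores = weighted_gpas(grades, m)
    return sorted(scores, key=scores.get, reverse=True)[:top]
```

With `[("alice", "A")] * 3 + [("bob", "A+"), ("carol", "F"), ("carol", "C")]`, `hall_of_fame` returns `["alice", "bob", "carol"]`. Bob's raw GPA is higher, but his single grade is pulled strongly toward the global mean of 3.05.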

Cost and performance

Processing 31 days × 30 articles = 930 LLM queries cost about $58 and took roughly 1 hour of compute time. Future models will likely make this kind of retrospective analysis even cheaper and faster.
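Worked out per unit, the figures above give:

```latex
\begin{aligned}
31~\text{days} \times 30~\text{articles} &= 930~\text{queries} \\
\$58 / 930 &\approx \$0.062~\text{per query} \\
\$58 / 31 &\approx \$1.87~\text{per day of front pages} \\
3600~\text{s} / 930 &\approx 3.9~\text{s of wall-clock time per query, on average}
\end{aligned}
```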

I hope people enjoy exploring the results. The code and the Opus 4.5 scripts are open source, so anyone can reproduce or extend the analysis.