Why Most A/B Tests Fail in Subscription Apps

Source: Dev.to

If you build subscription apps long enough, you eventually run into a frustrating moment:

You run an A/B test.

You wait two weeks.

The result is… inconclusive. Or worse—misleading.

As a founder and operator working on subscription products, I’ve seen this happen repeatedly. Teams invest time in A/B testing, but revenue barely moves. Confidence drops. Testing slows down. Eventually, A/B testing becomes something the team talks about, not something they trust.

After analyzing hundreds of subscription apps and paywall iterations—and making plenty of mistakes myself—I’ve learned a hard truth:

Most A/B tests don’t fail because of bad execution.

They fail because they were never designed to answer the right question.

This article is the first post in my Weekly Growth Tactics series.

Let’s start by fixing how we think about A/B testing in subscription apps.

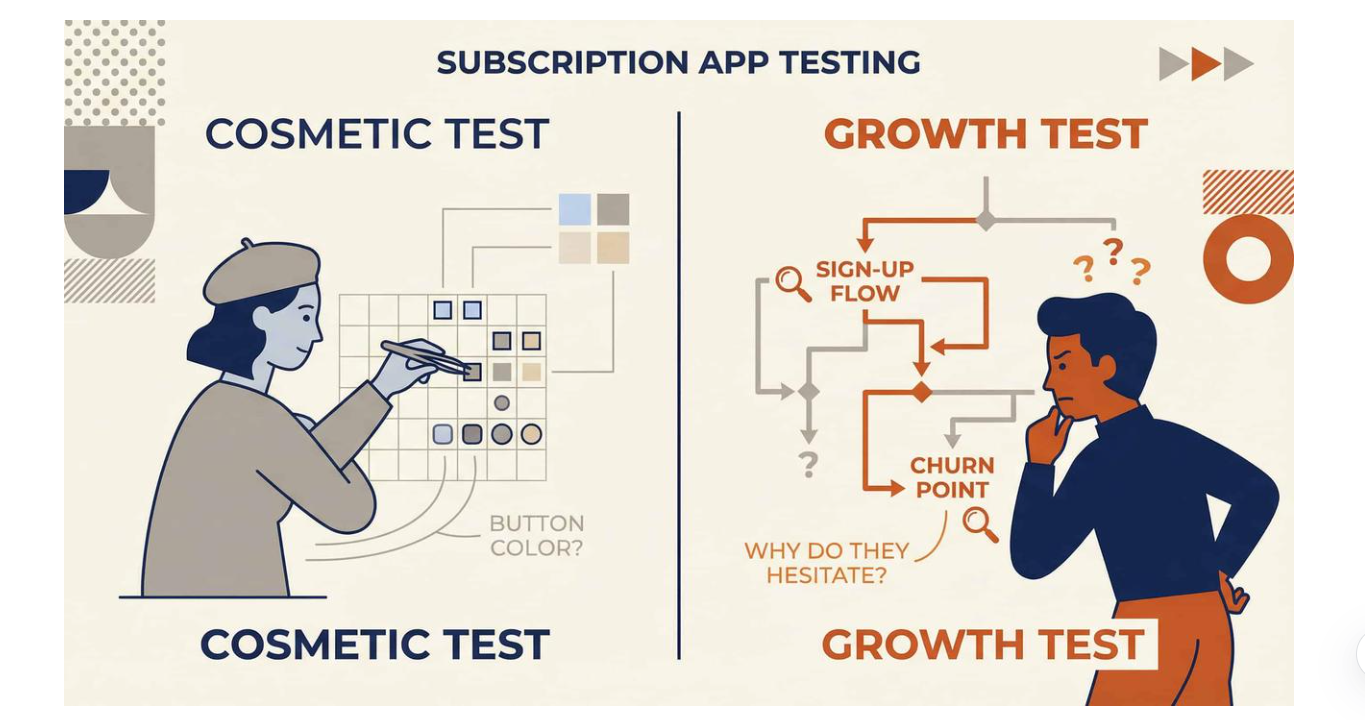

The Early Mistake I Made (And Many Teams Still Make)

At first, I thought A/B testing was simple.

- Change a button color.

- Move the price higher.

- Add a discount badge.

Then wait for conversion to go up.

Sometimes it worked. Often, it didn’t. And even when it did work, I couldn’t confidently explain why.

That’s when I realized something uncomfortable:

Most subscription A/B tests are cosmetic experiments, not growth experiments.

They optimize pixels—not decisions.

Why Subscription A/B Tests Are Especially Easy to Get Wrong

Subscription products are fundamentally different from one‑time‑purchase apps. When users subscribe, they’re not just buying a feature—they’re committing to:

- Repeated payments

- Habit change

- Long‑term trust

This makes subscription funnels fragile in ways many teams underestimate.

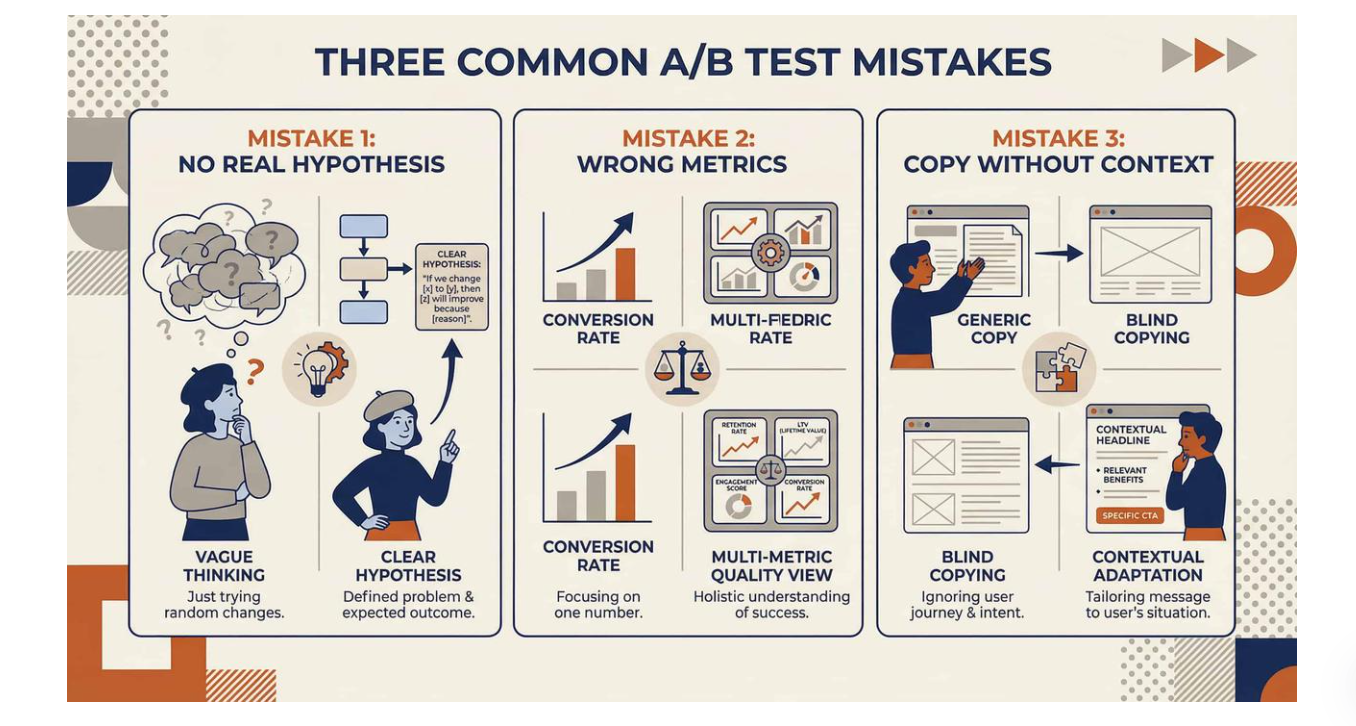

1. Teams Test Without a Real Hypothesis

Many tests start with:

“Let’s see if this converts better.”

That’s not a hypothesis. That’s curiosity.

A real hypothesis sounds like:

“If we show value before price, users who are unsure will feel safer starting a trial.”

Without this clarity, you can’t learn—only observe noise.
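One way to enforce this is to treat the hypothesis as a required record instead of a loose sentence. The sketch below is a minimal, hypothetical template (the `Hypothesis` class and its field names are illustrative, not taken from any specific tool). It refuses to produce a hypothesis statement until the change, the segment, the expected behavior, the doubt being addressed, and the primary metric are all filled in.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """Hypothetical template: every field must be filled before a test launches."""
    change: str    # what the variant does differently
    segment: str   # which users are expected to react
    expected: str  # the behavior change we predict
    doubt: str     # the user doubt the change is meant to remove
    metric: str    # the primary metric, chosen before launch

    def statement(self) -> str:
        # Reject blank fields so "let's see if this converts better"
        # cannot pass as a hypothesis.
        missing = [name for name, value in vars(self).items() if not value.strip()]
        if missing:
            raise ValueError(f"hypothesis is missing: {', '.join(missing)}")
        return (
            f"If we {self.change}, {self.segment} will {self.expected}. "
            f"Doubt addressed: {self.doubt} Primary metric: {self.metric}."
        )


example = Hypothesis(
    change="show value before price",
    segment="users who are unsure",
    expected="feel safer starting a trial",
    doubt="Should I trust this app?",
    metric="30-day revenue per paywall viewer",
)
print(example.statement())
```

The check is deliberately crude: it only catches empty fields, not vague ones, so someone still has to judge whether the named doubt is real.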

2. Teams Measure the Wrong Metric

Conversion rate alone is a trap. A paywall variant might:

- Increase trial starts

- Decrease paid retention

Together, these can leave you with lower ARPU (average revenue per user) after 30 days. Short‑term wins often hide long‑term losses. In subscription apps, revenue quality matters more than raw conversion.

3. Teams Copy Without Context

This is extremely common.

You see a top app using:

- Annual plan default

- Aggressive urgency

- Emotional headlines

So you copy it.

What’s missing? Context:

- Country

- Traffic source

- Product maturity

- User intent

The same pattern can outperform in one app and completely fail in another.

What High‑Performing Subscription Teams Do Differently

After studying how successful teams iterate on paywalls, onboarding, and pricing, a clear pattern emerges: they don’t treat A/B testing as a tactic; they treat it as a system.

A Better A/B Testing Framework for Subscription Apps

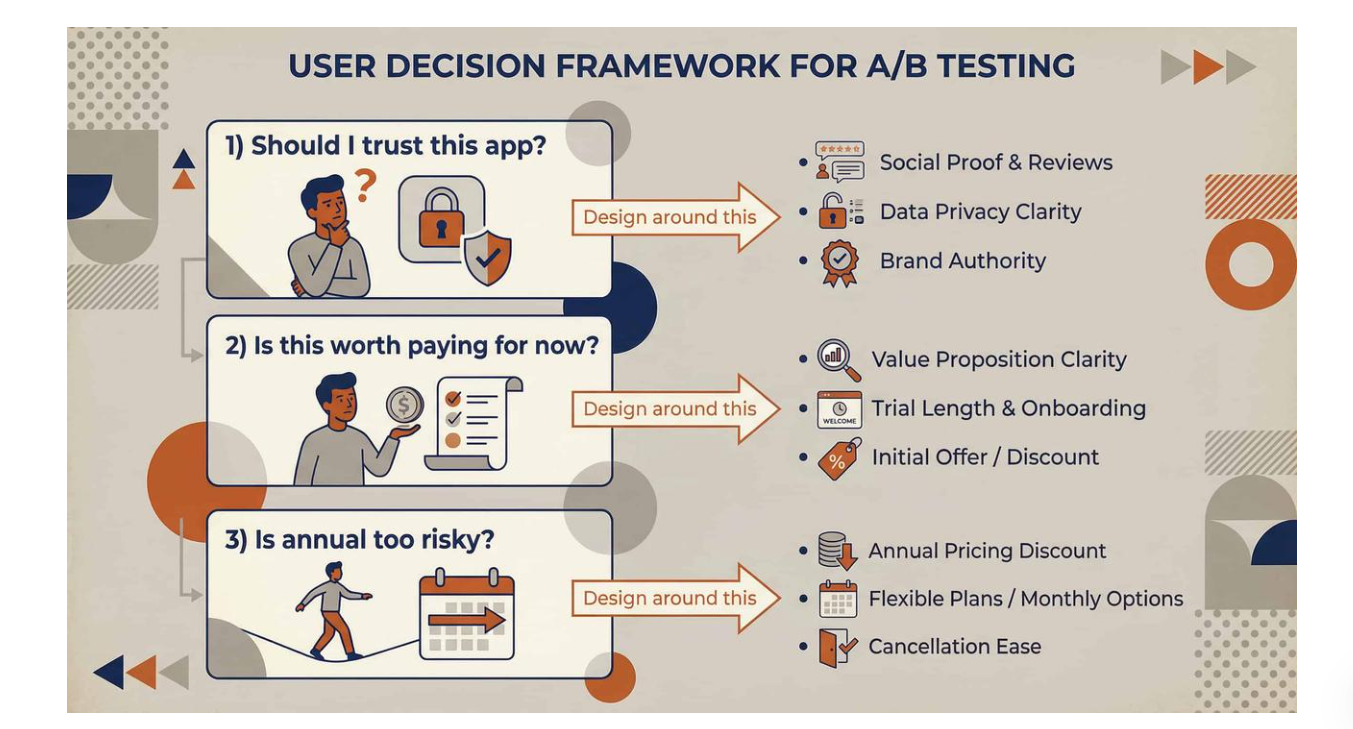

1. Start With the User Decision, Not the UI

Every meaningful A/B test should map to a decision moment:

- “Should I trust this app?”

- “Is this worth paying for now?”

- “Is annual too risky?”

Don’t ask “Which layout converts better?”

Ask “What doubt is blocking this user from committing?”

Design your variants around removing that doubt.

2. Test Fewer Things — But Deeper

Great subscription teams don’t run 20 shallow tests. They run fewer tests that touch:

- Pricing framing

- Value communication

- Risk perception

- Commitment timing

One well‑designed test can outperform ten random tweaks.
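One concrete reason many shallow tests mislead: every test judged at a fixed significance threshold carries some chance of a false win, and those chances accumulate. Assuming independent tests, a 5% threshold (a common convention, not something this article prescribes), and changes that truly have no effect, the chance that at least one test looks like a winner grows quickly with the number of tests:

```python
# Chance that at least one of k independent tests shows a "significant" win
# by luck alone, assuming none of the tested changes has any real effect.
def chance_of_false_win(k: int, alpha: float = 0.05) -> float:
    return 1 - (1 - alpha) ** k


for k in (1, 5, 20):
    print(f"{k:>2} test(s): {chance_of_false_win(k):.0%} chance of at least one false win")
```

At 20 tests the odds of at least one lucky "winner" are roughly two in three. Fewer, deeper tests do not remove this risk, but they shrink the count and make each result worth scrutinizing.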

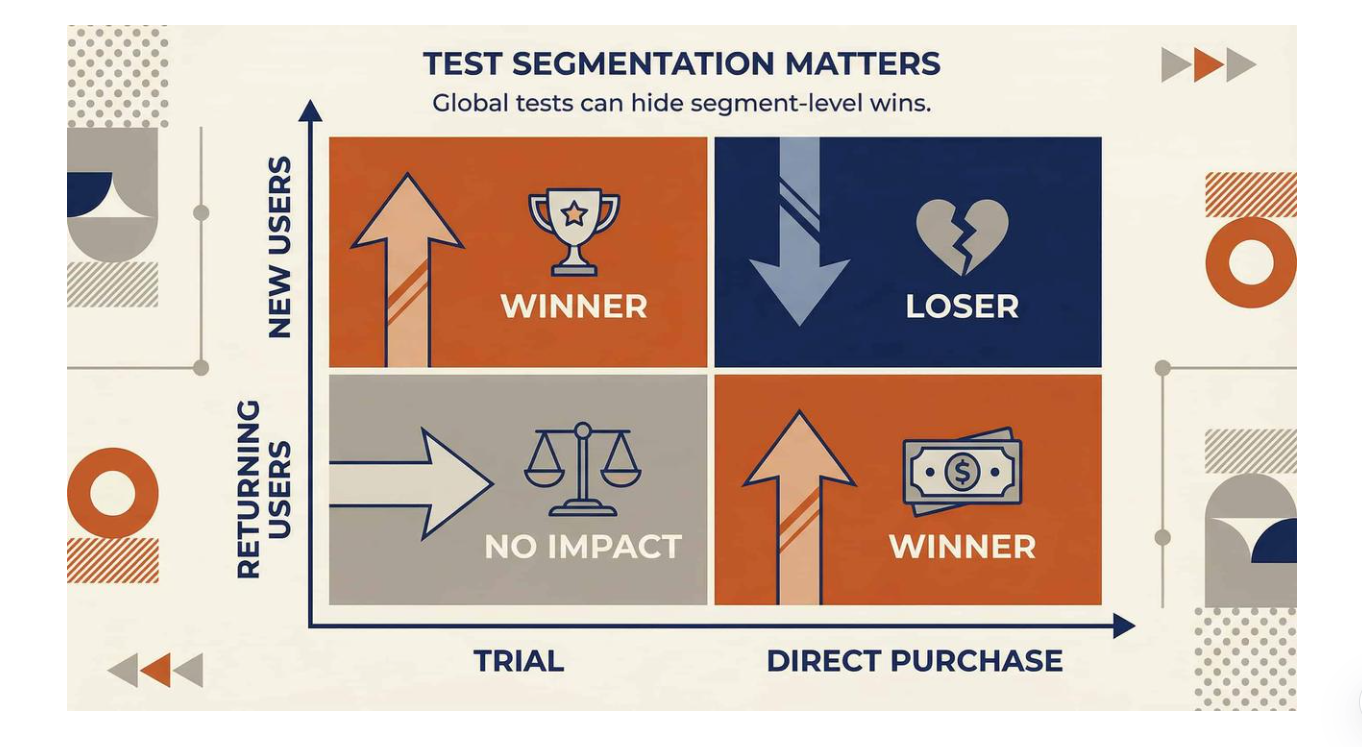

3. Segment Before You Test

A/B testing without segmentation is dangerous.

At minimum, separate:

- New users vs. returning users

- Trial users vs. direct‑purchase users

- High‑intent vs. low‑intent traffic

A variant that “loses” globally might win massively for one segment.
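Here is that effect with made-up numbers (the segments, counts, and the `conversion_by` helper are hypothetical): variant B converts worse overall, yet clearly better for new users.

```python
from collections import defaultdict

# Hypothetical results: (segment, variant, users, conversions). Illustrative only.
RESULTS = [
    ("new", "A", 1000, 50),
    ("new", "B", 1000, 70),
    ("returning", "A", 1000, 150),
    ("returning", "B", 1000, 120),
]


def conversion_by(rows, key):
    """Sum users and conversions per grouping key, then return the rate per key."""
    users = defaultdict(int)
    conversions = defaultdict(int)
    for segment, variant, n, c in rows:
        k = key(segment, variant)
        users[k] += n
        conversions[k] += c
    return {k: conversions[k] / users[k] for k in users}


overall = conversion_by(RESULTS, lambda seg, var: var)
per_segment = conversion_by(RESULTS, lambda seg, var: (seg, var))

print(overall)  # {'A': 0.1, 'B': 0.095}
for (segment, variant), rate in per_segment.items():
    print(f"{segment:<10} {variant}: {rate:.1%}")
```

In a real test each segment needs enough users on its own to be readable, and the segments should be chosen before launch rather than searched for afterwards, since slicing results many ways makes a lucky "winning" slice more likely.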

4. Accept That Some Tests Are Directional

Not every test needs statistical perfection. Some tests exist to:

- Validate intuition

- Kill bad ideas early

- Narrow future experiments

Waiting for perfect certainty often kills momentum.
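A directional test still benefits from an explicit decision rule. The sketch below is one simple option, not the author's method: a normal-approximation (Wald) interval for the difference in conversion rates at 90% confidence, read against a minimum lift worth pursuing. The 90% level, the `min_lift` value, and the helper names are assumptions you would set per test, and the Wald interval is only reasonable with fairly large samples.

```python
import math
from statistics import NormalDist


def diff_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                  confidence: float = 0.90) -> tuple[float, float]:
    """Wald interval for the conversion-rate difference (B minus A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.645 for 90%
    diff = p_b - p_a
    return diff - z * se, diff + z * se


def directional_verdict(low: float, high: float, min_lift: float) -> str:
    """Read the interval directionally instead of waiting for certainty."""
    if high < min_lift:
        return "drop"            # even the optimistic end is below the lift we care about
    if low > 0:
        return "promising"       # B is likely better; confirm with a deeper follow-up
    return "keep exploring"      # too wide to call either way


# Hypothetical numbers: 1,000 users per arm, 10% vs 8% conversion.
low, high = diff_interval(conv_a=100, n_a=1000, conv_b=80, n_b=1000)
print(f"B - A: [{low:+.3f}, {high:+.3f}] -> {directional_verdict(low, high, min_lift=0.01)}")
```

Here the interval still includes zero, so the result is not statistically significant. Yet even its optimistic end (about +0.1 percentage points) sits far below a 1-point lift, which is enough to kill the idea early.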

The Hidden Cost of Bad A/B Testing

Bad tests don’t just waste time. They:

- Teach the team the wrong lessons

- Reduce trust in data

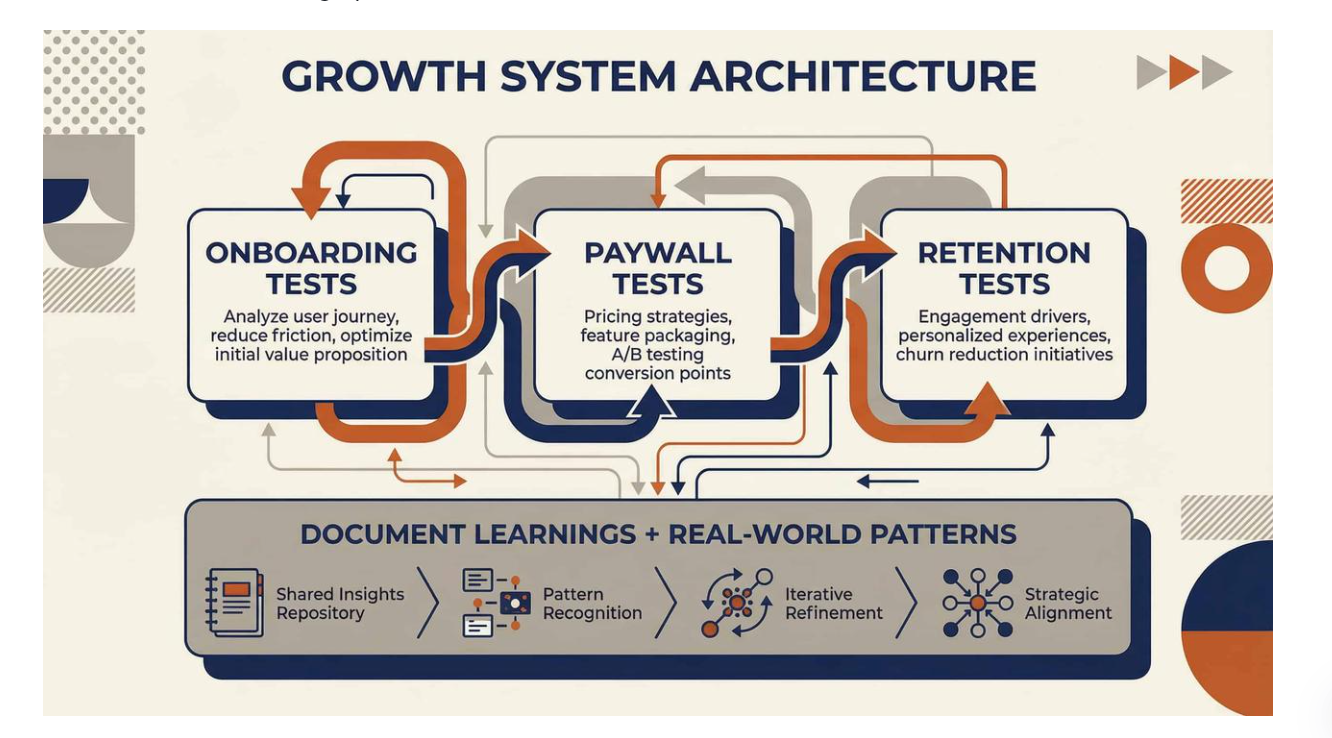

From Isolated Tests to a Growth System

The best subscription teams do three things consistently:

- Document learnings, not just results

- Connect tests across onboarding → paywall → retention

- Study real‑world patterns, not just their own app

This is where industry‑level observation becomes powerful. By analyzing how hundreds of subscription apps evolve their paywalls and pricing over time, you start to see:

- What actually moves revenue

- What only works in specific contexts

- What most teams copy incorrectly

Patterns emerge long before dashboards tell you the full story.
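Connecting tests through to retention is also the fix for the metric trap described earlier, where a variant wins on trial starts but loses on ARPU after 30 days. A minimal sketch with hypothetical numbers makes the reversal visible. The `VariantResult` fields, the flat monthly price, and counting only one month of revenue are all simplifying assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariantResult:
    """Hypothetical 30-day outcome for one paywall variant."""
    name: str
    viewers: int      # users who saw the paywall
    trials: int       # trial starts
    paid_day_30: int  # users paying 30 days later
    price: float      # monthly price, assumed identical across variants

    @property
    def trial_rate(self) -> float:
        return self.trials / self.viewers

    @property
    def revenue_per_viewer(self) -> float:
        # ARPU-style metric: 30-day revenue divided by everyone who saw the paywall,
        # so trials that never convert contribute nothing.
        return self.paid_day_30 * self.price / self.viewers


variants = [
    VariantResult("A", viewers=10_000, trials=800, paid_day_30=320, price=10.0),
    VariantResult("B", viewers=10_000, trials=1_000, paid_day_30=280, price=10.0),
]
for v in variants:
    print(f"{v.name}: trial rate {v.trial_rate:.1%}, "
          f"30-day revenue per viewer ${v.revenue_per_viewer:.2f}")

winner_by_trials = max(variants, key=lambda v: v.trial_rate).name
winner_by_revenue = max(variants, key=lambda v: v.revenue_per_viewer).name
print(winner_by_trials, winner_by_revenue)
```

In this made-up case, B "wins" a conversion-only test with 25% more trial starts but earns 12.5% less per viewer after 30 days, so judging the paywall test by downstream revenue picks A.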

Final Thought: A/B Testing Is a Means, Not the Goal

A/B testing won’t save a broken product.

It won’t replace clear value.

It won’t fix weak positioning.

But when done right, it becomes a compass—not a dice roll.

If you’re building a subscription app, stop asking:

“What should we test next?”

Start asking:

“What decision are we trying to improve?”

That shift alone will change your results.

Want to Learn From Real Subscription Experiments?

These insights come from analyzing thousands of real paywalls, onboarding flows, and pricing changes across subscription apps.

If you want to see how top apps actually test, iterate, and evolve their monetization strategies, PaywallPro gives you access to real‑world patterns—not guesses.

Explore how subscription apps really grow at PaywallPro.

This is just the beginning of the Weekly Growth Tactics series. More experiments are coming next week.