Spinlocks vs. Mutexes: When to Spin and When to Sleep

Source: Hacker News

Introduction

You’re staring at perf top showing 60 % CPU time in pthread_mutex_lock. Your latency is terrible. Someone suggests “just use a spinlock,” and suddenly your 16‑core server is pegged at 100 % doing nothing useful. This is the synchronization‑primitive trap: engineers often choose the wrong primitive because they don’t understand when each makes sense.

Spinlocks vs. Mutexes

| Property | Mutex | Spinlock |
|---|---|---|
| Behavior | Sleeps (blocks in the kernel) under contention. | Busy‑waits (spins) in userspace until the lock is free. |
| Cost when uncontended | ≈25–50 ns: one atomic compare‑and‑swap in userspace, no syscall (fast path). | About the same: one atomic LOCK CMPXCHG on x86, no cheaper than the mutex fast path. |
| Cost when contended | Syscall (futex(FUTEX_WAIT)) ≈ 500 ns + context switch (3–5 µs). | 100 % CPU while looping; each failed attempt bounces the cache line between cores. |
| Preemptible contexts | Safe: a waiting thread can be descheduled. | Dangerous: if the holder is preempted, waiters can burn up to a full timeslice. |
| Priority inversion | Bounded by priority‑inheritance (PI) mutexes, which temporarily boost the holder's priority. | Not addressed: a high‑priority thread may spin forever while the low‑priority holder never runs (e.g. both on one core under SCHED_FIFO). |
| False sharing | Affected: every atomic on the lock word invalidates its cache line on other cores. | Affected, and worse under contention because waiters keep touching the line; pad or align each lock to its own cache line. |
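
The mutex column's cheap fast path and sleeping slow path can be illustrated with a minimal sketch in the spirit of Ulrich Drepper's three‑state futex mutex ("Futexes Are Tricky"). It is Linux‑only and hypothetical: `futex_mutex`, `futex_mutex_lock`, `futex_mutex_unlock`, and the demo helpers are illustrative names, not glibc's implementation, and the code assumes `atomic_int` has the same layout as `int`, as it does with GCC and Clang on Linux.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <linux/futex.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Hypothetical three-state futex mutex:
 * 0 = unlocked, 1 = locked with no waiters, 2 = locked and maybe waiters.
 * Assumes atomic_int has the same layout as int (GCC/Clang on Linux). */
typedef struct { atomic_int state; } futex_mutex;

static long futex_call(atomic_int *addr, int op, int val) {
    return syscall(SYS_futex, (int *)addr, op, val, NULL, NULL, 0);
}

static void futex_mutex_lock(futex_mutex *m) {
    int c = 0;
    /* Fast path: a single CAS in userspace, no syscall. */
    if (atomic_compare_exchange_strong(&m->state, &c, 1))
        return;
    /* Slow path: advertise a waiter (state 2), then sleep in the kernel. */
    if (c != 2)
        c = atomic_exchange(&m->state, 2);
    while (c != 0) {
        /* FUTEX_WAIT returns immediately if state is no longer 2. */
        futex_call(&m->state, FUTEX_WAIT_PRIVATE, 2);
        c = atomic_exchange(&m->state, 2);
    }
}

static void futex_mutex_unlock(futex_mutex *m) {
    /* Old value 2 means a thread may be asleep: wake exactly one. */
    if (atomic_exchange(&m->state, 0) == 2)
        futex_call(&m->state, FUTEX_WAKE_PRIVATE, 1);
}

/* Demo: several threads increment a shared counter under the mutex. */
#define DEMO_THREADS 4
#define DEMO_ITERS 100000

static futex_mutex demo_lock; /* static zero-init = unlocked */
static long demo_counter;

static void *demo_worker(void *arg) {
    (void)arg;
    for (int i = 0; i < DEMO_ITERS; i++) {
        futex_mutex_lock(&demo_lock);
        demo_counter++;
        futex_mutex_unlock(&demo_lock);
    }
    return NULL;
}

/* Returns the final counter: DEMO_THREADS * DEMO_ITERS if locking works. */
static long futex_mutex_demo(void) {
    pthread_t t[DEMO_THREADS];
    demo_counter = 0;
    for (int i = 0; i < DEMO_THREADS; i++) {
        int rc = pthread_create(&t[i], NULL, demo_worker, NULL);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < DEMO_THREADS; i++)
        pthread_join(t[i], NULL);
    return demo_counter;
}
```

The third state is the key design choice: unlock only pays for a `FUTEX_WAKE` syscall when the lock word says a waiter may exist, so an uncontended lock/unlock pair never enters the kernel.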

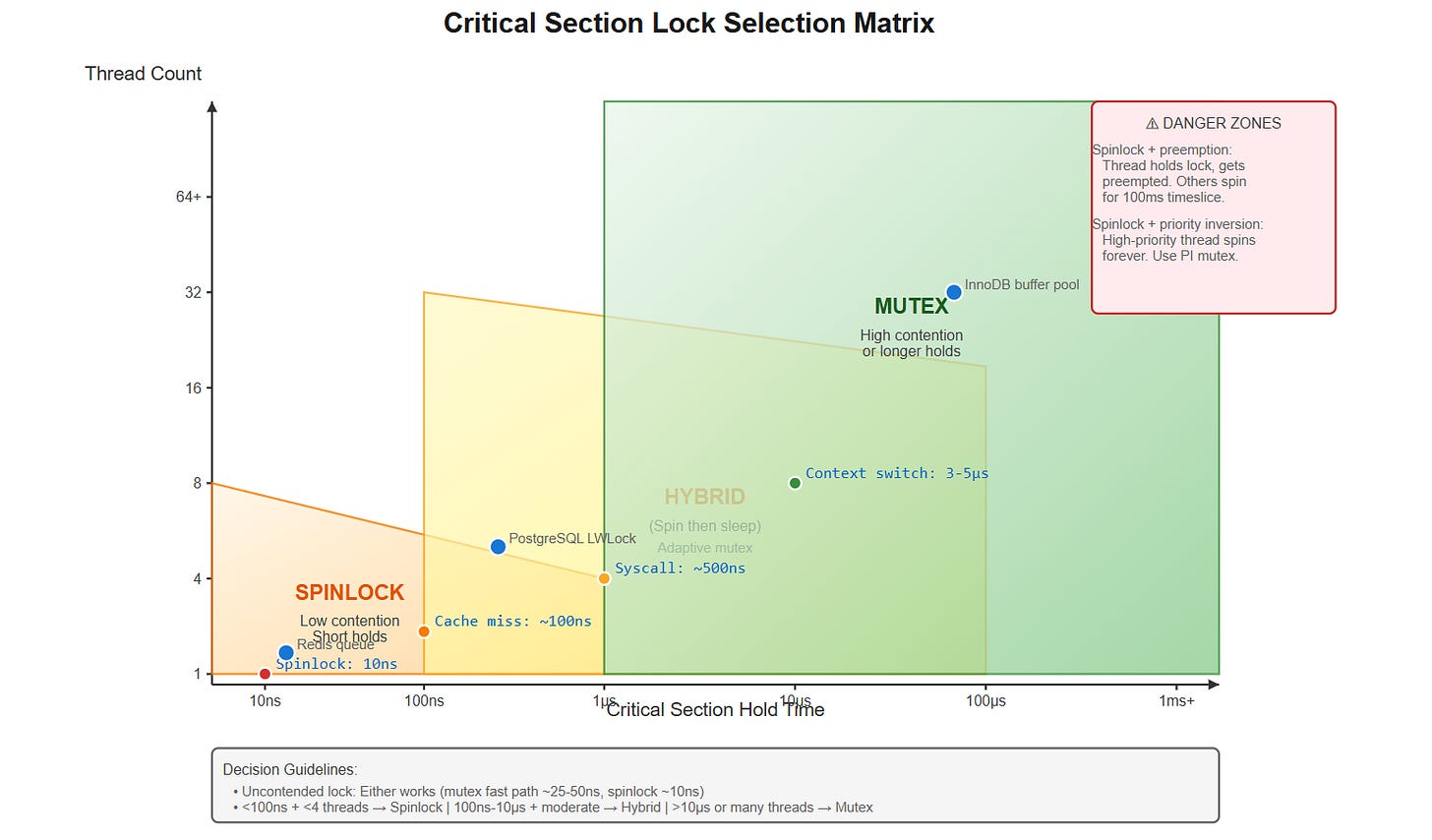

When to Use Which Primitive

| Critical‑section duration | Contention level | Recommended primitive |
|---|---|---|
| **> 10 µs**, or any duration under high contention | High | Regular mutex: let the scheduler handle sleeping and waking. |
| Real‑time requirements | Any | Priority‑inheritance mutex on a PREEMPT_RT kernel. |
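
The real‑time row relies on priority inheritance, which POSIX exposes as a mutex attribute. Below is a minimal sketch, assuming glibc on Linux; `init_pi_mutex` and `pi_mutex_demo` are hypothetical helper names. It does not set up a real‑time scheduling policy such as SCHED_FIFO, which is where priority inheritance actually matters.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <pthread.h>

/* Hypothetical helper: initialize a priority-inheritance mutex.
 * Returns 0 on success or an errno value (e.g. ENOTSUP if the
 * system lacks PI support). */
static int init_pi_mutex(pthread_mutex_t *m) {
    pthread_mutexattr_t attr;
    int rc = pthread_mutexattr_init(&attr);
    if (rc != 0)
        return rc;
    /* While higher-priority threads are blocked on m, the owner runs at
     * the highest of their priorities, so it cannot be starved. */
    rc = pthread_mutexattr_setprotocol(&attr, PTHREAD_PRIO_INHERIT);
    if (rc == 0)
        rc = pthread_mutex_init(m, &attr);
    pthread_mutexattr_destroy(&attr);
    return rc;
}

/* Demo: create, lock, unlock, and destroy a PI mutex; 0 on success. */
static int pi_mutex_demo(void) {
    pthread_mutex_t m;
    int rc = init_pi_mutex(&m);
    if (rc != 0)
        return rc;
    rc = pthread_mutex_lock(&m);
    if (rc == 0)
        rc = pthread_mutex_unlock(&m);
    pthread_mutex_destroy(&m);
    return rc;
}
```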

Profiling Tips

- CPU vs. context switches: run `perf stat -e context-switches,cache-misses`.
  - High context‑switch count with low CPU usage → threads are sleeping on a contended mutex, and sleep/wake overhead may dominate.
  - High cache misses with 100 % CPU → lock contention or false sharing.
- Syscall count: use `strace -c` to count `futex()` calls. Millions per second indicate a hot, contended lock that might benefit from sharding or lock‑free techniques.
- Voluntary vs. involuntary switches: check the `voluntary_ctxt_switches` and `nonvoluntary_ctxt_switches` fields in /proc//status. Many involuntary switches in threads that hold spinlocks suggest the holders are being preempted.
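
The switch counters from the last tip can also be sampled from inside a program, for example before and after a workload, to compare deltas. A minimal Linux‑only sketch, assuming the kernel's `/proc` status format; `read_ctxt_switches` is a hypothetical helper name. Per‑thread counts live under `/proc/self/task/<tid>/status`.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical helper: read voluntary and involuntary context-switch
 * counts from a /proc status file. Returns 0 on success, -1 on error. */
static int read_ctxt_switches(const char *path, long *voluntary, long *involuntary) {
    FILE *f = fopen(path, "r");
    if (!f)
        return -1;
    char line[256];
    int found = 0;
    *voluntary = *involuntary = -1;
    while (fgets(line, sizeof line, f)) {
        /* Lines look like "voluntary_ctxt_switches:\t123". */
        if (sscanf(line, "voluntary_ctxt_switches: %ld", voluntary) == 1)
            found++;
        else if (sscanf(line, "nonvoluntary_ctxt_switches: %ld", involuntary) == 1)
            found++;
    }
    fclose(f);
    return found == 2 ? 0 : -1;
}
```

Calling it as `read_ctxt_switches("/proc/self/status", &vol, &invol)` reads the calling process's entry.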

Real‑World Examples

- Redis: uses spinlocks for its tiny job queue (very short critical sections).

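A minimal spinlock micro‑benchmark in C: `NUM_THREADS` threads each increment a shared counter `ITERATIONS` times, holding the lock across a short busy loop that simulates work.

```c
#include <pthread.h>
#include <stdatomic.h>
#include <stdio.h>

#define NUM_THREADS 4
#define ITERATIONS 1000000
#define HOLD_ITERS 100 /* busy-loop iterations inside the lock (simulated work, not calibrated to ns) */

typedef struct {
    atomic_int lock; /* 0 = free, 1 = held */
    long counter;    /* protected by lock */
} spinlock_t;

static void spinlock_acquire(spinlock_t *s) {
    for (;;) {
        int expected = 0;
        /* Try to take the lock; acquire ordering keeps the critical
           section from moving before this point. */
        if (atomic_compare_exchange_weak_explicit(&s->lock, &expected, 1,
                memory_order_acquire, memory_order_relaxed))
            return;
        /* Test-and-test-and-set: spin on a plain load so waiters share the
           cache line read-only instead of bouncing it with failed CASes. */
        while (atomic_load_explicit(&s->lock, memory_order_relaxed) != 0) {
        }
    }
}

static void spinlock_release(spinlock_t *s) {
    atomic_store_explicit(&s->lock, 0, memory_order_release);
}

static void *worker_thread(void *arg) {
    spinlock_t *s = (spinlock_t *)arg;
    for (long i = 0; i < ITERATIONS; i++) {
        spinlock_acquire(s);
        s->counter++;
        for (volatile int j = 0; j < HOLD_ITERS; j++) {
            /* simulated work while holding the lock */
        }
        spinlock_release(s);
    }
    return NULL;
}

int main(void) {
    spinlock_t s = { .lock = 0, .counter = 0 };
    pthread_t threads[NUM_THREADS];
    for (int i = 0; i < NUM_THREADS; i++)
        pthread_create(&threads[i], NULL, worker_thread, &s);
    for (int i = 0; i < NUM_THREADS; i++)
        pthread_join(threads[i], NULL);
    printf("counter = %ld (expected %ld)\n", s.counter, (long)NUM_THREADS * ITERATIONS);
    return 0;
}
```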
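
For comparison, the same workload (same thread count, iteration count, and hold loop) can be run with a standard `pthread_mutex_t`, so waiters sleep in the kernel instead of spinning. This is a hypothetical counterpart written for illustration; `mutex_counter`, `mutex_worker`, and `run_mutex_benchmark` are illustrative names.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define NUM_THREADS 4
#define ITERATIONS 1000000
#define HOLD_ITERS 100 /* busy-loop iterations inside the lock, not ns */

typedef struct {
    pthread_mutex_t lock;
    long counter; /* protected by lock */
} mutex_counter;

static void *mutex_worker(void *arg) {
    mutex_counter *mc = arg;
    for (long i = 0; i < ITERATIONS; i++) {
        pthread_mutex_lock(&mc->lock); /* sleeps via futex if contended */
        mc->counter++;
        for (volatile int j = 0; j < HOLD_ITERS; j++) {
            /* simulated work while holding the lock */
        }
        pthread_mutex_unlock(&mc->lock);
    }
    return NULL;
}

/* Runs the workload and returns the final counter value. */
static long run_mutex_benchmark(void) {
    mutex_counter mc = { .counter = 0 };
    pthread_t threads[NUM_THREADS];
    int rc = pthread_mutex_init(&mc.lock, NULL);
    assert(rc == 0);
    for (int i = 0; i < NUM_THREADS; i++) {
        rc = pthread_create(&threads[i], NULL, mutex_worker, &mc);
        assert(rc == 0);
    }
    (void)rc;
    for (int i = 0; i < NUM_THREADS; i++)
        pthread_join(threads[i], NULL);
    pthread_mutex_destroy(&mc.lock);
    return mc.counter;
}
```

Which version is faster depends on hold time, thread count, and core count, so time both on the target machine, for example with `perf stat`.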

Illustrations