Debugging Node.js Out-of-Memory Crashes: A Practical, Step-by-Step Story

Source: Dev.to

How we tracked down a subtle memory leak that kept taking our production servers down—and how we fixed it for good.

The OOM That Ruined a Monday Morning

Everything looked normal—until alerts started firing. One by one, our Node.js API instances were crashing with a familiar but dreaded message:

```
FATAL ERROR: Reached heap limit Allocation failed - JavaScript heap out of memory
```

The pattern was frustratingly consistent:

- Servers ran fine for hours

- Traffic increased

- Memory climbed steadily

- Then 💥 — a crash

If you’ve ever dealt with Node.js in production, you already know what this smells like: a memory leak.

In this post, I’ll walk through exactly how we diagnosed the problem, what signals mattered most, and the simple fix that stabilized memory under heavy load.

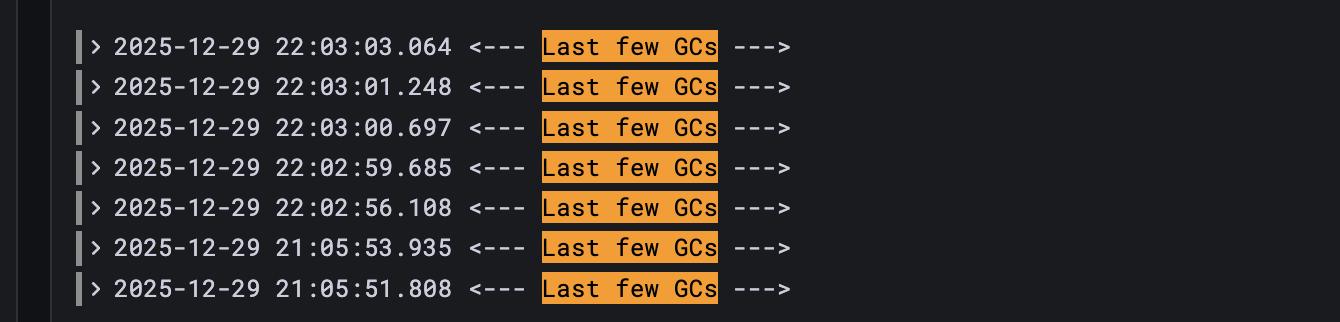

Reading the GC Tea Leaves

Before touching any code, we looked closely at the garbage‑collector output from V8:

```
Mark-Compact (reduce) 646.7 (648.5) -> 646.6 (648.2) MB
```

At first glance, it looks harmless. But the key insight was this:

GC freed almost nothing.

From ~646.7 MB to ~646.6 MB – essentially zero.

What that tells us

- GC is running frequently and expensively.

- Objects are still strongly referenced.

- Memory is not eligible for collection.

In short: this is not “GC being slow”—this is leaked or over‑allocated memory.
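You can make the "freed almost nothing" check mechanical with a small parser that pulls the before/after heap sizes out of a `--trace-gc` line. This is a sketch that assumes the line shape quoted above. V8's trace output is a debugging aid, not a stable format, so the regular expression may need adjusting for other Node.js versions. The names `parseGcLine` and `minFreedPct`, and the 1% threshold, are illustrative assumptions.

```javascript
// Matches the heap-size portion of a --trace-gc line such as:
//   "Mark-Compact (reduce) 646.7 (648.5) -> 646.6 (648.2) MB"
// Groups: GC type, used-before, committed-before, used-after, committed-after.
// Assumption: the format shown above; other V8 versions may print it differently.
const GC_LINE =
  /(Scavenge|Mark-Compact|Mark-Sweep)\D*?([\d.]+) \(([\d.]+)\) -> ([\d.]+) \(([\d.]+)\) MB/i;

// Hypothetical helper: returns null for lines that are not GC events.
function parseGcLine(line, minFreedPct = 1) {
  const m = GC_LINE.exec(line);
  if (!m) return null;
  const beforeMB = Number(m[2]);
  const afterMB = Number(m[4]);
  const freedMB = beforeMB - afterMB;
  const freedPct = (freedMB / beforeMB) * 100;
  return {
    type: m[1],
    beforeMB,
    afterMB,
    freedMB: Number(freedMB.toFixed(1)),
    freedPct: Number(freedPct.toFixed(2)),
    // A major GC that reclaims less than minFreedPct percent is the red flag described above.
    ineffective: m[1].toLowerCase() !== 'scavenge' && freedPct < minFreedPct,
  };
}

const sample = 'Mark-Compact (reduce) 646.7 (648.5) -> 646.6 (648.2) MB';
console.log(parseGcLine(sample));
```

Piping `node --trace-gc server.js` through a line reader and calling `parseGcLine` on each line is one way to flag runs of ineffective major GCs automatically.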

Preparing the Battlefield

1. Confirm the Heap Limit

First, verify how much memory Node.js is actually allowed to use:

```javascript
const v8 = require('v8');

console.log(
  'Heap limit:',
  Math.round(v8.getHeapStatistics().heap_size_limit / 1024 / 1024),
  'MB'
);
```

This removes guesswork, which is especially important in containers or cloud runtimes.
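The same `v8.getHeapStatistics()` call also reports how much of that limit is currently in use, so a one-off check can show the remaining headroom. This is a minimal sketch. `heapHeadroom` is a hypothetical helper name, while `used_heap_size` and `heap_size_limit` are fields that `v8.getHeapStatistics()` returns.

```javascript
const v8 = require('v8');

const toMB = (bytes) => Math.round(bytes / 1024 / 1024);

// Hypothetical helper: how close is the V8 heap to its configured limit?
function heapHeadroom() {
  const { used_heap_size: used, heap_size_limit: limit } = v8.getHeapStatistics();
  return {
    usedMB: toMB(used),
    limitMB: toMB(limit),
    // Percentage of the limit currently in use, rounded to one decimal place.
    usedPct: Math.round((used / limit) * 1000) / 10,
  };
}

const h = heapHeadroom();
console.log(`Heap: ${h.usedMB} / ${h.limitMB} MB (${h.usedPct}% of limit)`);
```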

2. Turn on GC Tracing

Watch GC behavior in real time:

```bash
node --trace-gc server.js
```

You’ll see:

- Scavenge → minor GC (young objects)

- Mark‑Sweep / Mark‑Compact → major GC (old generation)

Frequent major GCs with poor cleanup are a huge red flag.
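If restarting with `--trace-gc` is inconvenient, Node.js can also report GC events from inside the process through the `perf_hooks` module. The sketch below assumes Node.js 16 or later, where the GC kind is exposed on `entry.detail.kind`, and falls back to the older `entry.kind`. The label strings and the `startGcObserver` / `gcKindLabel` names are illustrative.

```javascript
const { PerformanceObserver, constants } = require('perf_hooks');

// Map perf_hooks GC kinds to roughly the names --trace-gc prints.
const GC_KINDS = {
  [constants.NODE_PERFORMANCE_GC_MINOR]: 'Scavenge (minor)',
  [constants.NODE_PERFORMANCE_GC_MAJOR]: 'Mark-Sweep/Mark-Compact (major)',
  [constants.NODE_PERFORMANCE_GC_INCREMENTAL]: 'Incremental marking',
  [constants.NODE_PERFORMANCE_GC_WEAKCB]: 'Weak callbacks',
};

function gcKindLabel(kind) {
  return GC_KINDS[kind] || `unknown (${kind})`;
}

// Hypothetical helper: log every GC event with its kind and pause duration.
function startGcObserver(log = console.log) {
  const obs = new PerformanceObserver((list) => {
    for (const entry of list.getEntries()) {
      // Node 16+ puts the kind on entry.detail; older versions used entry.kind.
      const kind = entry.detail ? entry.detail.kind : entry.kind;
      log(`[GC] ${gcKindLabel(kind)} took ${entry.duration.toFixed(2)} ms`);
    }
  });
  obs.observe({ entryTypes: ['gc'] });
  return obs;
}
```

Call `startGcObserver()` once at startup and `disconnect()` the returned observer when you are done. Many back-to-back major entries under load are the in-process equivalent of the red flag above.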

3. Shrink the Heap (On Purpose)

Instead of waiting hours for production crashes, force the issue locally:

```bash
node --max-old-space-size=128 server.js
```

A smaller heap makes memory problems surface fast, often in minutes.
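To confirm the flag actually took effect, start a throwaway child process with it and print the limit it reports. The reported `heap_size_limit` typically comes out a little above the flag value, because it also accounts for the young generation. This is a minimal sketch, and `heapLimitWithFlag` is a hypothetical helper name. The same flag can also be passed through the `NODE_OPTIONS` environment variable when the start command cannot be edited.

```javascript
const { execFileSync } = require('child_process');

// Hypothetical helper: start a short-lived Node.js child with the given
// --max-old-space-size (in MB) and return the heap limit it reports, in MB.
function heapLimitWithFlag(maxOldSpaceMB) {
  const out = execFileSync(process.execPath, [
    `--max-old-space-size=${maxOldSpaceMB}`,
    '-e',
    "process.stdout.write(String(require('v8').getHeapStatistics().heap_size_limit))",
  ]);
  return Math.round(Number(out.toString()) / 1024 / 1024);
}

console.log('Heap limit with --max-old-space-size=128:', heapLimitWithFlag(128), 'MB');
```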

4. Reproduce with Load

Write a simple concurrent load script to mimic real traffic. Under load, memory climbs steadily and never comes back down.

At this point, you have a reliable reproduction. Time to hunt the leak.

Load Test Script for Memory Testing

To reliably reproduce the memory-leak issue locally (instead of waiting for real traffic), we use a tiny load-test template. The script:

- has no external dependencies, only the built-in `http` module

- lets you set the concurrency from the command line

- fully consumes each response so sockets are released and memory usage is accurate

- is meant for GC / heap-behavior investigation, not for performance benchmarking

Usage

```bash
node load-test.js [concurrent] [endpoint]

# Example: 100 parallel requests to the "data" endpoint
node load-test.js 100 data
```

Load-Test Template

```javascript
/**
 * Load Test Script for Memory Testing
 * Usage: node load-test.js [concurrent] [endpoint]
 * Example: node load-test.js 100 data
 */
const http = require('http');

const CONFIG = {
  hostname: 'localhost',
  port: 3000,
  endpoints: {
    data: {
      path: '/api/data',
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
      },
      body: JSON.stringify({
        items: ['sample_item'],
        userContext: {
          userId: 'test-user',
          sessionId: 'test-session',
        },
      }),
    },
    // Add more endpoint definitions here if needed
  },
};

const CONCURRENT = parseInt(process.argv[2], 10) || 50;
const ENDPOINT = process.argv[3] || 'data';
const endpointConfig = CONFIG.endpoints[ENDPOINT];

if (!endpointConfig) {
  console.error(
    `Unknown endpoint: ${ENDPOINT}. Available: ${Object.keys(
      CONFIG.endpoints,
    ).join(', ')}`,
  );
  process.exit(1);
}

/**
 * Sends a single HTTP request and resolves with a result object.
 * @param {number} requestId - Identifier for logging/debugging.
 * @returns {Promise<Object>}
 */
const makeRequest = (requestId) => {
  return new Promise((resolve) => {
    const startTime = Date.now();
    const options = {
      hostname: CONFIG.hostname,
      port: CONFIG.port,
      path: endpointConfig.path,
      method: endpointConfig.method,
      headers: endpointConfig.headers,
    };

    const req = http.request(options, (res) => {
      // Consume the response body so the socket can be reused.
      res.resume();
      res.on('end', () => {
        const success = res.statusCode >= 200 && res.statusCode < 300;
        resolve({
          requestId,
          success,
          status: res.statusCode,
          duration: Date.now() - startTime,
        });
      });
    });

    // Handle network / request errors.
    req.on('error', (err) => {
      resolve({
        requestId,
        success: false,
        duration: Date.now() - startTime,
        error: err.message,
      });
    });

    // Send the request body (if any) and finish the request.
    if (endpointConfig.body) {
      req.write(endpointConfig.body);
    }
    req.end();
  });
};

/**
 * Fires `CONCURRENT` requests in parallel and prints a short summary.
 */
const runLoadTest = async () => {
  const promises = [];
  for (let i = 0; i < CONCURRENT; i++) {
    promises.push(makeRequest(i));
  }

  const results = await Promise.all(promises);
  const successes = results.filter((r) => r.success).length;
  const failures = results.length - successes;

  console.log(`✅ Completed ${results.length} requests`);
  console.log(` • succeeded: ${successes}`);
  console.log(` • failed   : ${failures}`);
};

runLoadTest().catch((err) => {
  console.error('Unexpected error:', err);
  process.exit(1);
});
```

With this script in place, you can quickly spin up a controlled load, attach whatever instrumentation you need (e.g., `node --inspect`, `clinic heapprofiler`, custom `process.memoryUsage()` logs), and pinpoint the code path responsible for the heap growth. In our case, the trail led to a small helper that rebuilt a configuration object on every call. Once that allocation moved out of the hot path, the heap stabilized and the production crashes disappeared.
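Heap snapshots are the other common instrument. Capture one before and one after a load run, then load both into the Memory tab of Chrome DevTools and use the Comparison view to see which object types grew. The sketch below uses `v8.writeHeapSnapshot()`, available since Node.js 11.13. The `snapshot` helper name and the file-naming scheme are illustrative. According to the Node.js docs, writing a snapshot blocks the event loop and needs roughly twice the current heap size in memory, so it is best done against a local reproduction rather than a struggling production instance.

```javascript
const v8 = require('v8');
const path = require('path');
const os = require('os');

// Hypothetical helper: write a .heapsnapshot file and return its path.
// This blocks the event loop while the snapshot is being written.
function snapshot(label, dir = os.tmpdir()) {
  const file = path.join(dir, `${label}-${process.pid}-${Date.now()}.heapsnapshot`);
  return v8.writeHeapSnapshot(file);
}

// Typical flow: snapshot, run the load test, snapshot again, then compare
// the two files in Chrome DevTools (Memory tab, Comparison view).
const before = snapshot('before-load');
console.log('Wrote', before);
```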

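For longer investigations, the template can be extended. For example, you can add a per-request timeout inside the `makeRequest` promise, just before the request body is sent:

```javascript
// Timeout handling (example): place inside makeRequest's Promise executor,
// after the req.on('error', ...) handler.
const timer = setTimeout(() => {
  req.destroy();
  resolve({
    requestId,
    success: false,
    duration: Date.now() - startTime,
    error: 'Timeout',
  });
}, 30000);

// Clear the timer once the request finishes, so it cannot fire late
// or keep the process alive for an extra 30 seconds.
req.on('close', () => clearTimeout(timer));

if (endpointConfig.body) {
  req.write(endpointConfig.body);
}
req.end();
```

You can also swap `runLoadTest` for a version that prints a fuller report:

```javascript
const runLoadTest = async () => {
  console.log('='.repeat(60));
  console.log('MEMORY LOAD TEST');
  console.log('='.repeat(60));
  console.log(`Endpoint: ${endpointConfig.method} ${endpointConfig.path}`);
  console.log(`Concurrent Requests: ${CONCURRENT}`);
  console.log(`Target: ${CONFIG.hostname}:${CONFIG.port}`);
  console.log('='.repeat(60));
  console.log('\nStarting load test...\n');

  const startTime = Date.now();
  const promises = Array.from({ length: CONCURRENT }, (_, i) =>
    makeRequest(i + 1)
  );
  const results = await Promise.all(promises);
  const totalTime = Date.now() - startTime;

  const successful = results.filter((r) => r.success);
  const failed = results.filter((r) => !r.success);
  const durations = successful.map((r) => r.duration);
  const avgDuration = durations.length
    ? Math.round(durations.reduce((a, b) => a + b, 0) / durations.length)
    : 0;
  // Guard against Infinity / -Infinity when every request failed.
  const minDuration = durations.length ? Math.min(...durations) : 0;
  const maxDuration = durations.length ? Math.max(...durations) : 0;

  console.log('='.repeat(60));
  console.log('RESULTS');
  console.log('='.repeat(60));
  console.log(`Total Requests: ${CONCURRENT}`);
  console.log(`Successful: ${successful.length}`);
  console.log(`Failed: ${failed.length}`);
  console.log(`Total Time: ${totalTime} ms`);
  console.log(`Avg Response Time: ${avgDuration} ms`);
  console.log(`Min Response Time: ${minDuration} ms`);
  console.log(`Max Response Time: ${maxDuration} ms`);
  // Math.max(totalTime, 1) avoids dividing by zero on very fast runs.
  console.log(
    `Requests/sec: ${Math.round(CONCURRENT / (Math.max(totalTime, 1) / 1000))}`
  );
  console.log('='.repeat(60));

  if (failed.length) {
    console.log('\nFailed requests:');
    failed.slice(0, 5).forEach((r) => {
      // Non-2xx responses carry a status code instead of an error message.
      console.log(`  Request #${r.requestId}: ${r.error || `HTTP ${r.status}`}`);
    });
  }

  console.log('\n>>> Check server logs for [MEM] entries <<<');
};
```

On the server side, a small helper logs heap usage at points of interest, such as the start of a request handler:

```javascript
// Server-side helper: log current V8 heap usage with a label.
const logMemory = (label) => {
  const { heapUsed } = process.memoryUsage();
  console.log(`[MEM] ${label}: ${Math.round(heapUsed / 1024 / 1024)} MB`);
};
```

Observed Log Output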

Under load, the logs told a clear story:

```
processData START: 85 MB
processData START: 92 MB
processData START: 99 MB
processData START: 107 MB
```

Memory kept climbing, request after request. Eventually, all roads led to one innocent-looking helper function.
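A steady climb like this can be flagged automatically from the memory samples. The sketch below fits a least-squares slope, in MB per sample, to the four readings above. The `heapSlope` name and the idea of alerting on a sustained positive slope are illustrative assumptions. In practice, four samples are far too few to conclude anything, so a real check would use many readings taken after warm-up.

```javascript
// Least-squares slope of heap readings (MB) against sample index.
// A persistently positive slope over many samples suggests memory is being retained.
function heapSlope(samplesMB) {
  const n = samplesMB.length;
  if (n < 2) return 0;
  const meanX = (n - 1) / 2;
  const meanY = samplesMB.reduce((a, b) => a + b, 0) / n;
  let num = 0;
  let den = 0;
  samplesMB.forEach((y, x) => {
    num += (x - meanX) * (y - meanY);
    den += (x - meanX) ** 2;
  });
  return num / den;
}

// Readings from the log above (processData START).
const slope = heapSlope([85, 92, 99, 107]);
console.log(`Heap trend: ${slope.toFixed(2)} MB per sample`); // → Heap trend: 7.30 MB per sample
```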

The Real Culprit

```javascript
const getItemAssets = (itemType) => {
  const assetConfig = {
    item_a: { thumbnail: '...', full: '...' },
    item_b: { thumbnail: '...', full: '...' },
    // many more entries
  };

  return assetConfig[itemType] || { thumbnail: '', full: '' };
};
```

Why This Was Disastrous

- The config object was recreated on every call. Each invocation builds a fresh `assetConfig` object, causing unnecessary allocations.

- The function ran multiple times per request. Re-executing the same logic repeatedly amplifies the allocation cost.

- Under concurrency, tens of thousands of objects were created per second. Even though the garbage collector can eventually clean them up, allocation outpaces reclamation, pushing objects into the old generation and eventually exhausting the heap.
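A back-of-the-envelope estimate shows how quickly this adds up. In the straightforward model, each call to the original `getItemAssets` allocates one outer object plus one nested object per entry. String literals are shared rather than re-created, and the optimizing compiler may elide some of these allocations. All inputs below (calls per request, request rate, entry count) are illustrative placeholders, not figures from the incident.

```javascript
// Rough model: objects allocated per second by the original getItemAssets.
// Each call builds 1 outer config object + 1 object per entry.
// All inputs are illustrative assumptions, not measured values.
function estimateAllocationsPerSecond({ callsPerRequest, requestsPerSecond, entries }) {
  const objectsPerCall = 1 + entries;
  return callsPerRequest * requestsPerSecond * objectsPerCall;
}

const perSecond = estimateAllocationsPerSecond({
  callsPerRequest: 5, // hypothetical: helper called 5 times per request
  requestsPerSecond: 500, // hypothetical load
  entries: 20, // hypothetical number of item types in the config
});
console.log(`${perSecond.toLocaleString('en-US')} objects/second`); // → 52,500 objects/second
```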

The Fix: One Small Move, Huge Impact

```javascript
// Asset configuration – created once and frozen
const ASSET_CONFIG = Object.freeze({
  item_a: { thumbnail: '...', full: '...' },
  item_b: { thumbnail: '...', full: '...' },
  // …other items
});

// Fallback for unknown item types
const DEFAULT_ASSET = Object.freeze({ thumbnail: '', full: '' });

/**
 * Returns the assets for the given item type.
 * If the type is not found, the default (empty) asset is returned.
 *
 * @param {string} itemType - key from ASSET_CONFIG
 * @returns {{thumbnail:string, full:string}}
 */
const getItemAssets = (itemType) => ASSET_CONFIG[itemType] || DEFAULT_ASSET;
```

What changed?

- Objects are created once, not on every request.

- Zero new allocations in the hot path.

- GC pressure is dramatically reduced.
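A quick way to confirm the change is to compare object identity. The original helper hands back a brand-new object on every call, while the hoisted version returns the same shared reference. One caveat: `Object.freeze` is shallow, so the nested `{ thumbnail, full }` objects in `ASSET_CONFIG` stay mutable. Every caller now shares those objects, so a recursive freeze is a cheap safeguard. The `deepFreeze` helper, `getItemAssetsOld`, and the sample file names below are illustrative, not from the original code.

```javascript
// Recursively freeze an object graph so shared config cannot be mutated by callers.
function deepFreeze(obj) {
  for (const value of Object.values(obj)) {
    if (value && typeof value === 'object' && !Object.isFrozen(value)) {
      deepFreeze(value);
    }
  }
  return Object.freeze(obj);
}

// Original pattern: a new config object (and new nested objects) on every call.
const getItemAssetsOld = (itemType) => {
  const assetConfig = {
    item_a: { thumbnail: 'a-thumb.png', full: 'a-full.png' },
  };
  return assetConfig[itemType] || { thumbnail: '', full: '' };
};

// Fixed pattern: one deeply frozen config, created once at module load.
const ASSET_CONFIG = deepFreeze({
  item_a: { thumbnail: 'a-thumb.png', full: 'a-full.png' },
});
const DEFAULT_ASSET = Object.freeze({ thumbnail: '', full: '' });
const getItemAssets = (itemType) => ASSET_CONFIG[itemType] || DEFAULT_ASSET;

console.log(getItemAssetsOld('item_a') === getItemAssetsOld('item_a')); // false: fresh object each call
console.log(getItemAssets('item_a') === getItemAssets('item_a')); // true: same shared object
console.log(Object.isFrozen(getItemAssets('item_a'))); // true: nested entry is frozen too
```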

Proving the Fix Worked

We reran the exact same test.

Before

- Heap climbed relentlessly.

- GC freed almost nothing.

- Process crashed at ~128 MB.

After

- Heap usage oscillated within a tight range.

- Minor GCs cleaned memory efficiently.

- No crashes—even under sustained load.

Final Thought

Most Node.js OOM crashes aren’t caused by “huge data” or “bad GC.”

They’re caused by small, repeated allocations in the wrong place.

Once you learn to read GC logs and control allocation rate, memory bugs stop being mysterious—and start being fixable.