Agent Factory Recap: A Deep Dive into Agent Evaluation, Practical Tooling, and Multi-Agent Systems

Source: Dev.to

How do you know if your agent is actually working?

It’s one of the most complex but critical questions in development. In our latest episode of the Agent Factory podcast, we dedicated the entire session to breaking down the world of agent evaluation. We’ll cover what agent evaluation really means, what you should measure, and how to measure it using ADK and Vertex AI. You’ll also learn more advanced evaluation in multi‑agent systems.

Deconstructing Agent Evaluation

We start by defining what makes agent evaluation so different from other forms of testing.

Beyond Unit Tests: Why Agent Evaluation is Different

Timestamp: [02:20]

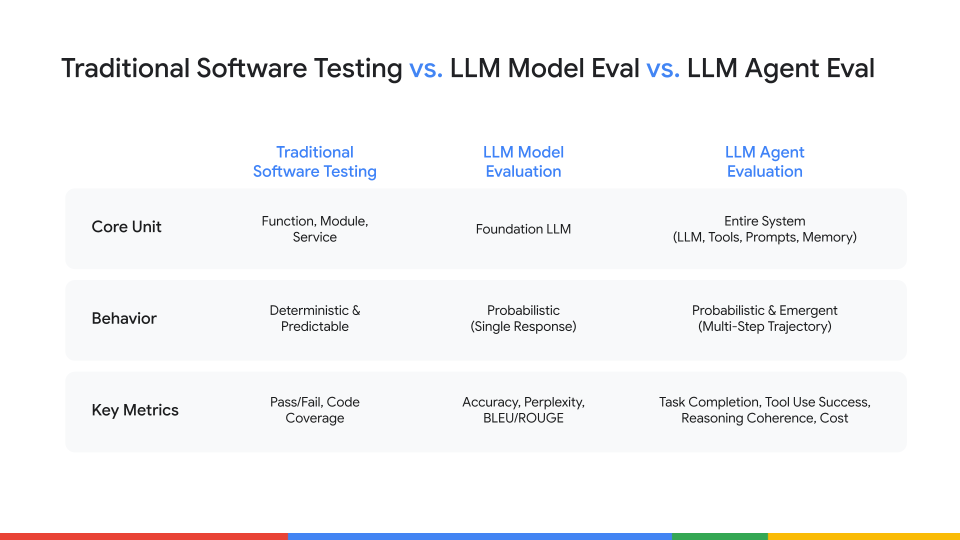

The first thing to understand is that evaluating an agent isn’t like traditional software testing.

- Traditional software tests are deterministic; you expect the same input to produce the same output every time (A always equals B).

- LLM evaluation is like a school exam. It tests static knowledge with Q&A pairs to see if a model “knows” things.

- Agent evaluation, on the other hand, is more like a job performance review. We’re not just checking a final answer; we’re assessing a complex system’s behavior—including its autonomy, reasoning, tool use, and ability to handle unpredictable situations. Because agents are non‑deterministic, the same prompt can yield two different—but equally valid—outcomes.

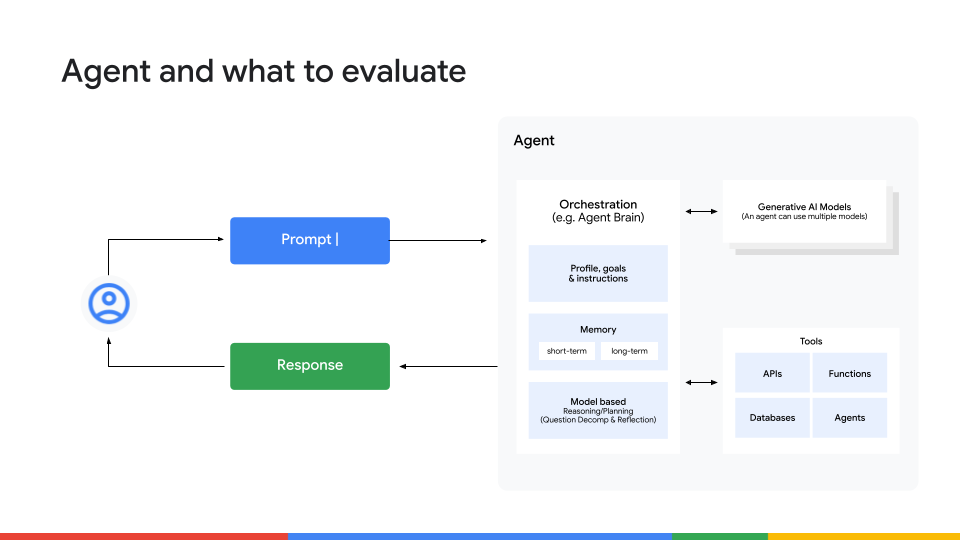

A Full‑Stack Approach: What to Measure

Timestamp: [04:15]

If we’re not just looking at the final output, what should we be measuring? The short answer is everything. We need a full‑stack approach that looks at four key layers of the agent’s behavior:

- Final Outcome – Did the agent achieve its goal? Beyond a simple pass/fail, consider quality, coherence, accuracy, safety, and hallucination avoidance.

- Chain of Thought (Reasoning) – How did the agent arrive at its answer? Verify that it broke the task into logical steps and that its reasoning is consistent. An agent that gets the right answer by luck isn’t reliable.

- Tool Utilization – Did the agent pick the right tool and pass the correct parameters? Was it efficient? Agents can get stuck in costly, redundant API‑call loops—this is where you catch that.

- Memory & Context Retention – Can the agent recall information from earlier in the conversation when needed? If new information conflicts with existing knowledge, can it resolve the conflict correctly?

How to Measure: Ground Truth, LLM‑as‑a‑Judge, and Human‑in‑the‑Loop

Timestamp: [06:43]

Once you know what to measure, the next question is how. We covered three popular methods, each with its own pros and cons:

| Method | Strengths | Limitations |

|---|---|---|

| Ground Truth Checks | Fast, cheap, reliable for objective measures (e.g., “Is this a valid JSON?” or “Does the format match the schema?”) | Can’t capture nuance or subjective quality |

| LLM‑as‑a‑Judge | Scales well; can score subjective qualities like plan coherence | Judgments inherit the model’s training biases and may be inconsistent |

| Human‑in‑the‑Loop | Gold‑standard accuracy; captures nuance and domain expertise | Slow and expensive |

Key takeaway: Don’t rely on a single method. Combine them in a calibration loop:

- Use human experts to create a small, high‑quality “golden dataset.”

- Fine‑tune an LLM‑as‑a‑judge on that dataset until its scores align with human reviewers.

- Deploy the calibrated judge for large‑scale automated evaluation.

This gives you human‑level accuracy at an automated scale.

The Factory Floor: Evaluating an Agent in 5 Steps

The Factory Floor segment moves from high‑level concepts to a practical demo using the Agent Development Kit (ADK).

Hands‑On: A 5‑Step Agent Evaluation Loop with ADK

Timestamp: [08:41]

The ADK Web UI is perfect for fast, interactive testing during development. We walked through a five‑step “inner loop” workflow to debug a simple product‑research agent that was using the wrong tool.

-

Test and Define the “Golden Path.”

- Prompt: “Tell me about the A‑phones.”

- The agent returned the wrong information (an internal SKU instead of a customer description).

- We corrected the response in the Eval tab to create our first “golden” test case.

(Continue the remaining four steps in the same concise, bullet‑point style as needed.)

Evaluation Workflow (with Screenshots)

1. Evaluate and Identify Failure

With the test case saved, we ran the evaluation. As expected, it failed immediately.

2. Find the Root Cause

We opened the Trace view, which shows the agent’s step‑by‑step reasoning process. It instantly became clear that the agent chose the wrong tool (lookup_product_information instead of get_product_details).

3. Fix the Agent

The root cause was an ambiguous instruction. We updated the agent’s code to be explicit about which tool to use for customer‑facing requests versus internal data.

4. Validate the Fix

After the ADK server hot‑reloaded our code, we re‑ran the evaluation. This time the test passed and the agent returned the correct customer‑facing description.

From Development to Production

The ADK workflow is fantastic for development, but it doesn’t scale. For production‑grade needs you should move to a platform that can handle large‑scale evaluations.

From the Inner Loop to the Outer Loop: ADK and Vertex AI

Timestamp: 11:51

- ADK for the Inner Loop: Fast, manual, interactive debugging during development.

- Vertex AI for the Outer Loop: Run evaluations at scale with richer metrics (e.g., LLM‑as‑a‑judge). Use Vertex AI Gen AI evaluation services to handle complex, qualitative evaluations and build monitoring dashboards.

The Cold‑Start Problem: Generating Synthetic Data

Timestamp: 13:03

When you lack a dataset, generate synthetic data with a four‑step recipe:

- Generate Tasks – Prompt an LLM to create realistic user tasks.

- Create Perfect Solutions – Have an “expert” agent produce the ideal, step‑by‑step solution for each task.

- Generate Imperfect Attempts – Let a weaker or different agent try the same tasks, yielding flawed attempts.

- Score Automatically – Use an LLM‑as‑a‑judge to compare imperfect attempts against perfect solutions and assign scores.

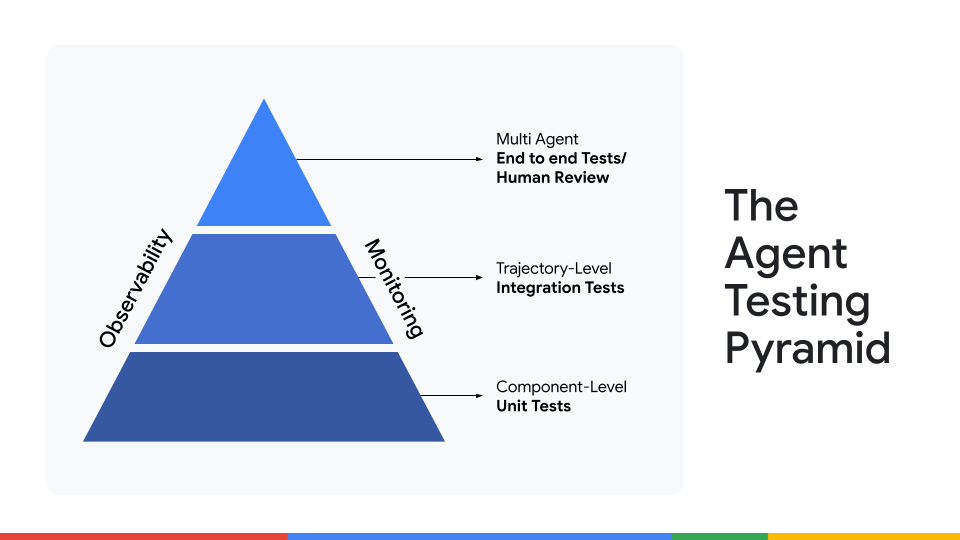

The Three‑Tier Framework for Agent Testing

Timestamp: 14:10

Once you have evaluation data, design tests that scale using three tiers:

| Tier | Purpose | Example |

|---|---|---|

| 1️⃣ Unit Tests | Test the smallest pieces in isolation. | Verify that fetch_product_price extracts the correct price from a sample input. |

| 2️⃣ Integration Tests | Evaluate the full, multi‑step journey for a single agent. | Give the agent a complete task and check that it correctly chains reasoning and tools to produce the expected outcome. |

| 3️⃣ End‑to‑End Human Review | Human experts assess final outputs for quality, nuance, and correctness. | Use a “human‑in‑the‑loop” feedback system to continuously calibrate the agent, and test interactions among multiple agents. |

The Next Frontier: Evaluating Multi‑Agent Systems

Timestamp: 15:09

As we move from single‑agent pipelines to orchestrations of many agents, evaluation must also evolve. Future work includes:

- Defining system‑level metrics (e.g., coordination efficiency, conflict resolution).

- Building automated orchestration test harnesses that can simulate complex user journeys across multiple agents.

- Extending LLM‑as‑a‑judge frameworks to assess collaborative outcomes, not just individual responses.

# Evaluating Multi‑Agent Systems

Judging an agent in isolation doesn’t tell you much about the overall system’s performance.

We used an example of a customer‑support system with two agents:

- **Agent A** – handles the initial contact and gathers the necessary information.

- **Agent B** – processes refunds.

If a customer asks for a refund, Agent A’s job is to collect the info and hand it off to Agent B.

[](https://media2.dev.to/dynamic/image/width=800%2Cheight=%2Cfit=scale-down%2Cgravity=auto%2Cformat=auto/https%3A%2F%2Fdev-to-uploads.s3.amazonaws.com%2Fuploads%2Farticles%2Flzrz06a21fgir4b6rhpp.jpg)

- Evaluating **Agent A** alone might give it a task‑completion score of 0 because it never issues the refund.

- In reality, it performed its job perfectly by successfully handing off the task.

- Conversely, if Agent A passes the wrong information, the whole system fails—even if Agent B’s logic is flawless.

This illustrates why, in multi‑agent systems, **end‑to‑end evaluation** matters most. We need to measure how smoothly agents hand off tasks, share context, and collaborate to achieve the final goal.

---

## Open Questions and Future Challenges

*Timestamp: [18:06](https://youtu.be/vTjlLiFgFno?si=vMiFs1mZw2Nvu67w&t=1086)*

We wrapped up by touching on some of the biggest open challenges in [agent evaluation](https://cloud.google.com/vertex-ai/generative-ai/docs/models/evaluation-agents?utm_campaign=CDR_0x6e136736_awareness_b448143685&utm_medium=external&utm_source=blog) today:

- **Cost‑Scalability Trade‑off:** Human evaluation is high‑quality but expensive; LLM‑as‑a‑judge is scalable but requires careful calibration. Finding the right balance is key.

- **Benchmark Integrity:** As models get more powerful, there’s a risk that benchmark questions leak into their training data, making scores less meaningful.

- **Evaluating Subjective Attributes:** How do you objectively measure qualities like creativity, proactivity, or humor in an agent’s output? These remain open questions the community is working to solve.

---

## Your Turn to Build

This episode was packed with concepts, but the goal was to give you a practical framework for thinking about and implementing a robust evaluation strategy. From the fast, iterative loop in the ADK to scaled‑up pipelines in Vertex AI, having the right evaluation mindset is what turns a cool prototype into a production‑ready agent.

We encourage you to **watch the full episode** to see the demos in action and start applying these principles to your own projects.

---

## Connect with Us

- **Ivan Nardini** → [LinkedIn](https://www.linkedin.com/in/ivan-nardini/) • [X](https://x.com/ivnardini)

- **Annie Wang** → [LinkedIn](https://www.linkedin.com/in/anniewangtech/) • [X](https://x.com/anniewangtech)